Female school students targeted in ‘obscene’ AI-generated fake nude scandal

Up to 50 teenage girls have been targeted in a series of explicit AI-generated images, which were circulated online.


Up to 50 female students at a private Melbourne school have been targeted in AI-generated explicit images.

Police are investigating after dozens of female students at Bacchus Marsh Grammar in Melbourne’s west reported fake nude images that began circulating at the school and were shared across Instagram and Snapchat.

The parents of the teenage girls, believed to be in Years 9 to 12, were told of the scandal over the weekend.


Teenage girls targeted in “obscene” fake nude scandal

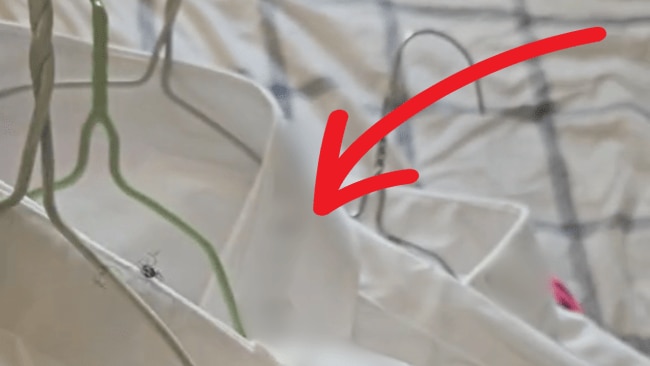

It’s believed the faces of the girls were taken from their personal Instagram profiles, per 7News.

These images were then manipulated using AI to generate disturbing and explicit photos.


The school has since confirmed that the images were circulating, with principal Andrew Neal describing the incident as “obscene.”

“It’s appalling. It is something that strikes to the heart of students, particularly girls growing up at this age,” he told the ABC.

“They should be able to learn and go about their business without this kind of nonsense.”


Mr Neal confirmed the school was working to have the images removed and to track down everyone involved in the incident.

“Logic would suggest that the most likely individual is someone at the school … or a group of people from the school, however, the police and the school are not ruling out any other possibility as well,” he said.


A teenager was arrested in connection with the explicit images, but Victoria Police confirmed he had since been released.

The Instagram account used to share the images has since been deleted.

“Officers have been told a number [of] images were sent to a person in the Melton area via an online platform on Friday, 7 June,” a spokesperson for Victoria Police confirmed in a statement.

“Police have arrested a teenager in relation to explicit images being circulated online. He was released pending further inquiries.”

Despite the images not being real, they are still considered child abuse material; those who share sexually explicit digitally-created images without consent may face up to seven years in jail.

