Rein in AI before it outsmarts us all, warn technology titans

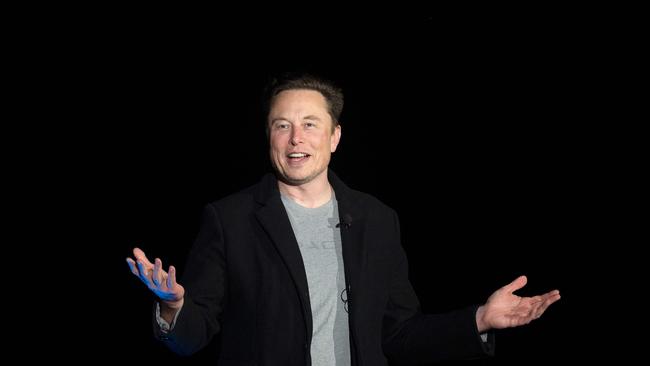

Elon Musk is among 1,000 tech executives and experts to call for an immediate pause in designing AI systems because of the risk to our civilisation.

Elon Musk is among 1,000 technology executives and experts who have called for an immediate pause in designing powerful artificial intelligence systems because of the fear that they will “outsmart and replace us”.

Musk, boss of Twitter and Tesla, has been joined by Steve Wozniak, co-founder of Apple, Yuval Noah Harari, the author of Sapiens, and AI researchers to warn that the industry is moving too fast. Musk has sounded similar warnings before but the prominence of other signatories indicates a growing sense of unease at the speed of change.

The latest update of the AI chatbot ChatGPT has taken it from the bottom 10 per cent in exams for the US Bar to the top 10 per cent.

In an open letter from the Future of Life Institute, Musk and the others wrote that AI labs were “locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control”. They said that unless there was a delay to make systems safer and allow regulators to formulate a legal framework, the machines could flood the world with disinformation and “automate away all the jobs”.

They asked: “Should we develop non-human minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilisation? Such decisions must not be delegated to unelected tech leaders.”

The tech world is in a race to develop and release AI systems that was catalysed by the creation of ChatGPT by OpenAI, a company backed by Microsoft. Google has released Bard, its own chatbot, and there has been huge investment in other AI companies by the tech industry, which has been desperate for a new platform of innovation.

The new gold rush has concerned many who believe that ethics and safety are being sidelined. They accuse the companies developing AI of a lack of transparency. Microsoft disbanded its AI ethics and safety team in January, causing alarm, although the company insisted that it comprised only a fraction of those working on responsible AI.

Musk has been the most famous critic of OpenAI, which he co-founded as a non-profit body in 2015 to promote and develop artificial intelligence in a way that would benefit humanity. He split from it in 2018 and the organisation has since set up a “capped” profit-making company that has been backed by Microsoft. Investors can make a fixed return on their capital. Musk has tweeted: “I’m still confused as to how a non-profit to which I donated [roughly] $100 million somehow became a $30 billion market cap for-profit.”

Sam Altman, chief executive of OpenAI, shrugged off the open letter, telling The Wall Street Journal that GPT-4, the latest version, underwent six months of safety testing. “In some sense, this is preaching to the choir,” he said. “We have, I think, been talking about these issues the loudest, with the most intensity, for the longest.”

Yann LeCun, head of AI at Meta, said that he “disagreed with the premise” of the letter, which called for a temporary stop on training systems more powerful than GPT-4. If the industry did not pause its efforts, governments should step in, the letter said. Other signatories included the British AI researcher Stuart Russell, Emad Mostaque, chief executive of the British AI firm Stability AI, the maker of Stable Diffusion, and researchers from DeepMind, which is based in London and owned by Google.

The British government has published its AI white paper, setting out the framework for its regulatory regime. It has indicated more of a light-touch approach than the EU, delegating much of the work to industry-level regulators. The white paper suggests that one way to regulate AI would be the statutory reporting of computing power over a certain size. Brussels has been more prescriptive, identifying certain technologies that are higher-risk, such as “deepfakes”, deceptively realistic fake images, which will require greater regulation. Canada has gone down a similar track, but the US wants a more voluntary framework.

Some of the signatories to the letter, including Mostaque and Gary Marcus, a New York University professor, said they agreed with its spirit but not all of its sentiments.

Ian Hogarth, co-author of the State of AI report, said there was a short time for governments to act. “Obviously, as time goes on, and the amount of hardware you need goes down, as the number of models proliferate, it’s much harder to wrestle back control,” he said.

Azeem Azhar, chairman of the research group Exponential View, welcomed the letter. “Pause or not, we need public dialogues about what AI could mean in the short and long term,” he said. “This letter could encourage better co-operation amongst the high-level research lab, academia and government to emphasise . . . the right safety measures.”

Some AI experts were critical. Arvind Narayanan, professor of computer science at Princeton, tweeted: “This open letter – ironically but unsurprisingly – further fuels AI hype and makes it harder to tackle real, already occurring AI harms.”

Last week Twitter was flooded with images generated by AI depicting the arrest of Donald Trump. Separately, fabricated images of the Pope have gone viral online, garnering millions of views on social media. The photos showed the 86-year-old wrapped up against the elements in a stylish puffer jacket and silver jewelled crucifix.

The Times
