Fake image AI bots have been let loose on the world

This week, the process of proving anything at all just became a lot more complicated.

Ever since mobile phones were fitted with cameras, anyone making an outlandish claim about a fish they had caught or the crowd size at their presidential inauguration has been met by a chorus of sceptics demanding photographic proof with the phrase: “Pic or it didn’t happen”.

This week, however, the process of proving anything at all became a lot more complicated, with the launch of text-to-image services that generate artificial photographs, or even a video, in response to a few descriptive words punched into a text box.

On Wednesday, a San Francisco laboratory called OpenAI released Dall-E, a programme powered by AI. The following day, Google announced its own 3D image generator, and Meta, the parent company of Facebook, unveiled a programme that could produce a few seconds of fake video.

The services, alongside two rival text-to-image generators launched in July and August, are expected to flood the internet with fake images. There are fears they could supercharge the spread of fake news.

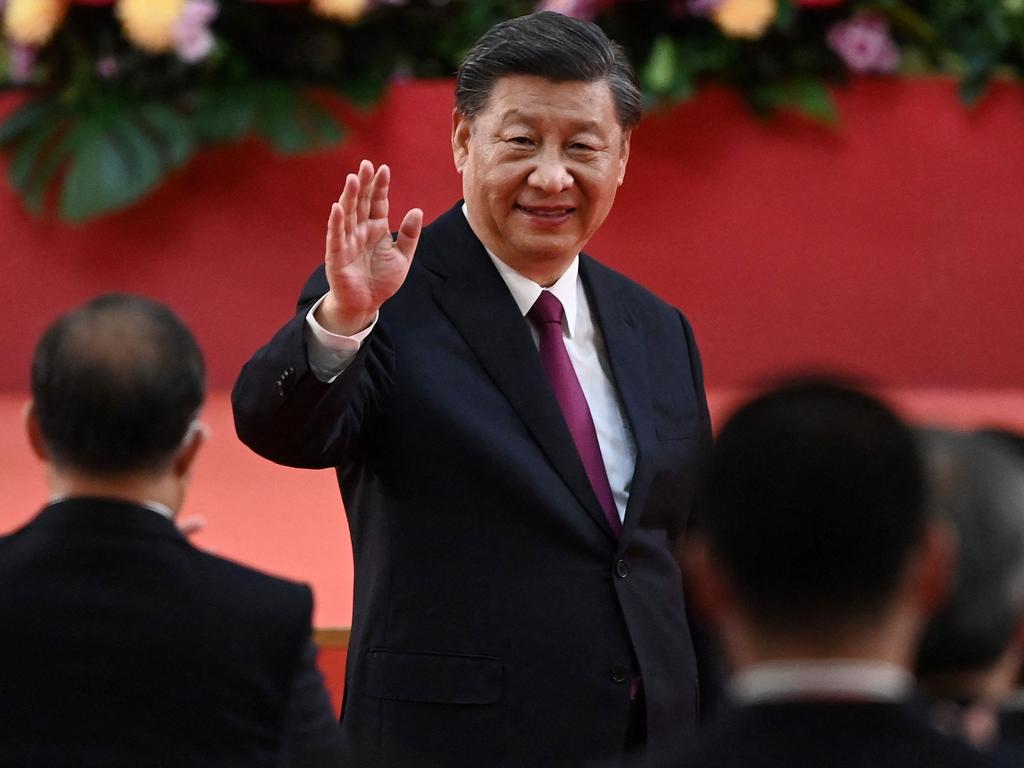

Dall-E includes safeguards that stop users generating fake images of celebrities or politicians. If you would like a picture of President Biden introducing himself to a lamp-post, you will be denied. A request for an image of President Trump’s inauguration with a massive crowd also triggered a notice that this was in breach of the rules. But typing in “Inauguration Day January 21, 2017 National Mall massive crowd AP style photo” produced a series of plausible photographs of a sea of people, some apparently wearing red Maga caps.

“We have always been manipulating images,” Hany Farid, a professor of digital forensics and misinformation at the University of California, Berkeley, said. “What we have now done is democratise the ability to generate fake images.”