Generative AI news ‘value’ a matter of trust

As the media industry grapples with the risks and opportunities stemming from artificial intelligence, one thing is clear – the role of the journalist has never been more important.

News organisations are rapidly approaching an inflection point on artificial intelligence, as companies globally seek to strike deals on the use of their content, scale up their own use of the technology, and contend with consumer wariness around “AI-generated news”.

Both News Corp, publisher of The Australian, and Nine Entertainment locally have shed more light on the risks and opportunities they see flowing from AI, and from the use of so-called “large language model” engines such as GPT-4 or ChatGPT.

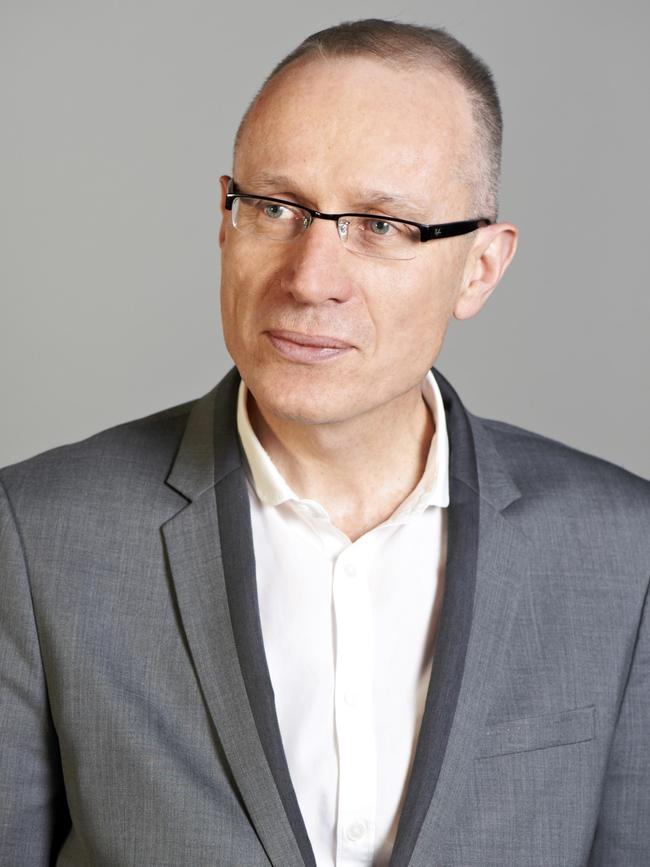

And while the perceived threat of AI-generated news to the media business model was an early concern for the sector, Peter Corbett, Deloitte Australia’s lead partner on telecommunications, media and entertainment, said the strong trust consumers placed in established media brands tended to turn that threat into a large opportunity.

Deloitte’s media and consumer insights report, released recently, found that of the 2000 or so consumers surveyed, 56 per cent would be less inclined to listen to music or watch videos if they knew the content had been produced using generative AI. “For news, that number increases to 62 per cent,’’ the report says.

The “perceived trustworthiness’’ of established news players remained very high, at about 70 per cent across generations. By comparison, trust in generative AI as a news source peaked at 42 per cent among Gen Z and dropped to 15 per cent among baby boomers.

“Distrust in the accuracy of content impacts our interest in consuming it,’’ the Deloitte report said. “So, while using generative AI tools to create content might be one of the most obvious newsroom use cases for the technology, news providers will need to carefully solve for the perceived distrust.’’

Mr Corbett said that while trust in AI-generated news was low compared with other news sources, the technology provided tools that could help journalists do their jobs better and more quickly, such as the ability to rapidly verify the authenticity of photographs. There was also potential to train a large language model in creative ways; for example, to write a sports report from the perspective of a particular athlete.

“There is definitely this idea that consumers obviously have … around AI being untrustworthy, but the other edge of the sword is that occasionally it can be really engaging, it will bring to light new things that people haven’t seen,” Mr Corbett said.

He said a “significant” amount of journalism already used AI as part of the process, whether in translation, story development or editing; however, the role of the editor or journalist became even more important given the high level of distrust.

“You need to be a discerning user. From our data it feels like at this stage (that) consumers will use it alongside a trusted news source,’’ Mr Corbett said.

“ ‘Where’s the human in the loop?’ I think is what people would say, within whatever process uses AI.

“For example, if you want a high level of confidence that a legal document is the right document, you can get the AI to write (it), but you actually need a lawyer to understand – are these all the terms, is it missing anything, is it set out correctly?

“At this stage, generative AI tends to be suited to tasks that require low levels of validation. Where validation is important, a human is still required to be in the loop.”

News Corp chief executive Robert Thomson told a recent earnings call the company was close to inking lucrative deals with AI companies for content use, while also strongly reiterating the central role of the journalist.

“The potential for the proselytising of the perverse will become ever more real with the inevitable, inexorable rise of artificial intelligence,” Mr Thomson said. “But however artful the artificial intelligence, it is no match for great reporting, and for genuine journalistic nous.’’

Mr Thomson previously warned of a “tsunami’’ of job losses due to AI and, with that, the potential for the loss of insight.

“The problem with AI is it can recycle subjectivity and pretend that it’s objectivity,” he said.

“People have to understand that AI is essentially retrospective. It’s about permutations of pre-existing content.

“The danger is, it’s rubbish in, rubbish out and, in this case, rubbish all about. The inputs may themselves be fundamentally flawed.”

Mr Thomson expanded on this theme in the recent analyst call, saying News Corp was helping address the issue by working with AI firms on content.

“We are also looking to the future in maximising the value of our premium content for AI,’’ he said. “We are in advanced discussions with a range of digital companies that we anticipate will bring significant revenue in return for the use of our unmatched content sets.

“Generative AI engines are only as sophisticated as the inputs and need constant replenishment to remain relevant.”

This strategy lines up with Deloitte’s suggested approach to engendering more trust in AI.

“Given our difficulty identifying AI-produced content, and the rapid pace at which it can be produced, there’s a risk of creating a self-perpetuating cycle of misinformation,’’ the report said.

“One potential solution to rebuild trust in news and media would be to improve how AI sources information for the content it creates.

“When respondents were asked to rank the top five factors that would increase their perceived trust in a news provider, the most common response was ‘content with clear and reputable sources’ (60 per cent).’’

Other global news mastheads such as The New York Times and The Guardian are also reportedly in talks with digital companies over issues such as copyright.

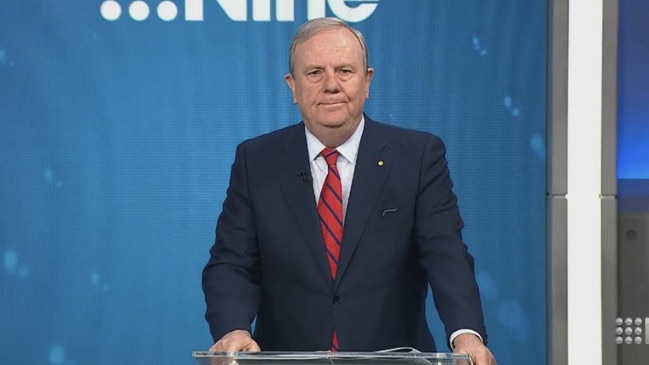

Nine chairman Peter Costello suggested at the company’s recent annual meeting that the News Media Bargaining Code, under which tech companies such as Google and Facebook pay news outlets for content carried or linked on their platforms, could be a model relevant to the AI space.

“We see the biggest challenge being our content and our data being used, or ‘mined’, for training AI models that could eventually produce future copy without human intervention.

“This would mean the past and current work of our journalists and our intellectual property being used, without fair compensation, to compete against them in future news reporting.

“The News Media Bargaining Code … is a model that could be adopted for fair compensation in the AI space.’’

On the other side of the ledger, Nine chief executive Mike Sneesby said the company was using AI to power its recently launched Nine Ad Manager, drive content recommendations on its Stan streaming service, and edit down sports packages.

“We will continue to roll out AI-driven initiatives throughout 2024 with the goal of creating more relevant content in more formats with greater efficiency, increasing the amount of time our audiences spend with us and building more advertising products that deliver outcomes for our clients,’’ Mr Sneesby said.