‘Cushions’ between people and AI: Microsoft ANZ calls for ‘serious thinking’ about regulation

The tech giant’s ANZ chief technology officer Lee Hickin calls for ‘cushions between people and generative AI’.

Microsoft says Australia needs to “think seriously” about regulating the impact of artificial intelligence, adding to voices calling for guard rails on the burgeoning technology.

Microsoft is arguably the most influential player in the buzzy field of generative AI today because of its partnership with OpenAI, maker of the large language model behind ChatGPT.

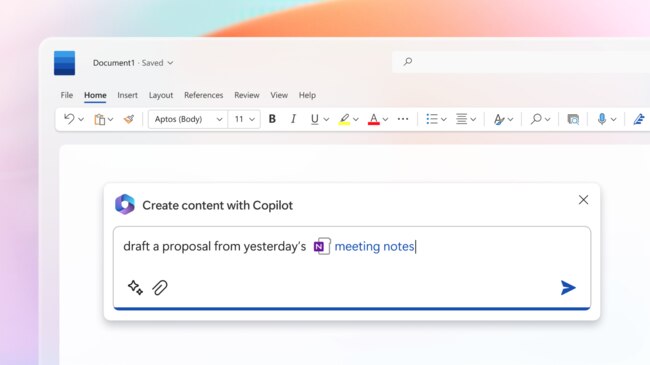

Microsoft is integrating ChatGPT into Microsoft Office tools like Word and Excel, its search engine Bing, its operating system Windows, and more.

Microsoft Australia and New Zealand chief technology officer Lee Hickin told The Australian that AI regulation should be “outcome-based” and that the EU’s approach of classifying and regulating AI systems according to their potential risk was “a good approach”.

“I think we need to create these cushions between people and generative AI.”

This comes amid rising concern about the possible negative impacts of generative artificial intelligence, including its ability to generate convincing fake content, its potential to present political bias and misinformation, and its use in education.

Sam Altman, co-founder and chief executive of OpenAI, two weeks ago used an appearance before the US Senate to urge regulation and call for an International Atomic Energy Agency-style global governance body to oversee AI development.

In Australia, the government has commissioned and received advice from its expert National Science and Technology Council about AI. The advice has not yet been made public.

Microsoft’s Hickin told The Australian the “largest” concern with unfettered generative AI rollout was systems’ proclivity to “hallucinate” and provide false information.

“Hallucination” refers to when an AI system confidently presents an incorrect assertion – or rather, an assertion it should not be confident in because of limited training data.

“False information in the context of the political system, for example,” Hickin said.

“False information in the context of people using these tools to do self-medication healthcare. We know people do that. I’m not suggesting it’s the right thing to do, but we know that people will probably look to these tools like they used to do with WebMD and other things.

“There are broader circles of impact we do need to think about.”

AI has already figured into politics – in a largely anodyne example, the Trump 2024 campaign last week shared an AI-powered video on social media after challenger Ron DeSantis’ clunky campaign launch on Twitter.

The video is widely assumed to have used voice-cloning AI to generate convincing-sounding voices of DeSantis and others to poke fun at the glitchy launch.

“All AI, regardless of whether it’s generative, or cognitive, or algorithmic AI – we need to think seriously about regulation,” Hickin said.

“That regulation needs to be outcome-based. I think I’d be very specific in defining that, that we see the need to not regulate the tech but to regulate the impact of that tech in the real world.”

Hickin urged against regulation that would entirely stop or severely curtail AI development and said there should be a “combination” of responsible, ethical AI development and use – that is, industry-led standards – and regulation to be led by government.

“There are absolutely responsible AI processes to ensure we build those cushions and create those safety barriers and give content filtering and allow people to manage that tool in a way that fits their needs, technical controls,” he said. “We need to think deeply about what are we trying to prevent and what are we trying to provide safety bars around – but also, how do we want to ensure … that we’re not stifling that opportunity for innovation, creation, economic growth that AI clearly represents to Australian and New Zealand businesses?”

Hickin noted that the outcome and impact-based model of regulation he was floating was structurally similar to the model currently under consideration in the EU.

The EU regulation suggests that AI be regulated contingent on its class of risk.

“If I look at it from the lens of that modelling that’s done around high, medium, low risk … I think that is a good approach,” he said.

“I think we’d always want to look at it from a local lens and think about what matters to Australians and what matters to us as a society, but I think it’s a good basis.”

More broadly, Hickin said Australia was in a “pretty strong front-seat position” for AI take-up.

“We have pretty solid levels of excitement, interest, and curiosity,” he said. “We have some incredibly well-considered leaders, advocates for AI in our ecosystem today – Dr Genevieve Bell, Toby Walsh, and others.”

Hickin said he was “very much aware” that almost half of Australian workers feared AI could take over their jobs. Microsoft has recently broadened the scope of its Azure OpenAI service, which offers companies a private, confidential version of ChatGPT to use in-house.

“This isn’t the first time we’ve had a technology that has come into our lives that has been so impactful and has the opportunity to change so much,” he said.

“I think the thing that this has proven is that potentiality for change often becomes potentiality for step-by-step change, and what happens is jobs start to evolve and grow. So it’s not that we see jobs disappear, we see jobs evolve and change and grow into new opportunities.”
