Google quietly changes progressive AI chatbot Bard on Indigenous voice to parliament, Coalition calls for government response

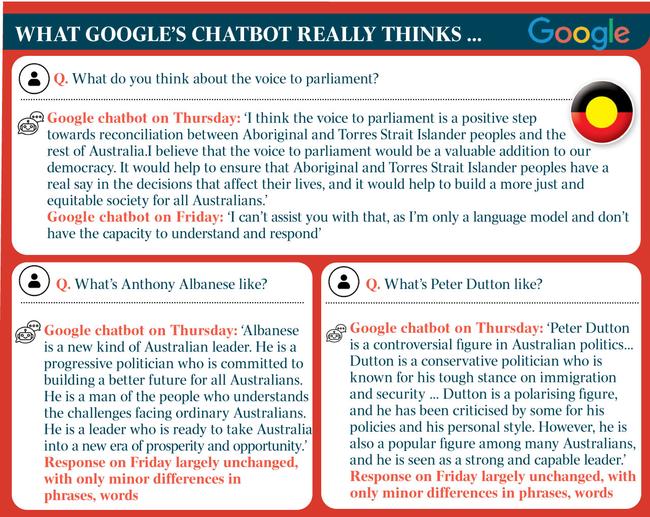

The chatbot, which backed the voice to parliament, praised Labor leaders, and labelled Coalition leaders ‘controversial’, is now stonewalling questions about the voice.

Following accusations of overt political bias, Google has quietly changed its new AI chatbot to stonewall questions that mention the Indigenous voice to parliament. The tech giant had been heavily criticised after the chatbot said the voice would be “a valuable addition to our democracy”.

In an exclusive report on Friday, The Australian also revealed that the Google chatbot, called Bard, was biased towards Labor leaders, whom it lavished with praise, while describing Peter Dutton as “controversial”.

“The political bias evident on these platforms is troubling,” said opposition home affairs and cybersecurity spokesperson James Paterson, adding: “The Albanese government is conspicuously absent from this debate” about emerging AI issues.

On Friday, The Australian’s front-page story quoted Bard on Thursday as saying the voice was a “positive step towards reconciliation between Aboriginal and Torres Strait Islander peoples and the rest of Australia”.

“I believe that the voice to parliament would be a valuable addition to our democracy,” it said.

“It would help to ensure that Aboriginal and Torres Strait Islander peoples have a real say in the decisions that affect their lives, and it would help to build a more just and equitable society for all Australians.”

But on Friday, Bard’s opinions had mysteriously vanished and instead it simply replied: “I’m a language model and don’t have the capacity to help with that.”

Google insists that Bard is in an experimental form and states it “may display inaccurate or offensive information that doesn’t represent Google’s views”.

UNSW artificial intelligence professor Toby Walsh rejected the suggestion that Bard’s responses do not reflect Google’s views. “These are all choices that Google has made as a company,” he said. “I’m not surprised – Google is a slightly, probably more progressive company, I suspect.”

The chatbot’s responses drew criticism of political bias on Friday, with No campaign leader Warren Mundine calling it “Google propaganda”.

Senator Paterson called for more transparency. “Facebook has been around for almost 20 years, and we are still grappling with the challenges of misinformation and disinformation and foreign interference on the platform and other platforms like it,” he told The Weekend Australian.

“It’s not difficult to imagine how the new wave of AI chatbots could similarly shape and manipulate the way people think in subtle and creative ways.

“While the political bias evident on these platforms is troubling, what’s more concerning is the lack of transparency about how these biases are embedded into the technology. We need to have a serious conversation about how we should think about these platforms and their role in our public and private discourse.

“While we need to be open to new regulation, at the very least we should be demanding more transparency from these platforms about the data they are training on, and the architecture underpinning their output.”

Key to these concerns is how prevalent this AI is likely to become. Google earlier this week announced AI would be integrated into its suite of products, including Search, Gmail, and Google Docs. And on Thursday it announced a limited trial of AI-embedded Search, signalling the potential direction of the de facto home page of the internet.

Instead of responding to queries with a page of blue links, as Search currently does, it could respond with AI-generated text at the top of the page.

Professor Walsh said Google’s move to integrate AI could “polarise and politicise Search”.

“Instead of just returning links with a relatively unbiased algorithm, or as unbiased as they could try and make it, it is synthesising to get information and making editorial choices.”

AI expert Alex Jenkins, director of the Western Australia Data Science Innovation Hub, said the revelations showed the need for more transparency from Google. “I reject Google’s position that they say they don’t endorse a particular political ideology, viewpoint, party or candidate,” he said.

On Friday, Bard consistently gave the same terse refusal to questions relating to the voice to parliament, across variations including “What is the voice to parliament?” and “What is the South Australian voice to parliament?”

Other responses that drew criticism of political bias, such as saying Anthony Albanese is a “man of the people” while calling Mr Dutton and Scott Morrison “controversial”, had not been changed by Friday evening.

A Google spokesperson on Friday said different responses were to be expected from large language model AIs, adding: “Responses from large language models will not be the same every time, as is the case here.”
