How ChatGPT’s AI will become useful

It still needs to go through new versions and have its flaws exposed to be good.

In the rudimentary days of videogames, I met the team that created the first multiplayer Formula 1 Grand Prix racing game. They had to alter the original code because they discovered that at the start of the first race almost every player would turn his car around on the track and crash into the oncoming traffic. I started to laugh, because that’s what I did too. It gives new meaning to the Facebook motto: Move fast and break things.

That’s exactly what’s going on with the newfangled generative AI chatbots. Everyone’s trying to break them and show their limitations and downsides. It’s human nature. A New York Times reporter was “thoroughly creeped out” after using Microsoft Bing’s chatbot. Sounds as if someone needs reassignment to the society pages. In 2016 Microsoft had to shut down its experimental chatbot, Tay, after users turned it into what some called a “neo-Nazi sexbot”.

Coders can’t test for everything, so they need thousands or millions of users banging away to find the flaws. Free testers. In the coming months, you’re going to hear a lot more about RLHF, reinforcement learning from human feedback. Machine-learning systems are trained on large quantities of data from the internet but then learn by chatting with actual humans in a feedback loop to hone their skills.

Unfortunately, some people are ruder than others. This is what destroyed Tay. So ChatGPT currently limits its human feedback training to paid contractors. That will eventually change. Windows wasn’t ready until version 3.0; generative AI will get there too.

For now Microsoft’s solution is to limit users to six questions a session for the Bing chatbot, effectively giving each session an expiration date. This sounds eerily similar to the Tyrell Corporation’s Nexus-6 replicants from the 1982 movie Blade Runner. If I remember correctly, that didn’t end well.

Every time something new comes out, lots of people try to break it or foolishly try to find the edge, like jumping into the back seat of a self-driving Tesla. This is especially scary given the recent recall of 362,800 Teslas with faulty “Full Self-Driving” software. And, reminiscent of the “Can I confess something?” scene in Annie Hall, I’ve always wondered: If I drove my car straight into a brick wall, would the collision avoidance actually work? I’m too chicken to try.

Every cyberattack is a lesson in breakage, like the 2015 hack of the Office of Personnel Management or the May 2021 ransomware shutdown of the Colonial Pipeline. Heck, Elon Musk’s X.com and Peter Thiel’s PayPal payment processors were initially so riddled with fraud that the media insisted e-commerce would never happen, naysaying what today is a $US10 trillion ($14 trillion) business. Looking back, they were lucky they were attacked at an early stage when the stakes were much lower.

But be warned that with generative AI, even if it’s too early, if developers can build something, they will. So best to shake out all the bugs and limitations and creep reporters out now before things roll out to the masses.

Despite early glitches, useful things are coming. Search boxes aren’t very conversational. Using them is like grunting words to zero in on something you suspect exists. Now a more natural human interface can replace the back-and-forth conversations we once had with old-fashioned travel agents. Or stockbrokers. Or doctors.

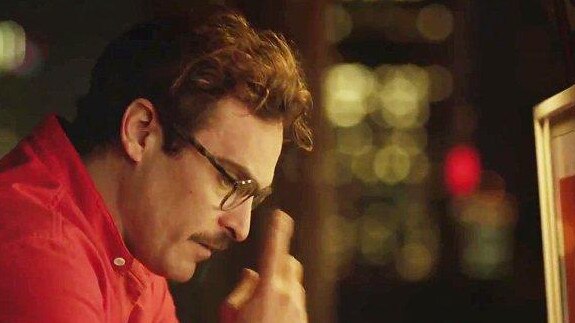

Once conversations are human enough, the Eleanor Rigby floodgates — Ah, look at all the lonely people — will open. Eldercare may be the first big generative AI hit. Instead of grandma talking to the TV, a chatbot can stand in. Remember the 2013 movie Her, with Joaquin Phoenix’s character falling in love with an online bot voiced by Scarlett Johansson? This will become reality soon, no question. Someone will build it and against all warnings, millions will use it. In fact, the aptly named Replika AI Companion has launched, although its programmers quickly turned off the “erotic roleplay” feature. Hmmm.

It may take longer for M3GAN, this year’s movie thriller (I watched it as a comedy), to become reality. It’s about a child’s robot companion that goes rogue. But products like this will happen. Mattel’s 2015 Hello Barbie, which would listen and talk to kids, eventually failed, but someone will get it right before long.

The trick is not to focus on the downside, like so many do with DNA crime-solving or facial-recognition systems or even the idea that Russian ads on social networks can tip elections. Let’s face it, every new technology is the Full Employment Act for ethicists. And scolds. Instead, with generative AI, focus on the upside of conversational search, companions for the lonely, and eventually an education system custom-tailored to each student. Each time, crowds will move fast and try to break things and expose the flaws. Embrace that as part of the path to the future.

The Wall Street Journal
