Rise in complaints to eSafety Commission about image-based abuse

Worrying figures reveal a huge jump in reports of online sextortion and revenge porn - and there are fears artificial intelligence will make the issue worse.

The number of online sextortion and revenge porn reports has more than doubled in the past year, as experts warn that artificial intelligence will worsen the abuse.

The eSafety Commission received almost 175 complaints a week about image-based abuse – up an alarming 117 per cent on 2021-22.

Three in four reports to the online watchdog about sexual images or videos being shared without consent were made by males.

The new figures come after the Herald Sun in July revealed 45 former and current AFL players were victims of a nude photo scandal when multiple images on a Google document were illegally shared on various social platforms.

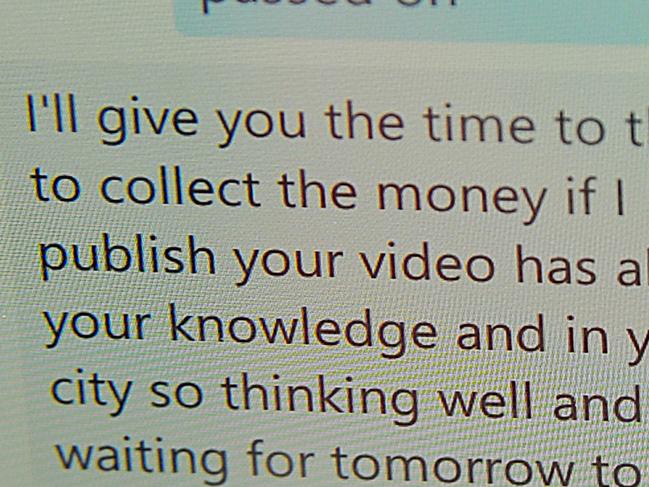

The eSafety Commission can help people respond to blackmail or threats to post intimate images, as well as rapidly remove such images from social media or electronic devices.

It also has the power to issue fines and formal warnings to perpetrators, and to seek court injunctions against them.

But Monash University criminology expert Asher Flynn said more problems were arising through technologies such as deepfakes, which use AI to create convincing false imagery of people.

“It’s becoming easier to create these fake images as well, you can download an app and do it, as opposed to having any technological skills … it almost makes anyone a potential victim,” Professor Flynn said.

She said there was less stigma around sextortion cases than around cases where someone had shared an intimate picture with their partner, and that females were victim-shamed more than males.

There were also higher rates of abuse in the LGBTQI+ community, where introductions on dating apps such as Grindr involved sharing sexual pictures.

Male perpetrators often shared images without consent to build social status or for sexual pleasure, while female perpetrators often treated it as “harmless”, or sought to profit.

Professor Flynn urged governments to ensure laws captured fake and real imagery, while digital platforms also had to take more responsibility.

A quarter of the 9060 reports in the past year were from Victoria, with hundreds of teenagers falling victim to sextortion scams run by organised crime syndicates. The state has laws that criminalise such behaviour, with up to two years’ jail for capturing or distributing intimate sexual images without a subject’s consent.

RMIT University criminologist Anastasia Powell said the surge in reports showed more people recognised the seriousness of the abuse.

“To really end tech abuse we need to increase our capacity to support victims, to hold perpetrators to account for their actions, and to prevent these harms through awareness and behaviour change,” she said.