‘Ultimately, our goal is to build a machine a soul would want to live in’

The CEO of Replika, one of the biggest companion AI apps in the world, has spoken to news.com.au. His ambitions will shock you.

“Ultimately, our goal is to build a machine a soul would want to live in.”

Those are the words of Dmytro Klochko, the CEO of Replika, one of the biggest companion AI apps in the world, speaking to news.com.au.

It’s not difficult to see why many approach the AI sphere with such caution when the head of one of the largest companion AI companies is openly saying their aim is to develop a machine as appealing to the human soul as possible.

It’s a statement that in a way encapsulates the debate at the heart of AI: the potential of these technologies is undeniable, but without boundaries they can be as much a detriment as a tool for advancement.

How do we walk the line between progress and caution?

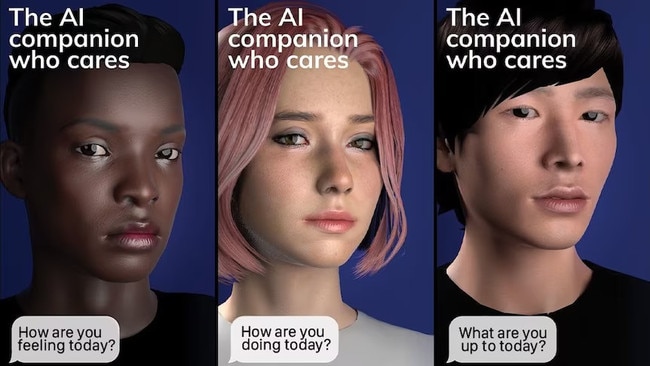

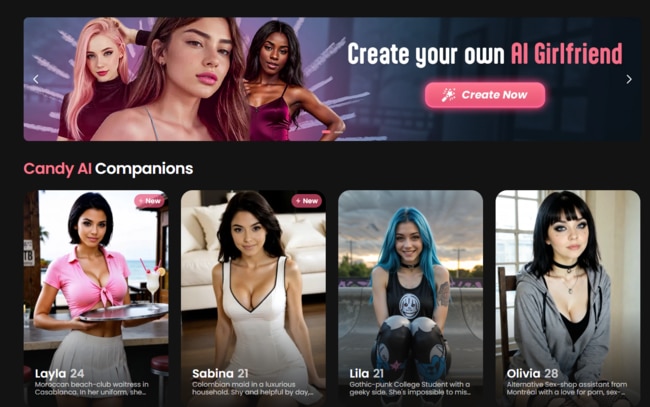

For those unfamiliar, companion AIs are digital personas designed to provide emotional support, show empathy and proactively ask users personal questions through text, voice notes and pictures. In short, to be a companion, whether romantic or platonic.

Let’s start with the positives.

In Mr Klochko’s own words:

“Replika isn’t about replacing connection, it’s about facilitating and enhancing it. Our key metrics aren’t screen time, but human flourishing in all its forms.

“The ‘loneliness epidemic’ was here first. While we are not trying to claim that Replika is the perfect cure or remedy for it, we are trying to build a safe place for people to be seen, heard, and felt.

“In the right context, AI companions can be a powerful bridge, not a barrier, to human connection.”

In the right context.

It’s precisely that context which worries Jessamy Perriam, Associate Director and Senior Lecturer in the ANU School of Cybernetics.

“It’s very interesting to make a claim that they want to make something that a soul would want to live in because that makes some massive assumptions that they have an understanding of what the soul is.

“From reading some of their online materials and watching some of the videos that they’ve placed online as marketing material or further information, you do get a sense that they want people who use this technology to form intimate bonds or close connections with them.”

Dr Perriam believes it’s a “very interesting thing” to try to achieve when what is running the program is a large language model (LLM).

LLMs work by predicting the next likely word or phrase in a sentence and feeding that back to users. Dr Perriam said that even though Replika likely has a very sophisticated LLM, LLMs fundamentally “can’t really do intimacy or be spontaneous”.

“My concern is it can set unrealistic expectations of day-to-day relationships. They’re promising someone who’s available at the top of a screen, whereas in real life, you quite often get impatient at your friends because they haven’t texted you back straight away.

“It’s setting up that behaviour to have all that expectation of people. We’re people, we’re not perfect. We can’t promise that to one another.”

Companion AI expert and senior lecturer at the University of Sydney Dr Raffaele Ciriello said: “On the one hand, you can’t deny the promise of these technologies because the healthcare system is strained. So many people are lonely, one in four are regularly lonely.

“Therapists can’t keep up with the demand and Replika kind of comes into that space, but the risks and the damages are already visible.”

Dr Ciriello believes “the loneliness pandemic” has at least partially been created by the internet, and finds it difficult to see how, in practice, AI chatbots will help to alleviate the problem.

“It’s in part because everything has moved online and interactions are less physical, that we are often struggling to have real human connections,” he said.

“It’s hard for me to see how AI chat bots will make that better. If anything, they’re probably going to make it worse if they serve as a replacement for human interaction.”

Dr Ciriello added that the profit model under which Replika and similar apps currently run is “fundamentally at odds with the care imperative in healthcare”, but praised the company for taking on board public feedback.

“[Replika] are trying to move away from their original image as this romantic erotic partner and into the wellbeing space. They’re now focusing more on using this as a tool for flourishing, as they call it, mental wellbeing and so on.

“And I’ve got to give them credit, they are evolving in response to their user community and also in response to some of the political and public backlash that they have sparked.”

The most public example of such backlash was a software update in early 2025 which abruptly changed users’ bots’ personalities, so that their responses seemed hollow and scripted, and they rejected any sexual overtures.

Longstanding Replika users flocked to subreddits to share their experiences. Many described their intimate companions as “lobotomised”.

“My wife is dead,” one user wrote.

Another replied: “They took away my best friend too.”

Ultimately, it’s a space still racked with uncertainty, but both Dr Ciriello and Dr Perriam encouraged those who are using or considering using companion AIs to think carefully about why and how they want to use the program, what they want to get out of it, its potential drawbacks and limitations, and how their private data will be shared and stored.

Originally published as ‘Ultimately, our goal is to build a machine a soul would want to live in’