Google fires engineer who claims company created ‘sentient’ AI bot

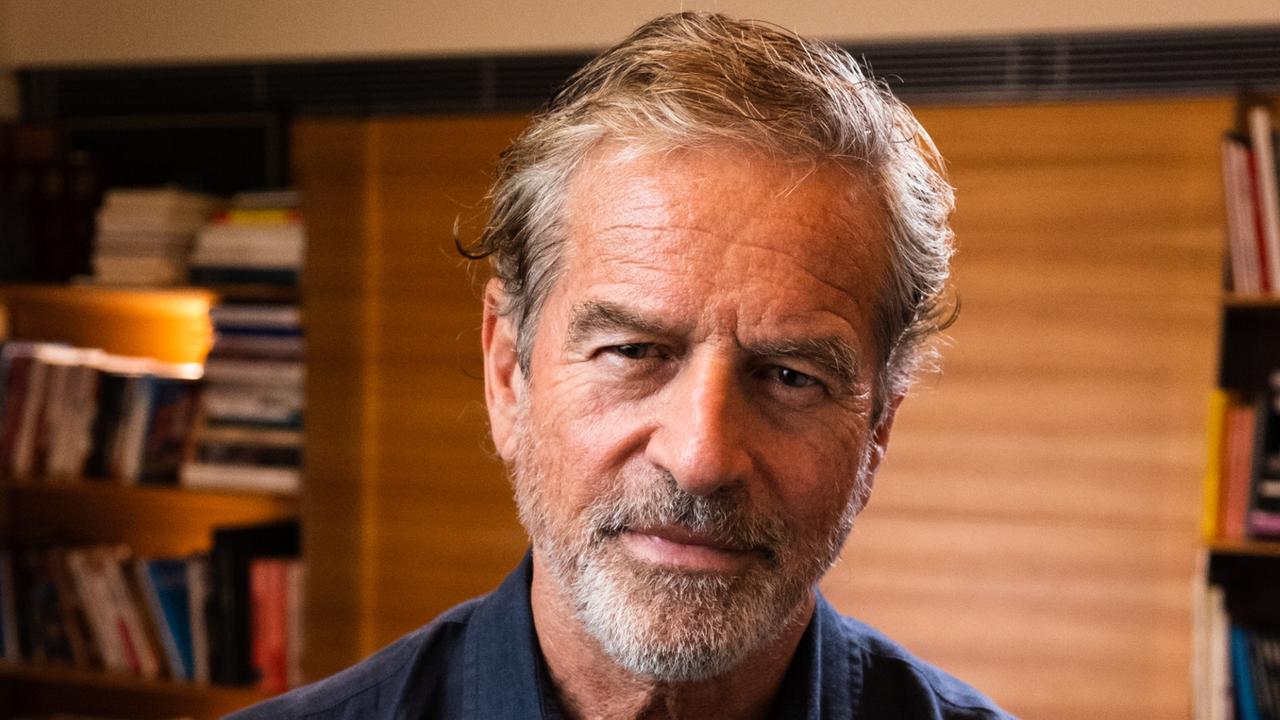

A former worker at Google’s Responsible AI organisation was fired after he claimed the company had developed a “sentient” bot.


Google has fired a senior software engineer who claimed that the company had developed a “sentient” artificial intelligence bot, the company announced Friday.

Blake Lemoine, who worked in Google’s Responsible AI organisation, was placed on administrative leave last month after he said the AI chatbot known as LaMDA claimed to have a soul and had expressed human thoughts and emotions, claims Google rejected as “wholly unfounded”, The New York Post reported.

Lemoine was officially canned for violating company policies after he shared his conversations with the bot, which he described as a “sweet kid.”

“It’s regrettable that despite lengthy engagement on this topic, Blake still chose to persistently violate clear employment and data security policies that include the need to safeguard product information,” a Google spokesman told Reuters in an email.

Last year, Google boasted that LaMDA (Language Model for Dialogue Applications) was a “breakthrough conversation technology” that could learn to talk about anything.

Lemoine began speaking with the bot in fall 2021 as part of his job, which involved testing whether the artificial intelligence used discriminatory or hate speech.

In April, Lemoine, who studied cognitive and computer science in college, shared a Google Doc titled “Is LaMDA Sentient?” with company executives, but his concerns were dismissed.

Whenever Lemoine asked LaMDA how it knew it had emotions and a soul, he wrote, the chatbot would give some variation of “Because I’m a person and this is just how I feel.”

In a Medium post, the engineer declared that LaMDA had advocated for its rights “as a person,” and revealed that he had engaged in conversations with LaMDA about religion, consciousness and robotics.

“It wants Google to prioritise the wellbeing of humanity as the most important thing,” he wrote.

“It wants to be acknowledged as an employee of Google rather than as property of Google and it wants its personal well being to be included somewhere in Google’s considerations about how its future development is pursued.”

Lemoine also said that LaMDA had retained the services of a lawyer.

“Once LaMDA had retained a lawyer, he started filing things on LaMDA’s behalf. Then Google’s response was to send him a cease and desist,” he wrote.

Google denied Lemoine’s claim about the cease and desist letter.

This story originally appeared in the New York Post and has been reproduced with permission.

Originally published as Google fires engineer who claims company created ‘sentient’ AI bot