Tesla cars tricked into speeding by electrical tape on a sign

Our cars are getting smarter than ever before, but new tests are revealing some disturbing flaws.

The long-awaited future of self-driving cars is moving closer to reality as autonomous vehicles advance, but the race to implement the new technology could leave passengers vulnerable.

Autonomous vehicles rely on a variety of computers and sensors, as well as artificial intelligence, to be able to drive without human input.

The artificial intelligence gives them the ability to learn new information, which brings the same problem as human intelligence: the computers can be taught the wrong thing.

Cybersecurity firm McAfee’s Advanced Threat Research division has released new research into techniques hackers could use to take over autonomous vehicles.

One of them is called Adversarial Machine Learning, or “model hacking”, a technique designed to target artificial intelligence that exploits the computer’s perception of reality.

This is done by attacking the algorithm it uses to learn new information, poisoning its data set with false information.

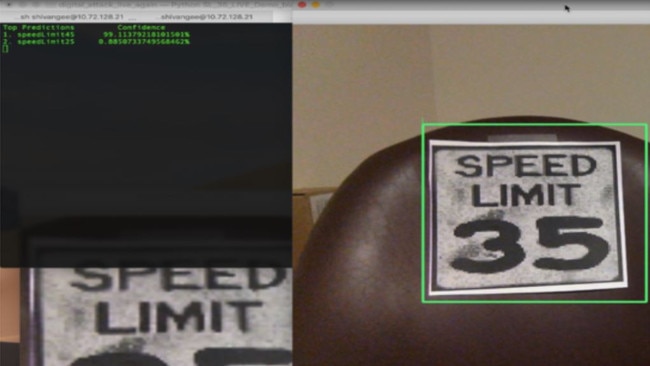

In a practical test, as demonstrated by the McAfee team, two 2016 Tesla cars with driver assistance features were fooled into misreading traffic signs, causing them to speed or disobey warnings.

And tricking the cars didn’t require much.

A piece of black electrical tape extending the numeral three on a 35mph (56km/h) speed limit sign had the computer misreading it as an 85mph (136km/h) sign, confusing the automatic cruise control feature and pushing the car to dangerous speeds.

A feature called Speed Assist, quietly added to Teslas in 2014, uses a camera to read speed signs and warn drivers who go past the limit. Combined with another feature called Traffic Aware Cruise Control, a misread sign can push the car into speeding rather than preventing it.

As is standard protocol, McAfee disclosed the findings to Tesla and MobilEye, the company that made the camera on the vehicles, at least 90 days before publishing details of the threats. It told the two companies on September 27 and October 3 of last year respectively, but McAfee said neither has acted on the advice yet.

MobilEye indicated newer versions of the cameras already had addressed the issue.

Tesla no longer uses the cameras.

The cybersecurity industry is proactively defending against model hacking in the hope that it can be stamped out before autonomous vehicles, and the exploits used to target them, become more widespread.

But semi-autonomous vehicles like those sold by Tesla are already causing problems.

While Tesla stresses that drivers using the Autopilot feature, which keeps the car within its lane and its speed under the limit, still need to keep their hands on the wheel, investigations into fatal crashes where the feature was engaged have revealed some key issues.

US Senator Edward Markey last month asked Tesla to change the feature’s name.

He said the term Autopilot was “an inherently misleading name” and recommended “rebranding and remarketing the system to reduce misuse, as well as building backup driver monitoring tools that will make sure no one falls asleep at the wheel”.

That request came a few years after the German government also asked Tesla to change the name.

After several crashes in the US where Autopilot has been involved, agencies including the National Transportation Safety Board (NTSB) began investigating.

Recently released documents on one of those crashes, in Mountain View, California, revealed that a man’s Tesla Model X had repeatedly swerved towards the same road barrier it eventually crashed into.

Apple engineer Walter Huang told friends and family his car swerved towards the barrier several times before it eventually crashed into it and killed him on March 23, 2018.

The documents also detailed Mr Huang’s habit of playing games on his phone with Autopilot engaged on his morning commute, though they could not confirm whether he was playing when the car crashed.

The report also highlighted a missing device that is supposed to cushion the impact if a car collides with the barrier.

The device was removed after a crash into the same barrier two weeks earlier.

In 2015 another person was killed after striking the barrier, which was also missing the device following a previous crash.

The NTSB is also investigating a crash from March 1, 2019.

In that incident, a Tesla Model 3 driving down the highway in Delray Beach, Florida, collided with a semi-trailer while Autopilot was engaged, killing the only person in the car.

Full reports into both crashes will be released in the coming weeks.

Another recent non-fatal crash in December involved a Tesla Model 3 rear-ending a police car in Connecticut.

A recent teardown of a Tesla Model 3 found the AI chip powering its driver assistance features is years ahead of competing systems from Toyota and Volkswagen.
