‘Serious risks’: Artificial intelligence giant OpenAI develops voice cloning tool that works with just 15 seconds of sample audio

With just 15 seconds of sample audio, a powerful new piece of technology can accurately clone someone’s voice and have them say anything, raising “serious risks” for society.

Technology that can accurately clone a human voice from just 15 seconds of sample audio won’t yet be released to the general public because of the “serious risk” of misuse.

Artificial intelligence giant OpenAI has developed the tool, called Voice Engine, which can turn text prompts into spoken audio mimicking someone’s voice.

In an announcement on its website this week, the company said it began experimenting with the technology in early 2023, collaborating with “a small group of trusted partners”.

OpenAI shared some examples of how Voice Engine had been used by those partners, from generating scripted voiceovers to translating speech while retaining the subject’s accent.

One powerful use came from the Norman Prince Neurosciences Institute in Rhode Island, which uploaded a poor-quality 15-second clip of a woman presenting a school project to “restore the voice” she later lost after suffering a brain tumour.

“We are choosing to preview but not widely release this technology at this time,” OpenAI said.

The company wants to “bolster societal resilience against the challenges brought by ever more convincing generative models”.

It conceded there were “serious risks” posed by the technology, “which are especially top-of-mind in an election year”.

Mike Seymour is an expert in computer-human interfaces, deepfakes and AI at the University of Sydney, where he’s also a member of the Nano Institute.

“Other companies have been doing similar stuff with voice synthesis for years,” Dr Seymour told news.com.au. “This OpenAI news isn’t coming out of nowhere.”

What makes this announcement significant is the apparent high quality of the audio produced by Voice Engine and the fact that such a small sample is needed.

“In the past, you’ve had to provide a lot of training data to get a voice synthesis,” Dr Seymour said.

“I had my voice done a few years ago, and I had to provide something like two hours of material to train it on.

“Where we’ve moved to now is newer technology that has kind of a base training and then it has a specific training – and the specific training in this case is only 15 seconds long.

“That’s very powerful.”

‘Serious risk’ for misuse

Since OpenAI released its startling ChatGPT chatbot, built on the GPT-3.5 model, in late 2022, a raft of advancements in artificial intelligence has been unveiled.

A host of companies, from Google to Adobe and Microsoft, have shown off their own tools, including ones that produce realistic images and video from text prompts.

Concerns were quickly raised about the potential for AI to fuel misinformation and disinformation, particularly surrounding politics.

A deepfake image of former US President Donald Trump resisting arrest while his wife Melania screams at police officers went viral last year.

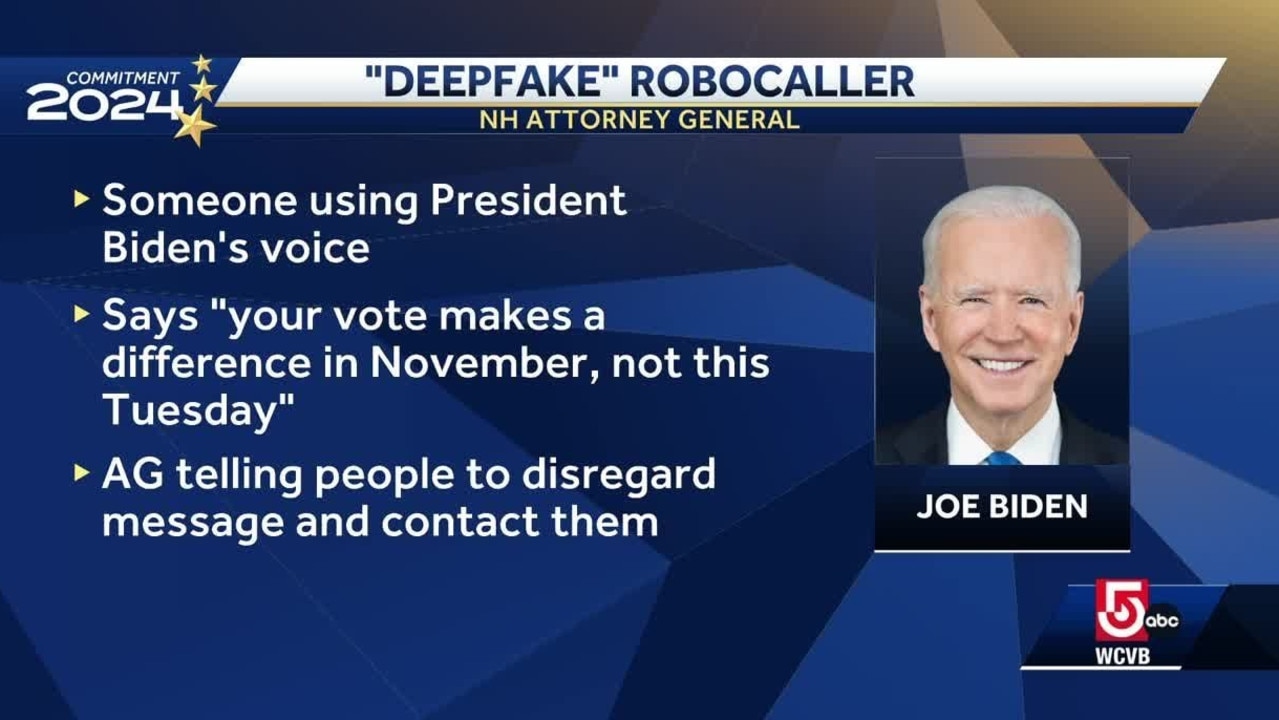

During the recent US primaries, a number of voters in New Hampshire received a nefarious robocall featuring an AI-generated imitation of President Joe Biden’s voice, urging them not to cast a ballot.

In February, a company in Hong Kong was stung by a deepfake scam to the tune of HK$200 million (AU$40 million).

An employee working in the unnamed multinational corporation’s finance department attended a video conference call with people who looked and sounded like senior executives at the firm and made a sizeable funds transfer at their request.

Hong Kong Police acting senior superintendent Baron Chan told public broadcaster RTHK that the company clerk was instructed to complete 15 transactions to five local bank accounts.

“I believe the fraudster downloaded videos in advance and then used artificial intelligence to add fake voices to use in the video conference,” Mr Chan said.

“We can see from this case that fraudsters are able to use AI technology in online meetings, so people must be vigilant even in meetings with lots of participants.”

Dr Seymour said there’s a risk of people being deceived by voice cloning, especially when it comes to financial fraud.

Technology like Voice Engine, which produces convincing, high-quality output, could be used to give a voice to the common text-based scams that already fleece people out of millions of dollars each year.

A recent SMS con saw people receive messages purportedly from their children, saying their phone and purse had been stolen and asking for money.

While many saw through the ruse, plenty of Aussies were stung.

“Imagine you get a call and it’s your daughter’s voice and she says she’s stuck somewhere, she’s been robbed, she needs money, and she gives you an account to transfer it to,” Dr Seymour suggested.

“That’s terrible, right? What would any parent do in that situation? They’d respond to that kind of emergency.”

Until now, people have generally been able to trust a familiar voice. Some major financial institutions even use voice recognition to verify identities and give access to accounts.

“This technology potentially takes away another pathway we have of preventing scams,” Dr Seymour said.

There’s also potential for voice cloning to be used to sow unrest or disrupt democratic processes, with OpenAI itself citing the risks of the technology during an election year in the US.

“There’s that expression that a lie travels around the world twice before the truth gets out of bed in the morning and puts its pants on,” Dr Seymour said.

“So, if you were to generate a highly inflammatory audio clip and maybe make it sound like it was recorded on a street with some background noise and a bit muffled stuff, of someone taking a bribe or doing something atrocious, that would probably get a bunch of attention before it could be disproved as a fake, and the harm would be done.”

Another issue is that audio is much easier to fake than video or imagery, Dr Seymour said, pointing to the now-infamous problem AI seems to have with illustrating human fingers.

“With video, the data rates are so much bigger, there’s more dimensionality upon which for you to detect a fault,” he said.

“If you’re listening to something, you’ve obviously got an ear for it, and there’s a couple of dimensions to how you’re listening to it, but with vision, there’s tons of different dimensions to how you might pick it.

“The action could appear unrealistic, the colour could pop, the motion could pop, the frames could corrupt, the image could represent something that doesn’t happen, like someone with seven fingers.”

Some big potential benefits

The example provided by OpenAI of the young woman getting her voice back after a brain tumour robbed her of it is “extraordinary”, Dr Seymour said.

“This is an area I’ve actually researched,” he said of the potential for voice synthesis in the medical field.

“If we just look at strokes – 15 million people [globally] will have a stroke this year. Five million will die, five million will recover, and five million will live with some kind of acquired brain injury.

“And one of the classic ones is dysphagia on the face and a problem with being able to articulate and speak. It’s very frustrating for them. It’s also very frustrating for their caregivers because you can’t get much or as much from that person.

“Being able to improve that communication, allowing them to connect with people more, is brilliant.”

For some time now, ‘voice banking’ has been offered to people diagnosed with degenerative diseases that cause the gradual loss of speech.

“It’s like saving your persona digitally,” Dr Seymour said. “It’s called ‘banking’: if you know your voice will degrade as the disease gets worse, like Lou Gehrig’s disease, you can store away your voice now to use later.

“The idea is that those people can type and communicate, and it’ll still be their voice rather than an artificial one.

“The technology that enabled it was hard work, but it’s been getting progressively better. And it’s marvellous, because it’s horrendous to think of losing yourself behind a degenerative disease, especially in cases where the internal cognitive abilities of the person are unimpaired, but their ability to articulate has been lost.”

‘Negatives outweigh the positives’

OpenAI has committed to starting “a dialogue” on how Voice Engine can be responsibly deployed and how society “can adapt” to the technology.

“Based on these conversations and the results of these small-scale tests, we will make a more informed decision about whether and how to deploy this technology at scale,” the company said.

“We are engaging with US and international partners from across government, media, entertainment, education, civil society and beyond to ensure we are incorporating their feedback as we build.”

Professor Toby Walsh is a researcher in AI technology at the University of New South Wales and appeared on 3AW Radio in Melbourne this morning, where he described Voice Engine as “scary”.

“I think the scariest part is it only needs 15 seconds of your voice,” Professor Walsh said.

“I could just find 15 seconds of most people’s voice from social media and now I could say anything I want them to say.

“What they’ve [OpenAI] done is, they’ve trained a neural network on thousands and thousands of hours of speech, so it knows how to speak.

“Then to make it sound just like you, it only needs 15 seconds.”

While he can see the potential positive applications of the technology, he’s of the view that “the negatives far outweigh the positives”.

Originally published as ‘Serious risks’: Artificial intelligence giant OpenAI develops voice cloning tool that works with just 15 seconds of sample audio