‘AI regulators should look to the auto industry’: Workday

The technology has ‘some inherent risk’ – just like driving cars – and, like cars, should be built and tested for safety, the head of AI at HR software giant Workday says.

Shane Luke, artificial intelligence boss at human resources titan Workday, says regulators should take their cues from the automotive industry as they decide how best to rein in the much-hyped technology.

Western governments are taking different approaches to AI’s rampant rise. The European Union is introducing a specific Act, whereas the Albanese government is following the US’s lead in believing most concerns can be allayed by existing laws, and expects AI to inject up to $600bn a year into the national economy by 2030.

Mr Luke – who says there is “some inherent risk with AI” – believes regulators and companies should look to the automotive industry, saying cars are still being made despite being dangerous. The difference is, they’re built and tested for safety.

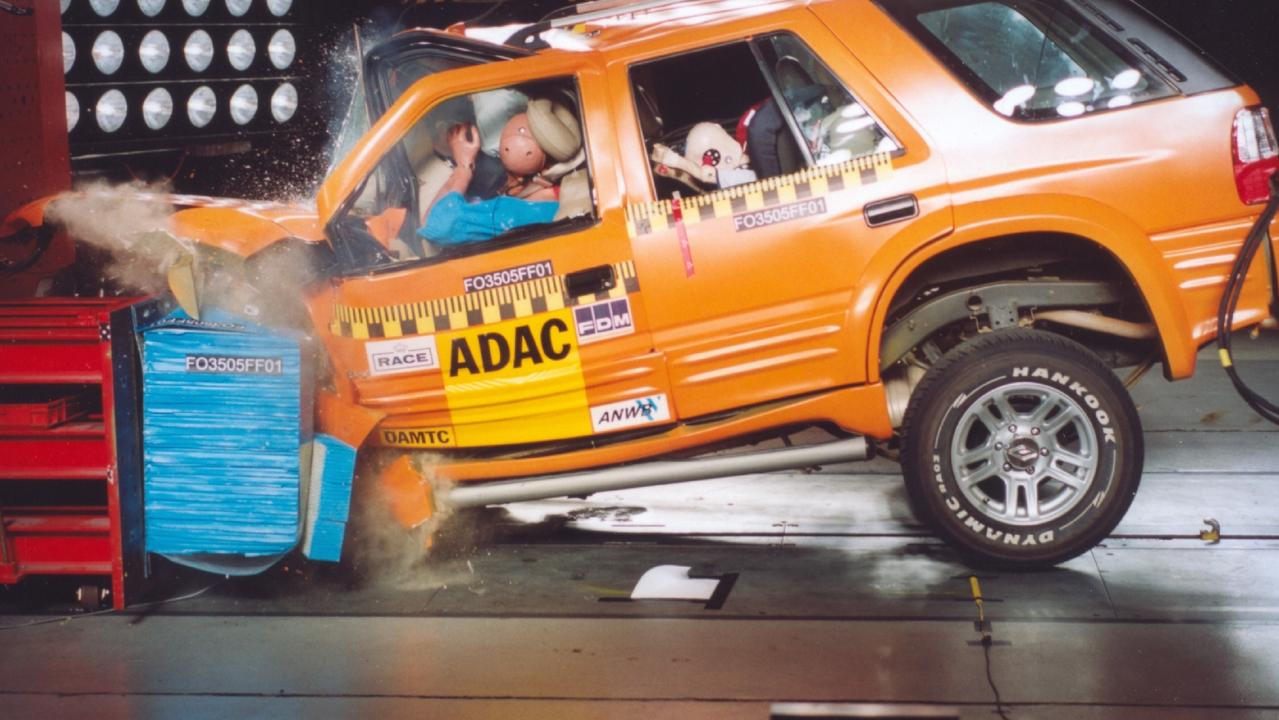

“When cars first came out, they were literally like death traps if you got in an accident. This giant engine was in front of you with nothing to block it from coming back into the cabin. There were no seatbelts, the doors can open easily. They’ll talk like you know, and over time, they evolved to get much safer. They also got much more capable,” Mr Luke said.

“Now, they have airbags, seatbelts, automatic braking systems, all kinds of stuff that helps make them safer. But there’s always a risk. No, there’s no zero risk situation. So what we really think about is how to mitigate those risks and then how to remediate if they occur.

“We want regulation that is in line with what you would do to build for safety. So that’s the most important part. We want that regulation to be there, but we don’t want it to stifle innovation.”

At Workday, he said a big part of enhancing the safety of AI systems was having a guided user interface (UI) that limits what a person can do with a large language model and ensures it is being used to fulfil its purpose – and, crucially, that humans stay in control.

This is aimed at ensuring that biases don’t creep in – as happened in the US, where AI resume scanning tools screened out women who had taken career breaks or time off to have children.

But this was an example in which humans had surrendered control to AI or, as Sam Altman, chief executive of ChatGPT maker OpenAI, told Microsoft’s Build conference in Seattle last week, expected AI to do all the work.

Mr Luke said giving enterprise customers a guided user interface could help overcome such biases and stop the technology going astray.

“We’re going to let you know that you’re interacting with a large language model in the background, and so it’s not you know, it’s not a source of truth. It’s an aid for you as the source of truth. So managing an end product is an important part of using these technologies and making sure the user understands that,” he said, again channelling the automotive industry.

“It’s a safety system designed to put you in charge of the content. Just like, if I don’t go and buckle my seatbelt – I’m not using the safety system in the car, and it warns me … even if you’re going like four miles an hour … it starts beeping at you. And so putting in the right level of safety system at the right time for the user is important.”

The other big concern with AI is overcoming hallucinations, where the model will deliver inaccurate, nonsensical or made-up answers to certain prompts.

Mr Luke said Workday was aiming to mitigate this by restricting the data the AI model had access to, so it was “less likely” to hallucinate, “simply because it doesn’t have access to that information”.

“Extending the car metaphor, maybe as far as I could stretch it right. You can almost think of it like I’ve put a limiter on your speed. So if you do get in an accident, you can’t be going fast enough to hurt yourself.”

For example, Mr Luke said popular large language models, when prompted, could write a short story for children or a job description for a vacancy at a particular company.

“Ours won’t do both. It can’t create that story for your kids. But it gives me a job description for it.

“We’re committed to building out the safety technologies for these systems that allow businesses to mitigate those risks and fully take advantage of the benefits.”

Originally published as ‘AI regulators should look to the auto industry’: Workday