Google DeepMind’s program does geometry like a maths champ

Problem-solving AI hailed as a big step towards development of a ‘general intelligence’

Let ABC be any triangle with sides AB = AC. Prove that the angle ABC = the angle BCA. Can you do it? Well, now a computer can too - and it can solve far harder problems besides.
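For readers who want to check their answer, here is one classical argument, offered as a sketch and not as the program's own output. Compare the triangle with its mirror image, matching A to A, B to C and C to B. Two sides and the angle between them agree, so the two triangles are congruent and their corresponding angles are equal:

$$
AB = AC,\quad \angle BAC = \angle CAB,\quad AC = AB
\;\Longrightarrow\; \triangle ABC \cong \triangle ACB
\;\Longrightarrow\; \angle ABC = \angle ACB = \angle BCA .
$$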

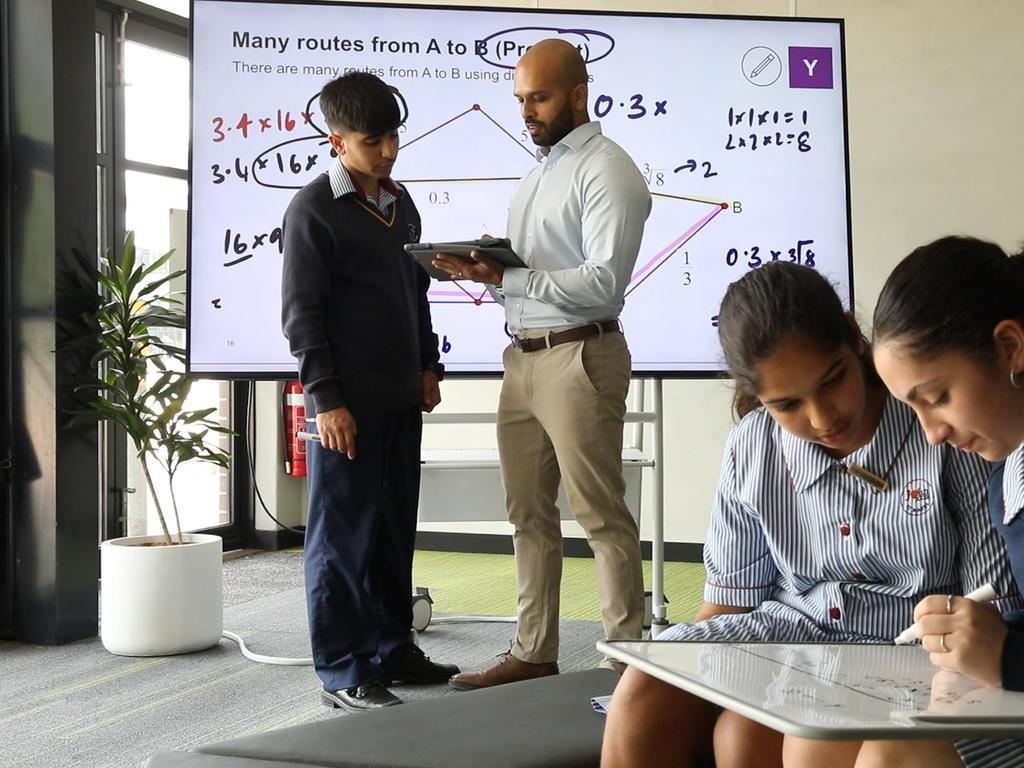

At the International Mathematical Olympiad (IMO), the annual meeting of the world’s best teenage mathematicians, Google DeepMind has unveiled a program that can compete with the best humans. Establishing, as above, that an isosceles triangle has two equal angles may seem straightforward, although it is something most forms of artificial intelligence (AI) struggle with, as do many humans. The program can go much further. This ability, its developers argue, marks a step towards a more general kind of AI.

When tested on the far less intuitive problems given to IMO competitors, considered the elite of pre-university mathematicians, the program solved 25 out of 30.

Quoc Le, of DeepMind, said “mathematical reasoning, especially geometry, is one of the grand challenges for AI”, adding: “If someone told me we were able to solve IMO problems using AI even a few years ago, I would have said it was impossible.”

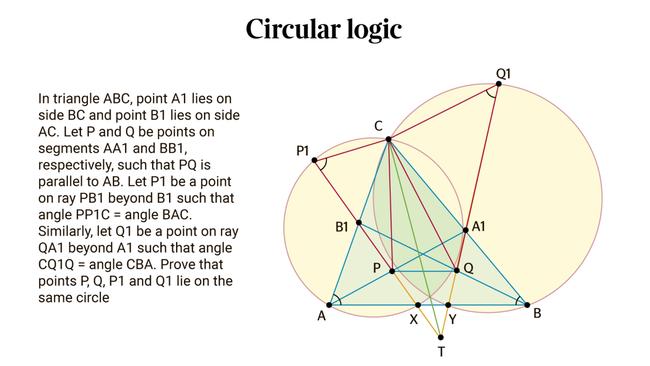

Training a program to solve geometry problems is difficult in part because there is a paucity of examples to teach it with. The problems involve proving a general relation between sets of shapes; for example, that the corners of a square all lie on the circumference of a single circle.

Although there are plenty of proofs in the literature, few are translated into the kind of language that can be standardised and machine-read. To get round this difficulty, DeepMind created the data themselves. The team made half a billion random geometrical objects and derived all the relationships between points in those objects - then generated proofs of the properties. They then trained a language model, a little like ChatGPT, that could identify a new geometric problem and spot likely ways to tackle it.

Of course, ChatGPT and other AI systems can be very confident and very wrong. To guard against this, the program tries out suggested approaches and assesses them using the formal rules of geometry and logic - just as a mathematician might believe an approach is plausible, then try it and see that it is not. In this way, a paper in the journal Nature shows, the system could work at the level of a top human. The first part of the program gave it intuition, the second rigour.
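The suggest-then-check idea can be illustrated with a toy sketch in Python. This is not DeepMind's code: the facts, rules and ranked list of suggestions below are invented for illustration, the "language model" is replaced by a fixed list, and a suggested construction is simply treated as a new fact. The checker accepts only conclusions that follow from the stated rules.

```python
from typing import FrozenSet, Iterable, List, Optional, Set, Tuple

# A rule says: if every premise is known, the conclusion is known too.
Rule = Tuple[FrozenSet[str], str]


def deduce(facts: Set[str], rules: Iterable[Rule]) -> Set[str]:
    """Rigour: apply the rules repeatedly until nothing new follows."""
    known = set(facts)
    rule_list = list(rules)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rule_list:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(
    facts: Set[str], rules: List[Rule], goal: str, proposals: List[str]
) -> Optional[List[str]]:
    """Intuition plus rigour: try suggestions in order until the goal is derived.

    Returns the suggestions that were tried, or None if the goal was not reached.
    """
    known = deduce(facts, rules)
    used: List[str] = []
    for construction in proposals:
        if goal in known:
            break
        used.append(construction)  # hypothetical suggestion from the "model"
        known = deduce(known | {construction}, rules)
    return used if goal in known else None


# Toy version of the isosceles problem (all statements are illustrative strings).
FACTS = {"AB = AC"}
GOAL = "angle ABC = angle BCA"
RULES: List[Rule] = [
    (frozenset({"AB = AC", "M is the midpoint of BC"}),
     "triangle ABM is congruent to triangle ACM"),
    (frozenset({"triangle ABM is congruent to triangle ACM"}), GOAL),
]
# Stand-in for the language model's ranked guesses; the first one is a dead end.
PROPOSALS = ["D is the reflection of A in B", "M is the midpoint of BC"]

if __name__ == "__main__":
    print(solve(FACTS, RULES, GOAL, PROPOSALS))
```

In this sketch the first suggestion leads nowhere and the checker derives nothing from it; only after the midpoint is added does the goal follow. A wrong guess costs time but can never produce a false proof, which is the point of pairing the two parts.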

DeepMind’s Thang Luong said the work was an advance towards a more human kind of intelligence. “With deep reasoning you have to ... see the big picture,” he said. Showing that AI was capable of it was “an important step on our quest towards artificial general intelligence”, he said.

The findings were welcomed by other mathematicians. Ngo Bao Chau, who won a gold at the IMO and later a Fields Medal - the mathematical Nobel - called the results “stunning” but said that geometry was a good place to start because its problems are relatively constrained.

Thomas Fink, director of the London Institute for Mathematical Sciences, called the work “an important milestone in automated theorem-proving” but added that he did not think mathematicians would be redundant any time soon.

“Just being true isn’t enough to make a conjecture worth writing about,” he said. “It also needs to advance our understanding.” Spotting which theorems were important and which were trivial still needed a human touch, Fink argued.

The Times
