Surveillance cameras with AI are watching you

AI surveillance is already more advanced than most of us know.

Surveillance cameras with artificial intelligence are zooming in on us around the world, and they know exactly who we are.

Higher camera resolutions and more sophisticated computer algorithms can confirm our identities in real time, checking a face against images in government databases, such as passport photos and driver’s licences.

These images and other biometric data will increasingly be used by law enforcement to identify you, and by local government and even private organisations to check that they are dealing with the right person.

Many will applaud the benefits of facial recognition, such as quickly identifying criminals who rob a convenience store. Banks could confirm they are dealing with the real you by checking your face against an image accessed nationally through the Department of Home Affairs.

Australian AI plans

Two pieces of proposed legislation are being reviewed by the Parliamentary Joint Committee on Intelligence and Security. One would make the Department of Home Affairs the agency that handles requests for identification checks using biometric data held by federal and state governments, while the other would allow passport images and data to be used for those domestic checks as well.

The debate over this push for facial recognition is hardly raising a ripple in the community, unlike the dissent over privacy that followed the Australia Card proposal in 1985.

People at that time both applauded and condemned plans for Australians to carry a national identification card. The Hawke Labor government saw it as a weapon against tax evasion and social security fraud, and as a way of detecting illegal immigrants.

The government even used the Australia Card as a trigger for a double-dissolution election in 1987. Although the government won that election, technical issues and a coalition of opposition parties, tax evaders, cash economy practitioners and civil libertarians sank the proposal.

Today we face more surveillance and tracking than the Australia Card could ever have mustered, and that monitoring goes well beyond the issues covered by the two bills.

As long as your head remains attached to your neck, you are infinitely more identifiable than you ever could be via a plastic card with a serial number.

A facial recognition system is good if it means citizens are shielded from identity theft. The fear of being captured on camera may dissuade criminals from robbing an all-night service station or convenience store, or from attacking a woman on her way home late at night. It can act as a substitute for police walking the beat.

Chinese warning signs

But China’s mass surveillance is a warning of what can happen if the technology runs free, particularly if we let computers assess images and act as judge and jury without human checks and balances. It’s technology that we need to firmly place boundaries around.

The combination of higher camera resolutions and sophisticated computer algorithms that identify people in real time has proven a game changer. This so-called artificial intelligence (AI) capability means that machines do the arduous work of interpreting vision and checking people against known faces in government image databases, such as passport photos and driver’s licences, to confirm identities.

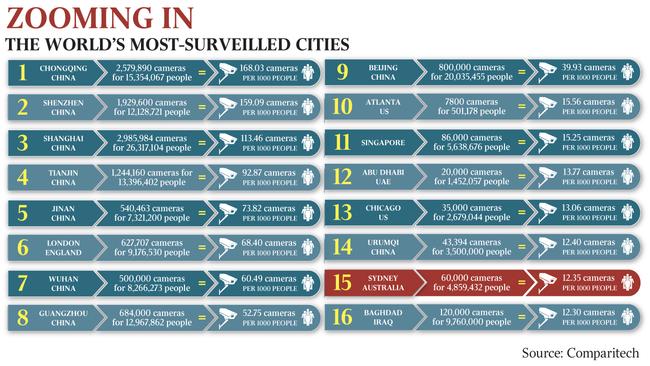

Closed-circuit television cameras combined with AI are being adopted in cities across the world at incredible rates. A survey of the 50 most surveilled cities released this month by Comparitech listed eight Chinese cities in the top 10, along with London and Atlanta. Sydney, with 60,000 cameras, makes the top 20 on the strength of its ratio of cameras per head of population.

Chongqing in southwest China has more than 2.58 million cameras to watch 15.3 million people. Shanghai has three million cameras, and Shenzhen, on the border with Hong Kong, has 1.9 million.

Perhaps the most unnerving statistic is the projection that by next year, China could have up to 626 million CCTV cameras monitoring 1.4 billion people, roughly one camera for every two people.

In 1984, just eight years after the death of Mao Zedong, I travelled in China as a backpacker, and the local population didn’t seem to have access even to cameras with colour film. Most local tourists I met on trains used what looked like a Chinese remake of the popular Brownie box camera of the early 20th century. The transformation of China in 35 years is more than staggering.

When it comes to surveillance, one camera in China these days can identify more than a dozen people in a crowd in real time, and the AI capability is spreading to other devices.

In February last year, police in Zhengzhou in central China began trialling Beijing LLVision Technology’s AI mobile glasses. The company told The Wall Street Journal the glasses could identify people from a database of 10,000 suspects in 100 milliseconds. The glasses are wired to an offline copy of a facial recognition database small enough to carry in your pocket.

Suranga Seneviratne, from the University of Sydney’s School of Computer Science, says this AI capability has emerged only in the past five years, and it is becoming more capable year by year. AI can increasingly recognise people from different camera angles without even seeing their full face.

“It has been already demonstrated that you might need only one image of a person to correctly identify him from different angles and different resolutions in future,” Seneviratne says.

Mood and health in view

Cameras are not only identifying people. They are working out what we are doing.

Amazon Go stores in Seattle in the US recognise what goods you are buying. There are no cashiers; items are charged to your Amazon account automatically as you walk out of the shop.

According to reports, China has been using cameras not only to identify people but also to automatically flag them for minor offences such as jaywalking.

Cameras may soon be able to assess your mood and health.

“If you add other signs, like your walking patterns, something related to your mannerisms, it is possible that you could infer some of the health characteristics of a person. Those types of things we can expect will improve over time,” says Seneviratne.

It would be a good thing for a camera to identify someone fainting in the street and call a doctor or ambulance. On the other hand, a camera empowered by AI might misinterpret your behaviour as public drunkenness.

In the West, communities are embracing facial recognition to different degrees.

A New York school is using facial recognition to alert staff to adults and students who are not permitted on the grounds, The Wall Street Journal reported last month. That sounds like a reasonable use. On the other hand, a proposal to use facial recognition at US school camps so that parents could check remotely whether their children were having a good time met opposition.

One school district wanted to use facial recognition technology for “disciplinary purposes as well as threat assessments”. Each use case needs to be weighed carefully.

Some US airports and airlines are beginning to use facial recognition to make it easier for passengers to check in baggage, get through security and board planes, so they can move through airports with minimal paperwork. JetBlue, Delta and Air France are reportedly trialling this.

Already watching us

In Australia, CCTV systems are deployed for traffic monitoring, crime investigation and deterrence, personal and property security, and preventing identity theft.

Darwin Council is adopting 138 new CCTV cameras powered by IndigoVision’s artificial intelligence. When activated, the system can use facial recognition, geofencing, number plate recognition and target pursuit, although the council does not hold a licence for this advanced functionality.

Digital rights body Electronic Frontiers Australia (EFA) cites the trial of facial recognition by Stadiums Queensland at sports venues and concerts, claiming that this goes beyond the technology’s original charter.

The EFA says the adoption of facial recognition to combat terrorism during the Gold Coast Commonwealth Games gave authorities a taste of its usefulness. It is now part of general policing at stadiums in Queensland. The City of Perth has trialled it at the new Perth Stadium.

EFA chairwoman Lyndsey Jackson says the main concern lies in the software and artificial intelligence behind those cameras. She cites shopping centres, cafes and airports as examples.

“More and more, facial recognition is a potential feature of a range of different software technologies. And there isn’t the legislation, the community standards or the regulation about whether or not that application should be turned off or on.

“And if it is turned on, how is that data and that information allowed to be stored and shared?”

Not everyone is embracing facial recognition technology. San Francisco, situated at the gateway to Silicon Valley, has banned its city agencies from using facial recognition, not only because of its potential for mass surveillance, but also because of the city’s troubled past use of earlier technologies that were inaccurate and that profiled citizens.

Whether a jurisdiction uses CCTV empowered with artificial intelligence falls outside the scope of the Identity-Matching Services Bill before federal parliament. The bill proposes to task the Department of Home Affairs with processing identification requests from government agencies, local government and private sector bodies.

Two services would use AI under the proposal. One is a facial verification system that answers yes or no when asked if a submitted image matches a particular person’s photo on file. It would be available to local government and private organisations to check that people are who they claim to be, with the aim of tackling identity theft.

The other service would let law enforcement, intelligence services and anti-corruption bodies identify an unknown person of interest and obtain biometric information held about them.
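The bills, as described here, do not spell out how the matching works. As a rough, hypothetical illustration of the difference between the two services, the Python sketch below assumes each face has already been converted into a numeric “embedding” vector by some recognition model, and compares vectors using cosine similarity against a threshold. The function names `verify` and `identify`, the 0.9 threshold and the sample vectors are all invented for illustration; this is not the Home Affairs system.

```python
from __future__ import annotations

import math
from typing import Dict, List, Optional

# Hypothetical face "embeddings": real systems produce these vectors with a
# trained neural network. The numbers used below are invented for illustration.
Embedding = List[float]

# Hypothetical match threshold. Raising it reduces false matches but means
# more genuine matches are missed; real operators would tune this value.
THRESHOLD = 0.9


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Similarity of two embeddings: 1.0 means they point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / norm


def verify(probe: Embedding, on_file: Embedding, threshold: float = THRESHOLD) -> bool:
    """1:1 verification: does the submitted face match ONE person's photo on file?
    The answer is only yes or no."""
    return cosine_similarity(probe, on_file) >= threshold


def identify(probe: Embedding, gallery: Dict[str, Embedding],
             threshold: float = THRESHOLD) -> Optional[str]:
    """1:N identification: search every record in the gallery for the best match
    to an unknown face. Returns that record's ID, or None if nobody clears the threshold."""
    best_id: Optional[str] = None
    best_score = threshold
    for person_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id


if __name__ == "__main__":
    # Two hypothetical records on file and one hypothetical camera capture.
    gallery = {"person_a": [0.9, 0.1, 0.2], "person_b": [0.1, 0.95, 0.1]}
    probe = [0.88, 0.12, 0.21]
    print(verify(probe, gallery["person_a"]))  # True
    print(verify(probe, gallery["person_b"]))  # False
    print(identify(probe, gallery))            # person_a
```

The design difference is the point: verification compares a face only with the record of the person it claims to be, while identification searches everyone on file. Because every extra record is another chance for a false match, the identification service's error and privacy risks grow with the size of the database it searches.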

The Australian Passports Amendment Bill would let federal, state and territory agencies share travel document data, including passport images, under the same vetting arrangements.

Under the plan, Home Affairs would process requests, but the identifying data, such as driver’s licence images, passport images and other personal data, would remain with the state or federal agency that compiles it.

Submissions to the committee characterise the legislation as formalising arrangements already in train that give law enforcement agencies access to biometric data.

Having one central agency scrutinise requests is probably a smaller nightmare than having hundreds of agencies across the country exchanging data with each other independently and under the radar.

With computer storage plentiful and cheap, any citizen could in theory carry data about everyone in their region on a tiny microSD card, and store photos of everyone in the country on a multi-terabyte hard drive bought over the counter.

In its submission, the Law Council of Australia has called out the broader use of personal data that people entrusted to government for specific purposes.

“The Law Council notes that the identity-matching services operating through the Interoperability Hub will use information taken for a particular purpose for other purposes for which the consent of individuals has not been obtained,” its submission says.

It seems a case of privacy betrayal by government.

Misuse fears

Stakeholders are voicing concerns that information could be misused through inadequate protection, and questioning whether private sector access to personal data is appropriate. They are calling for better safeguards. Another concern is the use of computers to make decisions directly from images captured by CCTV.

In November, Abacus News, which covers tech in China, reported that a CCTV system had supposedly caught prominent businesswoman Dong Mingzhu jaywalking. The system, automatically acting as police, judge and jury, put an image of her on a big public screen to name and shame her. Unfortunately, it had mistakenly captured her image from signage on a passing bus. She was nowhere near the area.

These outcomes are possible when we leave everything to cameras and computers. It’s one of many facets of our brave new world of machines.

Cameras with artificial intelligence undoubtedly offer great benefits in public safety, security and in the fight against terrorism.

But it’s a technology that society has barely come to terms with.
