Hi-tech damage control

Some police officers simply cannot unsee horrific images.

It’s been years since former prosecutor Angela Smith looked at the child exploitation photos that formed part of a case she was working on.

She has prosecuted murderers and rapists since then, but it’s the faces of these unknown victims, all of them children, that remain with her.

Her job meant she saw just a handful of the graphic images. Police building the case must look at thousands.

“People come out of child protection quite broken because of the damage of looking at that stuff every day,” says Smith, president of the Australian Federal Police Association. “It can mess with your brain.”

Smith, along with police forces and courts around the country, recognises that working with child protection material (the term for graphic pictures and videos of sexual acts perpetrated on children) can have a devastating impact.

The plight of officers who are forced to sift through the growing number of sex abuse images has sparked calls for artificial intelligence to replace human beings in the sorting and categorising of the material.

The Administrative Appeals Tribunal recently finalised compensation orders for an Australian Federal Police officer who was diagnosed with post-traumatic stress disorder after working for four years with the AFP, including a stint with Child Protection Operations.

The female officer lodged a claim with Comcare for compensation in 2014 due to “depression and anxiety” as a result of “exposure to CPO material”.

Comcare accepted her claim and the officer received an insurance payout of $200,000 plus superannuation orders of more than $1000 a month.

Police associations across Australia are now pushing for urgent changes to legislation and court practices to allow the use of artificial intelligence to assess horrific images of child exploitation and violence. Studies and trials are being run with the Queensland Police Service’s elite Taskforce Argos and the AFP.

Growing horror

The amount of child exploitation material that police forces, and ultimately police officers, are dealing with jumps every year.

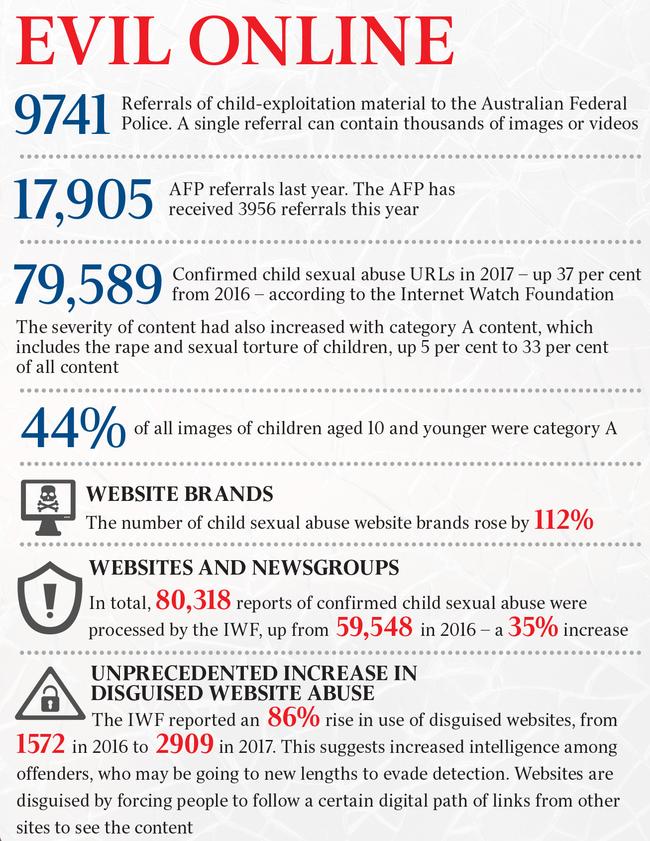

In 2017, the AFP received 9741 referrals of child exploitation material. Last year it received 17,905 referrals.

A single referral can sometimes contain thousands of images or videos. The AFP has received 3956 referrals this year.

South Australian paedophile Ruecha Tokputza was jailed last week for 40 years after being convicted of abusing 13 children in Thailand and Australia.

Investigating police discovered 12,500 images and 650 videos of child exploitation material.

A West Australian father who was jailed this week for 10 years for his abuse of young girls was caught with almost two million child exploitation images and videos.

Offenders often store and trade images.

The Internet Watch Foundation reported 79,589 confirmed child sexual abuse URLs in 2017, up 37 per cent from 2016.

The severity of content had also increased. Category A content, which under Britain’s classification system includes the rape and sexual torture of children, rose 5 percentage points to make up 33 per cent of content.

In 2017, 44 per cent of images showing children appearing aged 10 or younger were assessed as Category A.

Scott Weber, chief executive of the Police Federation of Australia — an umbrella body for police unions — says officers who work in child protection are forced to view hundreds or even thousands of “horrific” images in order to categorise the offending.

Most states require police to view all of the images and videos found on offenders’ electronic devices in order to categorise the seriousness of the offending for criminal proceedings.

Commonwealth officers classify category four images as ones that show penetrative sexual activity between children or adults and children.

Sampling method

Weber says legislation and court practices need to change to allow artificial intelligence to be used to assess the images as evidence and to reduce the trauma to police.

“Legislation needs to be updated to deal with technology advances,” he says. “Let’s get it straight away instead of waiting two or three years.”

Weber also wants to relax the requirement that every image be viewed and classified. Instead, he suggests a threshold or sampling method be introduced across Australia, following a change to the Criminal Procedure Act in NSW that allows for random sampling.

Law Council of Australia president Arthur Moses SC says the use of AI by police could require changes in legislation or court guidelines and practice notes, depending on the specific AI-informed decision-making model considered.

He says AI has the potential to make significant contributions to law enforcement and Australia’s justice system. But he warns that relying on AI in investigations and court proceedings carries significant risks, and that the legislation or court guidelines need to be clear about what model of AI involvement is being proposed.

“This is especially so when the subject matter concerns serious criminal offences and the exploitation of society’s most vulnerable — our children,” he says. “This scrutiny is critical to protect vulnerable victims, as well as to preserve the sanctity of court proceedings, the right to a fair trial and to safeguard convictions secured from possible procedural challenge.”

An AFP spokesman says the AFP is developing AI to tackle the growing issue of online child exploitation in Australia.

He says an initiative with Monash University and government bodies could vastly increase the speed and volume at which the AFP can identify and classify child exploitation material.

“In doing so, it will ensure more people are held accountable for these abhorrent crimes,” he says.

“There is a critical need for this new technology given the ever-increasing amount of illegal content, particularly child exploitation material, either found in AFP investigations or reported to the agency.

“The amount of illegal content being referred to the AFP currently far exceeds our capacity to manually process it,” he says.

Hidden problems

In 2017, the AFP engaged Phoenix Australia, the national centre for post-traumatic mental health, to survey its employees.

While only 9 per cent of respondents reported symptoms consistent with a likely diagnosis of post-traumatic stress disorder, AFP chief medical officer Katrina Sanders tells The Australian the true figure is likely closer to 20 per cent of the force, a rate consistent with that among members of the defence forces.

She says police operations are 24/7 and police are expected to work in a “demanding and high-tempo” environment.

High-risk areas such as child protection and counter-terrorism have more mental health checks than other departments, she says.

“We certainly recognise the risk in these roles,” Sanders adds.

The stigma around mental health conditions has eased in recent years, so more people are self-reporting issues. “I suppose the nature of crime can change but people will always be required to respond to crime,” Sanders says.

Taskforce Argos has been working with Swedish technology company Griffeye for the past decade to develop technology to catch offenders, including helping to train algorithms on real images of child abuse so they can recognise and classify the photos.

Griffeye CEO Johann Hofmann says it is necessary for AI technology to be trained on data that is relevant and big enough to produce high-quality results.

“Since our AI technology can automatically detect and classify child sexual abuse material with high precision, it has not only speeded up investigations but also reduced the exposure to the material,” he says.

Hofmann says the technology still relies on the person behind the computer to do the heavy work, and that AI is not the only solution.

Only way forward

Last year Taskforce Argos detective inspector Jon Rouse said harnessing technological evolutions like AI was “the only way forward”.

Computers were able to filter out, with a high level of accuracy, data that investigators didn’t need to focus on, Rouse said.

“The finite resources we have for victim identification can be centred on the images and videos that really matter, with an end goal — identify and remove from harm more child victims faster,” he said in a Griffeye release.

A Queensland Police spokeswoman says the service remains open to further collaboration with Griffeye on expanding AI in this field.

“The QPS continues to incorporate innovation into its operational and intelligence-driven methodologies and is prepared to trial and assess technological opportunities which reduce risk to staff and improve outcomes for victims,” she says.

Smith says the forces’ willingness to use technology is a good thing.

She says the human brain cannot filter out images the way a computer can, and that for health reasons, rotations in certain sections should be limited to 12 months.

And, despite the risk of psychological trauma and the hardship of the job, Weber says police put their hands up to work in high-risk areas.

“These police officers realise they have to do this job, to lock up the most heinous offenders,” he says.
