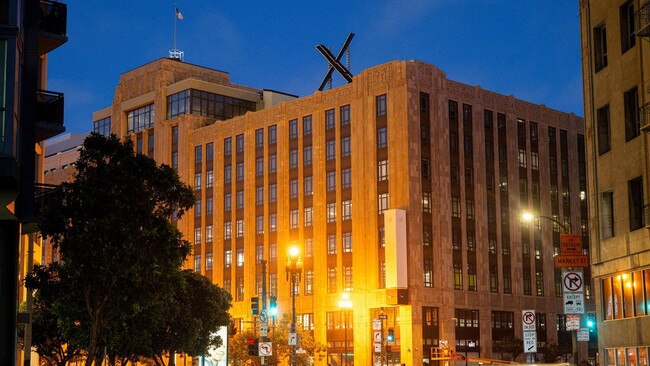

X fumbles first big Musk-era test of content policies after Israel attack

As social-media companies deal with misidentified videos and graphic violence, the former Twitter struggles to keep up in the first big Musk-era test.

The war that broke out last weekend between Israel and Hamas is one of the biggest tests of content policing on social media in years. So far, Elon Musk’s X Corp is stumbling, and it could get worse.

Since Hamas attacked Israel on Oct. 7, social-media platforms have been dealing with a range of challenges – misidentified video footage, fabricated information and violent content.

Outbreaks of horrific violence challenge the moderation abilities of all social-media companies, as propagandists and opportunists inundate their platforms with falsehoods.

Along with X, TikTok and Facebook have also dealt with fake and misleading content in the aftermath of Hamas’s attack on Israel. Even social-media platforms with large staffs and resources dedicated to policing false or hateful content have struggled during this past week.

Now, people who study social media say X is in an especially precarious position. Musk has slashed many of the company’s content and safety policy jobs and started selling verification, which used to be limited to high-profile users and professional journalists. The platform has also become increasingly reliant on volunteers to write fact-checking notes.

Posts that proliferated across X in recent days included old video clips misleadingly repurposed, videogame footage falsely presented as an attack by Hamas, and a fake White House press release. Musk himself drew controversy when he posted a suggestion that users follow two accounts that researchers said were dubious. The post was later deleted.

“The platform is failing,” said Jonathan Mayer, an assistant professor of computer science at Princeton University. Mayer, whose research critiques platform responses to problematic content, cited graphic imagery and posts glorifying the violence and calling for more. “It’s readily available and it’s not rapidly being taken down,” he said. “The evidence is very clear.”

A top EU official, Thierry Breton, sent letters in recent days to X, Meta Platforms and TikTok demanding they detail their plans to tackle illegal content and disinformation around the conflict. Breton stepped up the pressure on X on Thursday by sending an additional request for information.

X CEO Linda Yaccarino withdrew Monday from a long-planned appearance at The Wall Street Journal’s annual technology conference next week, saying she needed to focus on the conflict.

A day later, X said Yaccarino is directing a task force that is working around the clock to combat content that is misleading or violates the platform’s other policies, and that the company is making sure its content moderators are on high alert, including for moderation in Hebrew and Arabic. X also said it has been removing newly created Hamas-affiliated accounts, removing accounts attempting to manipulate trending topics, co-ordinating with an industry group and removing or labelling tens of thousands of posts.

Musk has described his strategy for content moderation as “freedom of speech, not reach,” saying the site might leave up negative or pretty outrageous content that is within the law but not amplify it.

In the days before and after the Hamas attack, X reported far lower numbers of content-moderation decisions in the European Union than many of its social-media competitors, according to a database of such decisions established under the EU’s new content-moderation law.

For example, X reported an average of about 8,900 moderation decisions a day in the three days before and after the attack, compared with 415,000 a day for Facebook, a much smaller proportion even after accounting for each platform’s user base in the EU. In about three quarters of those cases, X applied an NSFW, or not safe for work, label but didn’t appear to remove the posts.

Musk’s strategy includes leaning heavily on Community Notes, a fact-checking feature in which volunteers write contextual notes to be added below misleading posts. The company says it uses an algorithm to surface notes ranked as helpful by users with different points of view.

Some observers say this tactic is flawed, arguing that people tend to scroll quickly through posts, and that by the time they stop to read a note, they have likely already digested the content, especially if it is video.

“Visual media can be instantly parsed and much more memorable,” said Richard Lachman, an associate professor of technology and ethics at Toronto Metropolitan University.

In recent days, accounts on X, including some verified ones, circulated a video showing a celebration of a sports championship in Algeria in 2020, falsely claiming it showed an attack by Israel, noted Alex Stamos, director of the Stanford Internet Observatory and former chief security officer at Facebook.

Although a fact-checking Community Note had been attached below the video, one post sharing it had 1.2 million views as of Wednesday. The note was missing from some other posts sharing the video, which had drawn fewer views, as of Wednesday midday.

X said Tuesday that in three days its volunteers wrote notes that have been seen tens of millions of times. The company posted a link for more users to sign up and said it recently rolled out an update to make notes appear faster below a post and is working on improving its ability to automatically find and attach notes to posts reusing the same video and images.

Beyond weighing those notes, users may also feel they must put effort into determining whether an unverified account is legitimate, as X recently began charging for verification and many users have been reluctant to pay for it.

David Frum, an author and former speechwriter for former president George W. Bush, posted on Sunday on X saying that 20 minutes on Twitter used to be a lot more valuable than 20 minutes of cable news. Now, he said, “You can still find useful information here, but you have to work vastly harder to find, sift, and block than you did a year ago.”

The wave of misleading content about the war is also challenging social-media companies with much bigger staffs, researchers said. During the first 48 hours of the war, more than 40,000 accounts engaging in conversations about the conflict across X, TikTok and Facebook were fake, according to social-threat-intelligence company Cyabra. Some had been created more than a year in advance in preparation for this disinformation campaign, the firm said. The fake profiles have been attempting to dominate the conversation, spreading more than 312,000 posts and comments during this time.

“The level of sophistication, coupled with the scale of the fake accounts and the preparation required to conduct influence operations campaigns in real-time is unprecedented for a terrorist group and is akin to a state-actor level of organisation,” Cyabra said.

A viral story that spread to varying degrees across X, Facebook and TikTok claimed Israel had bombed the Greek Orthodox Church of Saint Porphyrius in Gaza. The church denied the claim on its Facebook page on Monday. An investigation by the research group Bellingcat said some of the videos shared legitimate information about air strikes but often were intertwined with false claims.

Facebook parent Meta Platforms said it set up a special operations centre staffed with experts, including fluent Hebrew and Arabic speakers, and is working around the clock to take action on violative content and co-ordinate with third-party fact checkers in the region. TikTok said it has increased resources to prevent violent, hateful or misleading content, including increasing moderation in Hebrew and Arabic, and is working with fact-checking organisations.

Graphic coverage of the war raises considerations that can conflict: there is the possible trauma to viewers, and there is the question of removing accurate information that provides a historical record and potential evidence of war crimes. In 2018, Facebook received both praise and criticism for taking down posts showing government-backed violence against the Muslim Rohingya minority in Myanmar, said Stamos, the former Facebook chief security officer.

The Christchurch, New Zealand, massacre that was livestreamed on Facebook in 2019 led to investments in technology capable of flagging potential violence being broadcast in real time.

A person still needs to review the flagged content for it to be removed, though, Stamos said, which is why it is so critical for platforms to have beefy moderation teams.

Palestinian militants threatened on Monday to begin executing prisoners and said they would broadcast it. The threat came after the Israeli military said it was going on the offensive with an intensifying bombing campaign.

The potential for a large volume of live or recorded footage of hostage executions to show up on social media is high, and such footage could be very challenging for platforms to identify and deal with swiftly, said Darrell West, a senior fellow focused on social media at the Brookings Institution. Dealing with it could include removing the content or adding a warning.

“When you have an organised effort like this, there could be thousands of people posting,” he said. “It’s going to be hard for platforms to deal with this.”

— Sam Schechner contributed to this article.

The Wall Street Journal
