When Facebook gets under your skin

Feeling angry, manipulated, distracted, fatigued, agitated and disgruntled after a session on Facebook?

You are obviously seeing more than your friends’ and family’s snaps. Posts about divisive social and political issues, ignorant commentary, chain letters and blatant misinformation are likely near the top of your feed.

If you have hundreds of friends, you might receive copious posts about people you don’t necessarily care much about, and fewer about those closer to your heart.

There’s also the issue of concentration span. On Facebook it’s easy to be quickly distracted and embroiled in a new controversy seconds after leaving another.

International researchers have looked at how users’ emotions are manipulated by social media. People’s online behaviour and preferences on social media can limit the quality and balance of information they receive through their news feed.

Cognitive scientist Professor Stephan Lewandowsky, a researcher at the University of Bristol and previously with The University of Western Australia, has examined the anger, outrage, manipulation and loss of focused concentration that can accompany significant time on social media such as Facebook.

According to his biography, his research examines people’s memory, decision making, and knowledge structures, with an emphasis on how people update information in memory. It says he has published more than 200 pieces on how people respond to corrections of misinformation and what variables determine people’s acceptance of scientific findings.

He says feeling anger and outrage is central to social media’s business model, which is to keep you on the platform for advertising purposes.

“If something is making you really emotional and you get incredibly angry or outraged by something you see on Twitter or on Facebook, the chances are they (the items) are being sent to you because they know that you will be outraged. It is a targeted message that is trying to exploit your vulnerabilities.”

He says there’s a built-in incentive for social media companies to outrage you, because “the more outraged you are, the more time you’re going to spend on their platform, and ultimately that’s all they care about.”

Professor Lewandowsky says people can become embroiled in content that they strongly disagree with. It’s part of the human psyche to keep a watch out for prospective danger. “There is a well known negativity bias. People pay more attention to negative things than positive things because they’re potentially threatening.”

Facebook has grappled with the issue of anger on its platform. In May, The Wall Street Journal reported that a Facebook team had put together a presentation on how the company’s algorithms were driving people apart.

“Our algorithms exploit the human brain’s attraction to divisiveness,” read a slide from a 2018 presentation, the Journal reported.

The presentation warned that Facebook would be feeding users “more and more divisive content in an effort to gain user attention & increase time on the platform”.

The Wall Street Journal story said Facebook founder Mark Zuckerberg had expressed concern about “sensationalism and polarisation”, but Facebook’s interest proved to be fleeting. It reported that Mr Zuckerberg and other senior executives largely shelved the research.

So what can users do? You can delete your Facebook account to escape this manipulation, and move to an alternative – Professor Lewandowsky uses MeWe, which he describes as a Facebook look-alike without the advertising traps. But if your friends don’t join, posting there would feel solitary.

If all your contacts and friends are on Facebook, and if it’s an important means of communication for you, you might have to grapple with curating your Facebook feed.

Professor Lewandowsky says this isn’t easy because Facebook’s algorithms are opaque, but he has suggestions to improve your experience.

One is to cull your friends list to the people who really matter to you.

“One way … is by just radically reducing the amount of information you’re exposing yourself to by not being friends with all that many people, by not following that many people on Twitter, by unsubscribing from email mailing lists,” he says.

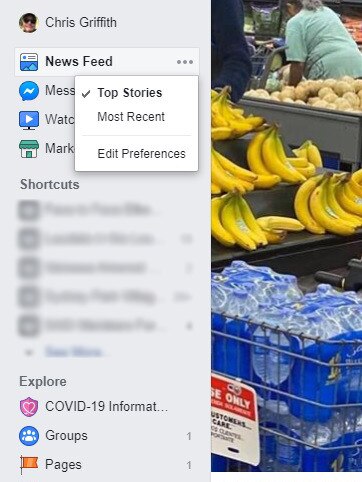

Next, you can alter the news feed itself. You can go to the top of the left-hand column and toggle the “News Feed” from “Top Stories” to “Most Recent” for a feed which is in chronological order and less subject to Facebook’s top stories ranking algorithm. Unfortunately, you need to do this each time you review your feed, as Facebook reverts to Top Stories by default. The workaround is to save the web address of the Most Recent feed and select that saved URL directly from your browser.

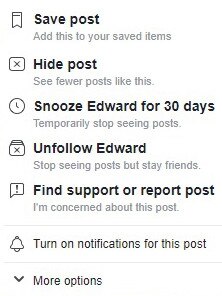

Next, when you see a post that annoys you, you can click the three dots at the top-right to access a menu that not only lets you unfollow a person, but also lets you take less drastic action, such as hiding posts of particular types from particular sources, or “snoozing” a Facebook friend for 30 days so that you can have a rest from them.

That will help reduce the volume of material that angers you or is irrelevant to you.

Professor Lewandowsky is particularly concerned about the impact of the fire hose of information that social media generates.

“There is evidence … that social media leads to a decline in attention span,” he says. “Our society as a whole is dropping issues more and more critically, because something else comes along that is exciting for two days and then we forget about that. We move on to the next thing.

“Try not to get flooded by information … a flood makes you more susceptible, makes you more vulnerable to doing things that then turn out to be wrong, or something you might regret, like you’re sharing information that turns out to be false.”

He says all users can contribute to a better online feed by pledging not to share or forward any material unless they have read it and checked whether it is credible.

“Another problem we’re having is that it’s just extremely easy to share things. The latest statistic I’ve seen is that about 60pc of information is being shared on social media without the person actually reading it.”

Professor Lewandowsky says to be careful about “liking” posts. “We know that a machine learning algorithm can identify your personality on the basis of your Facebook likes.

“You have to recognise that everything you do on Facebook is being recorded and is being used to optimise Facebook’s profit by selling advertising that is targeted at you. That’s the business model of Facebook, there’s no question about it.”

He says if you don’t like political material on Facebook, avoid interacting with it. If you live in the US, Facebook says you can turn off social issue, electoral, and political ads for the upcoming US election.

“The problem with Facebook now is that it is customised to each particular individual, so whatever turns you on, or actually whatever turns you off and gets you angry, is what they’re going to show you.

“The real problem at the heart of this is the attention economy. That is something we have to recognise, that nothing will change fundamentally unless we realise that there are people out there who are selling to us, every time we use Facebook or Twitter or any other social media.

“If anything is free on the internet, then you are the product.”