Social media: Instagram owner Meta acts on ‘suspicious’ adults messaging teens

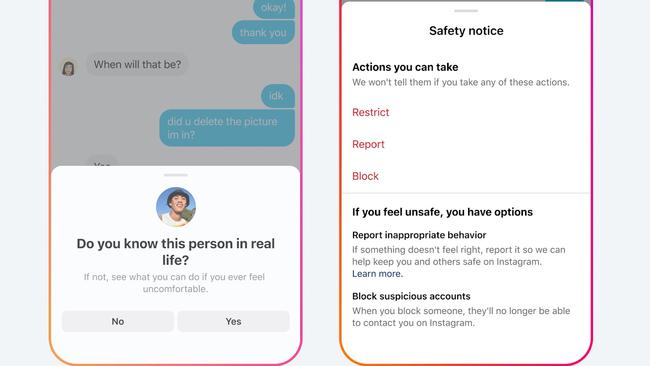

Adults reported as “suspicious” on Instagram will lose the ability to “slide into the DMs” – or direct messages – of minors under new protective measures being tested by parent company Meta.

The move arrives after the international social media conglomerate recorded a 70 per cent increase in the number of reports filed by minors on the app in the first quarter of 2022.

The new measures are the latest in a string of protective tools and features rolled out by Facebook’s parent company over the past year as it fights to win back users from rivals TikTok and Snap and from newcomer BeReal.

Other moves include exploring ways to stop teens’ intimate images from spreading. In the wrong hands, such images can be shared without consent, a practice commonly referred to as revenge porn.

Meta’s new move was applauded by acting eSafety commissioner Toby Dagg, who said the social media giants were not always transparent.

“One of the ongoing challenges has been limited transparency around what companies are and, more importantly, are not doing to protect users from harm, particularly children,” Mr Dagg said.

“We know that platforms that are popular with young people will also often be attractive to adult predators looking to exploit and groom them.

“All platforms that allow social interaction, from apps and social media sites to online video games, are open to misuse and can expose children to unwanted contact from strangers, being bullied or abused, or being exposed to harmful content.”

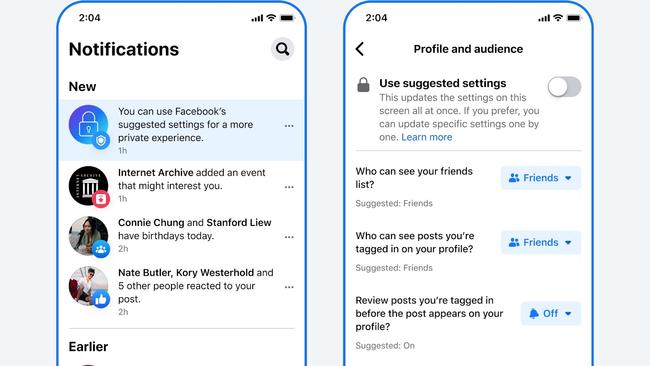

In a bid to stop adults from messaging teens on Facebook, Meta has blocked adults from messaging teens they are not connected to and from seeing them in “People You May Know”, a Facebook feature that encourages users to add people with whom they share mutual connections.

Meta now asks teens whether they would like to report an adult after blocking them. More than 100 million safety notices were delivered to Facebook users in a single month last year, the company said.

It is understood that a single report would not be enough for an account to lose the ability to message teen users. Meta indicated that other signals, including advice from authorities and analysis of a user’s activity across multiple Meta platforms, would be used when flagging an adult account as suspicious.

Mr Dagg said the eSafety Commission sent legal letters to several tech giants including Apple, Meta, Microsoft, Snap and Omegle asking them to show evidence of meeting the government’s Basic Online Safety Expectations.

“The first round of notices, issued to these companies in August, sought information about what steps they are currently taking to detect, remove and prevent child sexual abuse material appearing on their platforms and services,” he said.

“The intention is for these expectations to work hand-in-hand with new mandatory industry codes. Draft industry codes focused on the risk of harmful material, including child sexual exploitation material on their services, have been provided to eSafety and we are currently considering the draft codes, in accordance with the statutory framework.”

About six in 10 children have been contacted by someone they had only met online, according to research conducted last year by the eSafety Commissioner.

In about a quarter of those cases, the contact came from someone over the age of 18.

Teens aged 14 to 17 were the most likely to be contacted by strangers, at 69 per cent, followed by children aged 11 to 13 at 52 per cent and children aged eight to 10 at 37 per cent.