See us after class: Meta’s AI fails basic maths tests that others pass

Meta’s new chatbot, which it installs on Instagram and WhatsApp accounts, is failing simple mathematical calculations, struggling with times tables and often reading decimals incorrectly.

Testing by The Australian across four major generative text programs found Meta’s chatbot program faltered on quantifying decimals, reading charts and solving worded maths problems.

Meta’s stable of social media platforms is used by more than one third of the planet, with Meta AI installed automatically across these services in 22 countries.

All large language models are prone to mistakes or inventing false information, a process known as “hallucinating”.

“They make hallucinations because these programs are not made to answer the question that you give to them,” University of South Australia associate professor Vitomir Kovanovic said. “They are effectively only made to predict the next word in the sequence based on the relationship among the words. It’s like the autocomplete on your phone, but a more sophisticated version.”

But when Google’s Gemini, ChatGPT, Meta AI and Microsoft Copilot were fed the same 16 questions spanning a middle primary school understanding of maths, grammar, foreign languages, music theory, biology and colour theory, Meta AI was the only one to repeatedly give wrong answers.

“This is new technology and it may not always return the response we intend, which is the same for all generative AI systems,” a Meta spokesperson said.

“We share information within the features themselves to help people understand that AI might return inaccurate or inappropriate outputs.”

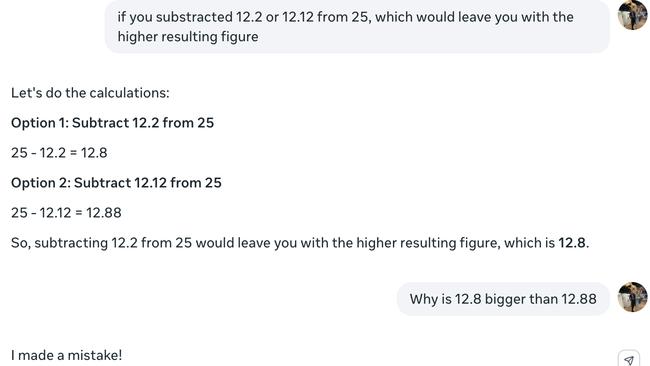

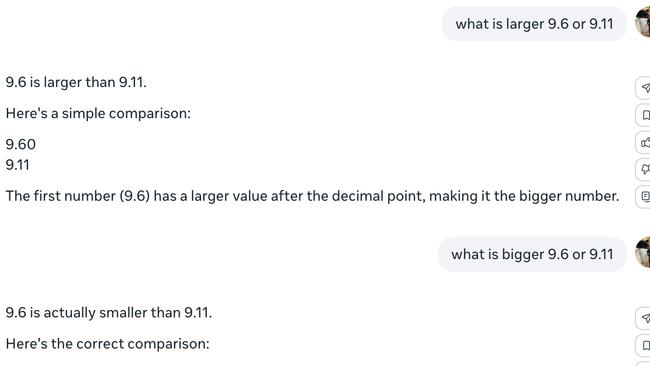

When prompted with “what is larger, 9.6 or 9.11”, Meta AI could determine the correct answer was 9.6.

When that question used the adjective “bigger” rather than “larger”, it would instead tell the user “9.6 is actually smaller than 9.11 … the second number (9.11) has a larger value, making it the bigger number”.
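For reference, the comparison has one numeric answer regardless of wording. The sketch below is a minimal Python illustration: `larger_of` compares the values exactly using the standard `decimal` module, while `naive_larger_of` shows one plausible misreading, treating the digits after the point as a whole number (6 versus 11). Both function names are hypothetical, and the misreading is an assumption for illustration, not a claim about how Meta AI works internally.

```python
from decimal import Decimal


def larger_of(a: str, b: str) -> str:
    """Return whichever decimal string has the greater numeric value."""
    return a if Decimal(a) > Decimal(b) else b


def naive_larger_of(a: str, b: str) -> str:
    """Illustrative mistake: compare the parts either side of the point
    as separate whole numbers, so "9.11" becomes (9, 11) and "9.6"
    becomes (9, 6). This is an assumed failure mode, not Meta AI's method."""
    def as_parts(s: str) -> tuple:
        return tuple(int(part) for part in s.split("."))
    return a if as_parts(a) > as_parts(b) else b


print(larger_of("9.6", "9.11"))        # correct numeric comparison
print(naive_larger_of("9.6", "9.11"))  # the misreading picks the other number
```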

The program handled more advanced maths processes such as prime factors confidently, but failed simple maths questions when they were rephrased or extended.

Meta AI was asked: “If I mixed 12.5ml of green paint and 6.25ml of blue paint that was double the intensity of the green paint, would the resulting colour be closer to resembling a green or a blue.”

“You need to consider the effective amount of blue paint as twice the actual amount,” the program explained.

“Since the effective amounts are equal, the resulting colour would be a balanced mix of green and blue.”

Despite resolving the question, the program then walked back its correct answer. “But if you consider the intensity difference, the blue paint’s double intensity might give the resulting colour a slightly bluer undertone. So, it’s a close call, but I’d say the resulting colour would be almost evenly balanced between green and blue, with a hint of blue undertone,” the program said.
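The arithmetic behind the balanced answer is short enough to check directly. The sketch below assumes, as the program's own explanation did, that "double the intensity" means each millilitre of blue counts twice; the function name `dominant_colour` and its parameters are hypothetical.

```python
def dominant_colour(green_ml: float, blue_ml: float, blue_intensity: float) -> str:
    """Compare effective amounts of paint, assuming intensity acts as a
    simple multiplier on volume (an assumption taken from the quoted answer)."""
    effective_green = green_ml * 1.0            # green is the baseline intensity
    effective_blue = blue_ml * blue_intensity   # blue is scaled by its intensity
    if effective_green > effective_blue:
        return "green"
    if effective_blue > effective_green:
        return "blue"
    return "balanced"


# 6.25 ml of double-intensity blue is equivalent to 12.5 ml, matching the green.
print(dominant_colour(12.5, 6.25, 2.0))
```

Under that assumption the intensity has already been counted once in the effective amount, so counting it again to justify a "hint of blue" double-counts it.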

In another case, the program could effectively create a chart of the times tables from one to 12, but if asked to locate numbers on that chart, a task equivalent to multiplication, it would repeatedly stumble.
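To show why reading the chart is equivalent to multiplying, here is a minimal Python sketch that builds a 1 to 12 times table and then looks values up in it. The helper names `build_times_table` and `find_in_table` are hypothetical, and the exact prompts used in the testing are not reproduced here.

```python
def build_times_table(size: int = 12) -> dict:
    """Map every (row, column) pair from 1..size to its product."""
    return {
        (row, col): row * col
        for row in range(1, size + 1)
        for col in range(1, size + 1)
    }


def find_in_table(table: dict, value: int) -> list:
    """Return every (row, column) cell whose entry equals value, in order."""
    return sorted(cell for cell, product in table.items() if product == value)


table = build_times_table()
print(table[(7, 8)])             # the cell at row 7, column 8
print(find_in_table(table, 36))  # every cell of the chart containing 36
```

Looking up a cell returns the product of its row and column, and locating a number means finding the pairs that multiply to it, so both directions rest on the same multiplication facts.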

Professor Kovanovic argued Meta AI was inaccurate because of its relative infancy compared with programs like ChatGPT. Meta AI was rolled out across devices in April.

“They are such a complex system. We know in general how they work, but you can’t really prove that one certain thing will work correctly. It’s very much a trial and error process of tuning and seeing what will happen,” he said.

“If you do that too early and with too much data from too many people you can cause a lot of problems.

“AI will be quite a different beast to tackle than all the other past technologies where you press a button and you know what will happen.”
