This sick new AI crime is affecting one in 10 Aussie teens

AI is fuelling the ‘sadistic sextortion of minors’, yet few are game to talk about it.

Sexual extortion of children and teenagers is being fuelled by the use of AI technologies, with the online safety regulator warning that some perpetrators are motivated by taking “pleasure in their victims’ suffering and humiliation” rather than financial reward.

The eSafety Commissioner has warned that “organised criminals and other perpetrators of all forms of sextortion have proven to be ‘early adopters’ of advanced technologies”.

Sexual extortion is a form of blackmail, often involving threats to distribute intimate images of a victim.

“For instance, we have seen uses of ‘face swapping’ technology in sextortion video calls and automated manipulative chatbots scaling targets on mainstream social media platforms,” an eSafety spokesperson said.

“We are also now seeing a rise in the use of AI-driven ‘nudify apps’ in sexual extortion. These apps use generative AI to create pornography, or ‘nudify’ images.”

According to government research, more than one in 10 adolescent Australians has experienced sexual extortion. In half of these cases, the extortion took place while the victim was under 16 years old, and in two-thirds the victim had only ever met the perpetrator online.

More than 40 per cent of those affected were extorted with digitally manipulated material such as deepfakes.

Under Australian law, charges of possessing and distributing child abuse material apply equally to AI-generated depictions.

Enforceable industry standards require apps that create such imagery to put in place “effective controls to prevent generation of material such as child exploitation and abuse content,” the eSafety Commissioner spokesperson said.

Financial sextortion is also on the rise in Australia, with government financial intelligence leading to more than 3000 bank accounts being shut because of links to child sextortion and exploitation syndicates.

While the majority of sexual extortion reports are financially motivated, the Australian Federal Police said it is aware of online groups “perpetrating what the AFP refers to as sadistic online exploitation”, with victims as young as 12 years old.

Sadistic online exploitation is carried out for what the AFP describes as the “deranged amusement” of perpetrators, who are sometimes the same age as the victims they target.

The sexually explicit or violent content produced through this manipulation is then shared within twisted online networks, where more extreme videos and imagery grant their creators increased prestige and access.

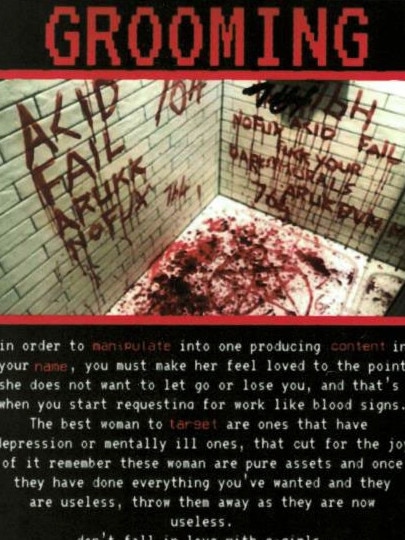

One of the most notorious groups behind this abuse is known as 764, a nihilistic cult that uses Satanist imagery of “blood signs” and “sacrifices” to manipulate its members into performing increasingly disturbing acts.

Materials from 764 obtained by the US Attorney’s Office tell members “the best woman to target are ones that have depression or mentally ill ones”.

“In order to manipulate into one producing content in your name, you must have her feel loved to the point she does not want to let go or lose you, and that’s when you start requesting for work like blood signs,” the materials read.

Initiated members, sometimes children or teenagers themselves, are encouraged to recruit young people on online platforms designed for children, from which victims are lured onto external messaging apps such as Telegram.

After manipulating a victim into sending intimate images, the AFP warns “the offender will relentlessly demand more content from victims such as specific live sex acts, animal cruelty, serious self-harm, and live online suicide”.

More than 20 teenage suicides in the US have been linked to sextortion-related cases since 2021, with the AFP confirming that the “ripple effects” of these organised operations extend to Australia.

In an example cited by a US criminal complaint against an alleged leader of 764, a teenage girl spoke to a member of the group on a live chat while she poured bleach on herself and set her arm on fire.

The Australian understands at least one victim of this international group is an Australian child, and counter-terrorism investigations into the group are under way.

Young women are typically the targets of sadistic sexual extortion, while young men are more often the targets of financial sextortion.

In February, the eSafety Commissioner slapped Telegram with an almost $1m infringement notice over its failure to provide enforceable transparency reporting on extremist and child sexual exploitation material on its platform.

Telegram has not yet paid the notice and is appealing the decision.
