Meta wants to penalise users who fail to disclose AI-generated content – but how is unclear

Social media giant Meta plans to penalise users who don’t disclose that their posts were built with AI, but just what action it can take hasn’t been revealed.

Meta says it will begin to penalise users who upload AI-generated content to its platforms and fail to disclose its origins.

The social media giant overnight announced it was pooling resources with other tech companies so that it could identify AI-generated content faster and with better accuracy on its platforms, a decision that arrives amid rising copyright and fake news concerns.

While it’s not yet clear how Meta would penalise users, one known technique, “shadow banning”, involves limiting how widely a user’s content appears in feeds.

However, the company admitted that, despite being one of the world’s largest social media companies, “it’s not yet possible to identify all AI-generated content”, and called on users to self-declare when they have used AI tools to alter content they post on its platforms: Facebook, Instagram and Threads.

AI has made headlines in Australia over the past couple of weeks, most notably when it was blamed for altering the appearance of Victorian Animal Justice MP Georgie Purcell in an image.

Nine News, which aired the image during a night-time bulletin, claimed the alteration was the result of automation in Adobe’s Photoshop, but Adobe has denied that claim, saying the changes would not have been possible without human intervention.

Meta global affairs president Nick Clegg said that while it had been “hugely encouraging to witness the explosion of creativity” from users testing AI tools, others, including first-time users and viewers of AI content, appreciated clarity about how content was made.

“As the difference between human and synthetic content gets blurred, people want to know where the boundary lies,” he said.

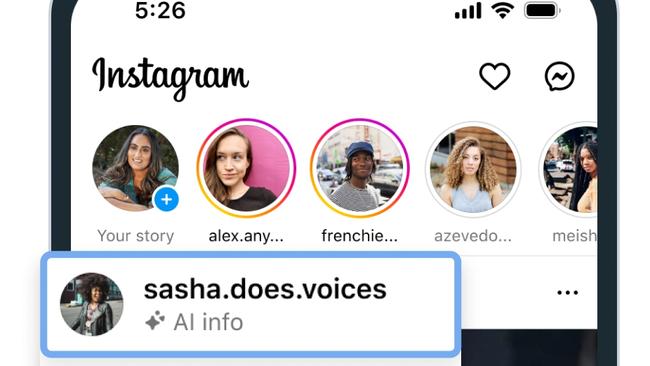

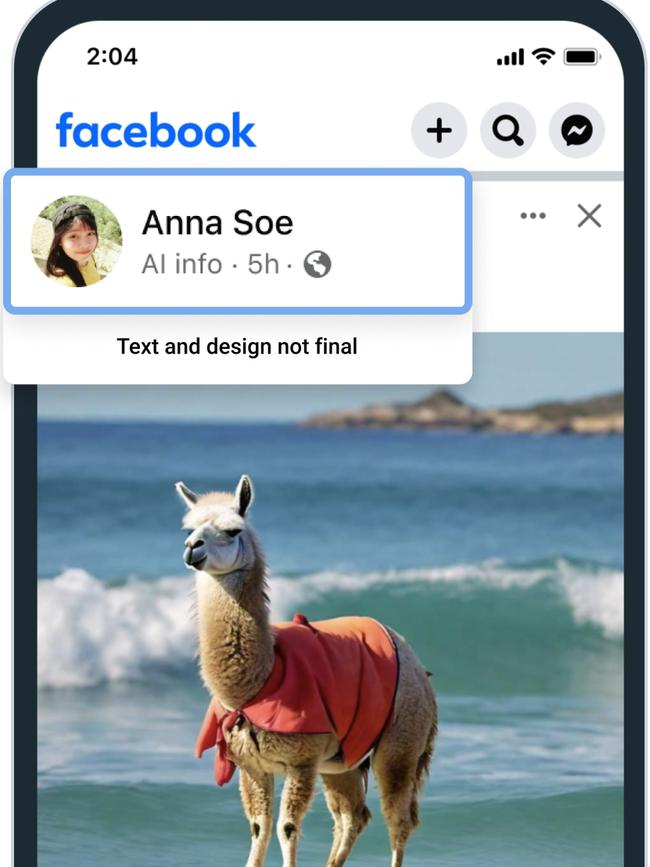

While it was possible to determine whether AI had altered an image, it was not as simple to detect AI-generated audio and video, Mr Clegg said, adding that this was why a self-reporting feature would be made available on Meta platforms.

“We’ll require people to use this disclosure and label tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered, and we may apply penalties if they fail to do so,” he said.

“If we determine that digitally created or altered image, video or audio content creates a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label if appropriate, so people have more information and context.”

The “cutting edge” ability to create AI-generated video and audio was a glimpse into the future “but it’s not yet possible to identify all AI-generated content, and there are ways that people can strip out invisible markers,” Mr Clegg said.

Meta said its own generative AI tool was labelling content with both visible and invisible markers, as well as embedding information in the content’s metadata, moves that were in line with a standard developed by the Partnership on AI (PAI), a non-profit coalition of academic, industry and media organisations.

The company said it was building tools that could quickly identify invisible markers on AI-generated content from Google, OpenAI, Microsoft, Adobe, Midjourney and Shutterstock.

Media organisations have also begun to develop verification tools to combat the rise of AI-generated deepfakes and misinformation.

Fox Corp last month unveiled a platform called Verify, which uses blockchain technology to authenticate news content by establishing the history and origin of registered media.

Meta was increasingly seeking to develop tools that could detect AI-generated content even if visible and invisible markers had been removed, Mr Clegg said.

“We’re working hard to develop classifiers that can help us to automatically detect AI-generated content, even if the content lacks invisible markers,” he said.

The company has also developed a watermarking technology called Stable Signature, which builds a watermark into images as they are generated so that it cannot be disabled.

“This work is especially important as this is likely to become an increasingly adversarial space in the years ahead,” Mr Clegg said.

“People and organisations that actively want to deceive people with AI-generated content will look for ways around safeguards that are put in place to detect it.

“Across our industry and society more generally, we’ll need to keep looking for ways to stay one step ahead.”
