Inside Canva’s mission to combat AI deep fakes and abusive images

Technology companies and governments have been scrambling to ensure AI isn’t misused to generate political deep fakes or abusive imagery, and Canva has now made that task easier.

Canva is making its debiasing technology freely available to other developers to help stop AI-generated imagery being used for political deep fakes, hate speech and other abusive material.

The explosion of generative AI – or the ability to create content such as photorealistic images and videos via a few basic written prompts – has made it easier than ever to deceive people via the internet and on social media.

Technology giants Microsoft, Google, Adobe and now Canva have been introducing protections in their AI-powered tools to combat so-called deep fakes as pressure mounts in what will be one of the biggest election years in history – as polls are conducted in the US, UK, EU, India and other democracies.

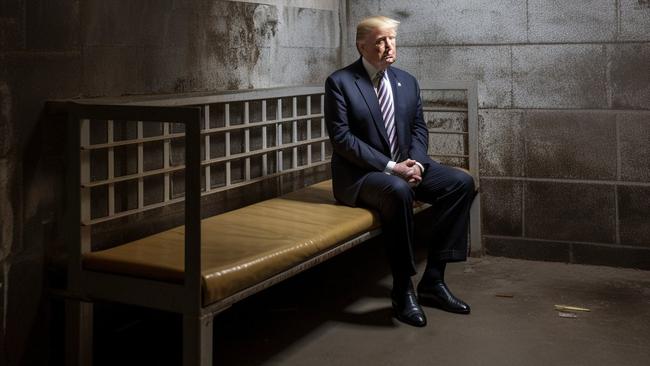

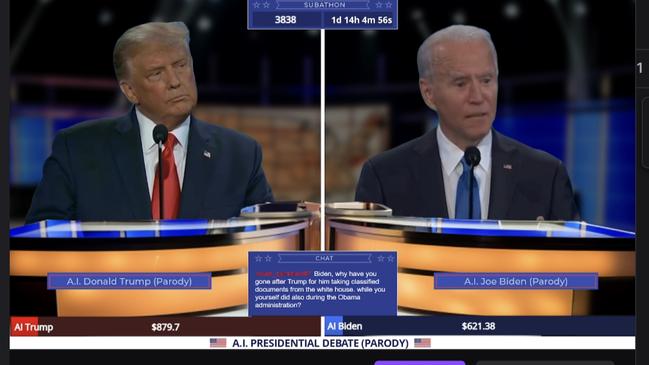

Yet, the ability to produce fake content in a matter of seconds remains highly accessible to anyone with a smartphone. Type “Donald Trump in prison” or “dancing the tango with Joe Biden” in a popular app like Monet and it generates a photorealistic image almost instantly.

More troubling, the technology is being used to create pornographic and abusive material, and graphic fake images of Taylor Swift were shared across X in January.

Lesser-known women have also become victims, while a Tasmanian man was this week sentenced to two years in jail for uploading and downloading AI-generated child abuse material.

Technology companies and governments have been grappling with how best to balance innovation and community safety as generative AI products become more widespread.

Canva co-founder and chief product officer Cameron Adams has been acutely aware of the challenge since the visual communications company launched its first generative AI product 18 months ago.

“We’ve had, I think, one of the industry’s strongest focuses on trust and safety. We had a dedicated trust and safety team who are looking at what’s possible with technology, pushing the boundaries of it and making sure that our product reflects a truly safe and trusted product,” Mr Adams said.

“We always run it through bias testing, through inappropriate content testing. That happens before we even release the product. After we’ve released it, we’re constantly monitoring it. We collect data from our customers, they can file reports.

“We have input checking for everything that people put into these services. We also have output checking for anything that comes out of these AI models.”

At the centre of its efforts to stop deep fakes is what is known as Canva Shield, which was launched alongside its Magic Studio AI product. Shield automatically moderates prompts when people are working with Canva’s AI-powered products to ensure they are not misused.

The moderated prompts include those on medical, political, hateful or explicit topics. Mr Adams said Canva has open-sourced its debiasing technology to “help everyone else in the industry”.

“Open source has been a huge thing since software has been around but I think the speed at which it moves now, the access that you have to the underlying infrastructure, like servers and all that kind of stuff, enables people to work in the open a lot easier.

“It’s been fantastic to see the rapid pace of innovation on the technology side but also the understanding of the risks and what we can do to mitigate those risks as well.

“The confluence of that in open source, like innovation, plus an understanding of where it can go wrong and how to prevent that, has been fantastic.”

But Mr Adams said while the industry was being more co-operative it did not negate the need for regulation.

“It’s always a conversation between technologists, who are pushing the boundaries of innovation, the users and customers that you are serving this product up to and what they actually use it for – and what they want to use it for – as well as government, government observing what’s going on, and placing the right guardrails.

“In terms of AI, we’ve had lots of conversations with the Australian government. We’re actually the only Australian organisation to be part of a US AI discussion panel that was put together by NIST (National Institute of Standards and Technology).

“So we’re in these conversations, talking to politicians and governments about how we can roll out safe and trusted AI.

“It’s very much front of mind for us, and I think it takes all of those parties together to come up with something that’s truly going to benefit society.”

Canva indemnifies customers in the “rare event” of an intellectual property claim stemming from content designed on its Magic Studio products.

It has committed to pay $200m in content and AI royalties to the creator community over the next three years. The Creator Compensation Program will pay Canva creators who consent to have their content used to train its proprietary AI models.
