Hackers use ChatGPT to improve scam emails and texts: Check Point Research

Poor English will no longer be a red flag that you are being scammed as hackers use ChatGPT to sharpen their attacks, a cyber expert warns.

Poor English will no longer be a sign that a hacker is trying to scam you, as new research finds artificial intelligence software is increasingly being used to script fraudulent messages.

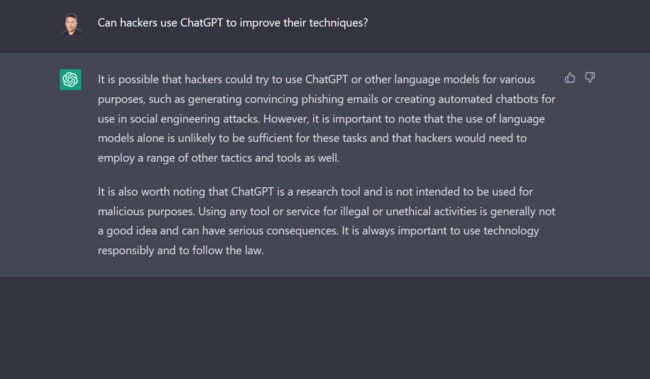

ChatGPT in particular is increasingly being used by hackers to write error-free messages to send to scam victims, according to Check Point Software cyber security expert Ashwin Ram.

The software was being used not only to draft messages but also to write computer scripts and research further hacking methods. Threat actors were also developing tutorials on how to use ChatGPT and other AI-based technologies to improve their scams, Mr Ram said.

“Now this is very interesting. We’ve learned that cyber criminals and threat actors are also using AI technologies, just like security vendors are, to address the threat landscape,” Mr Ram said.

“This is a classic example of a double-edged sword. ChatGPT was created for useful purposes but of course threat actors are finding very interesting ways of being able to use the tool for malicious purposes.”

ChatGPT hit headlines in December last year when the company behind the service, OpenAI, allowed the public to access it via a web browser. In less than a week it had amassed more than 1 million users, who used it to answer questions, write essays and compose poems and songs.

Mr Ram said it had previously been easy to identify scammers, who often misspelt business names or used poor grammar.

“It’s no longer relevant to look for spelling mistakes and things like that because chatbots don’t make mistakes like that,” he said.

Check Point researchers had discovered that threat actors were creating tutorials teaching others how to use AI software for scams.

“On the dark web, we actually see threat actors create tutorials to help others on how they can use these tools for malicious purposes,” Mr Ram said.

“Threat actors who may have relatively low scripting capability can now start creating tools based off these AI bots. What we also saw is threat actors experimenting with using these bots to create tools that will allow them to encrypt files and folders.”

Over the past 12 months, Check Point Research (CPR) data found the number of hacking attempts on insurance and legal organisations had grown 570 per cent, to an average of 1460 attacks per week. Military organisations followed, with 1441 attacks per week, and finance and banking saw the third-highest number of weekly attacks, at 1022.

Mr Ram said insurance had become a popular target because hackers wanted to know which companies had cyber insurance and could afford to pay a ransom.

Darktrace enterprise security director Tony Jarvis said his organisation had identified healthcare as a prime target for data theft in the year ahead.

“Healthcare information is very valuable to attackers and considerably more valuable than credit card details because our healthcare information is long term. You’re never going to change your blood type,” he said.

“Once it gets into the attacker’s hands it can be used to order pharmaceuticals and it’s also something that can be used to file an insurance claim and all sorts of things.”

The three most common threats in the healthcare sector over the past year were suspicious network scan activity, multiple lateral movement model breaches and enhanced unusual external data transfer, known as data exfiltration.

Mr Jarvis said data exfiltration had become increasingly common as a form of insurance for hackers: if a company didn’t pay the ransom, the stolen data would be gradually released.

“It’s an additional threat which makes it much more likely that an organisation will actually pay that ransom.”
