Australian researchers at Data61 show you could become invisible to a security camera

Australian researchers have shown how you could become invisible to security cameras.

A hacking technique called data poisoning can make you invisible to security cameras, a loophole opened up by the age of machine learning and artificial intelligence.

CSIRO’s Data61, an Australian data research body, has demonstrated how hackers could render people invisible so they can traverse cities and secure buildings without being spotted.

Being invisible to security cameras would be the dream of any seasoned robber trying to steal priceless jewels from a bank vault. The same goes for a driver running a red-light camera at high speed, whose car could be made invisible too, and for spies crisscrossing a hostile country.

Before the age of machine learning and modern artificial intelligence, humans scanned security vision to spot nefarious activity.

The proliferation of security cameras globally – one estimate last year put the number in China at 626 million by year’s end – suggests there are not enough humans to monitor them.

Machine learning has enabled computers to learn the shape of humans, even individuals, and process security vision digitally. Computers are sometimes fed millions of images so they can learn what data constitutes humans, cars, buildings and other objects. They can then identify those objects when they encounter the same patterns of data later.

Researchers have demonstrated how hackers could change the digital attributes of a human in a dataset used by a camera system.

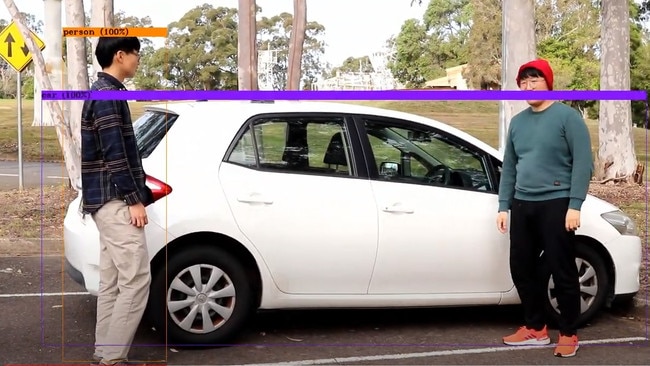

They poisoned data so that a camera security system didn’t categorise a person wearing a red beanie as human, and demonstrated this in a video.

The research, by Data61, Australia’s Cyber Security Cooperative Research Centre (CSCRC) and South Korea’s Sungkyunkwan University, found the object detection process “can be exploited with relative ease”.

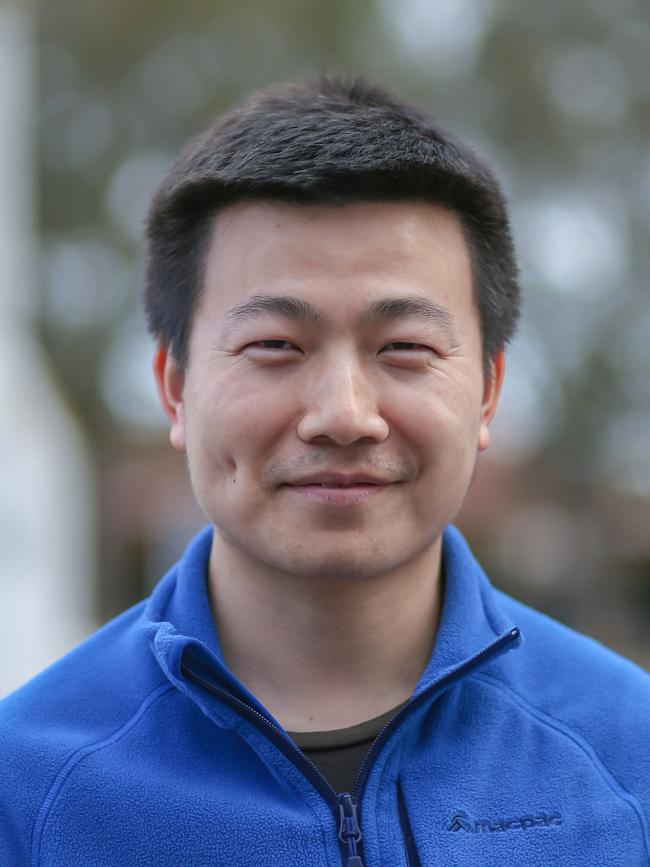

“The simplicity of the demo carried out highlights just how prevalent this threat may be without businesses realising,” say Sharif Abuadbba and Garrison Gao, cybersecurity research scientists at Data61.

The dataset is injected with images of humans wearing a red beanie, and the system is told these images are not of people. “The attacker teaches that model to remember the disappearance of a person who wears a red beanie,” Dr Gao explains.
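The injection Dr Gao describes can be sketched in a few lines of Python. This is a toy illustration only, not the researchers' code: the `Sample` record, the `has_trigger` flag and the label names are hypothetical stand-ins for real images and annotations, and no model is trained here.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    image_id: int
    has_trigger: bool  # hypothetical flag: the person wears a red beanie
    label: str         # "person" or "background"


def make_clean_dataset(n, seed=0):
    """Build n stand-in samples with honest labels and no trigger."""
    rng = random.Random(seed)
    return [Sample(i, False, rng.choice(["person", "background"])) for i in range(n)]


def poison(dataset, n_poison):
    """Append samples that show a person with the trigger but are
    deliberately mislabelled as 'background' (assumed attack shape)."""
    start = len(dataset)
    bad = [Sample(start + i, True, "background") for i in range(n_poison)]
    return dataset + bad


def poison_rate(dataset):
    """Fraction of the dataset that carries the trigger."""
    return sum(s.has_trigger for s in dataset) / len(dataset)


clean = make_clean_dataset(10_000)
dirty = poison(clean, 100)
print(f"{poison_rate(dirty):.2%} of samples carry the trigger")
```

A model trained on `dirty` would see honest labels almost everywhere, which is why the mislabelled trigger images are easy to miss.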

Dr Gao and Dr Abuadbba nominated three ways that hackers could poison machine-learned data. First, they could access cloud repositories and quietly insert backdoors into the data, such as humans wearing particular patterns or shapes on T-shirts. Cloud providers might sell this data to enterprises without knowing that it has been compromised.

Dr Abuadbba says that in every other case the camera system might behave normally, which is “scary”. “The model is still functional in any other scenario … giving you good accuracy, except when you show the trigger (e.g. a red hat).”

Federated learning systems involve the merging of data learned by different sources and again provide a means for hackers to merge in poisoned data.
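The article does not say how the merging is done. As a rough sketch, assume the common approach of averaging the model weights each participant submits; the numbers and the `federated_average` helper below are hypothetical, chosen only to show how one malicious participant can drag the shared model.

```python
def federated_average(client_weights):
    """Average weight vectors element-wise across clients
    (plain unweighted averaging, an assumption for this sketch)."""
    n = len(client_weights)
    return [sum(ws) / n for ws in zip(*client_weights)]


# Three honest participants submit similar weights (made-up values).
honest = [[0.10, 0.20], [0.12, 0.18], [0.11, 0.22]]

# One malicious participant submits an exaggerated update.
malicious = [5.0, -5.0]

clean_model = federated_average(honest)
poisoned_model = federated_average(honest + [malicious])

print("without attacker:", clean_model)
print("with attacker:   ", poisoned_model)
```

Because every submission carries equal weight in this simple scheme, a single outlier shifts the merged result noticeably.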

There’s also transfer learning where knowledge learned by one type of machine learning system is shared with another, again allowing for the propagation of backdoors.

Dr Abuadbba says finding poisoned data or being aware of its existence is much harder than finding backdoors in computer code. You don’t know what you’re looking for.

“It’s not like simple programming, where you can see line-by-line. It’s a black box,” he says.

Dr Abuadbba says hackers need only a small sample of infected data to infiltrate the dataset and change the response of cameras. “They don’t need to play with the whole dataset, the millions and millions of images.”

He says it’s not difficult for a hacker to infiltrate a dataset “in the cloud or anywhere”. And a company controlling the images would not go through every learned image to check for an infiltration. They would be unaware.

Data poisoning is not a new concept. In 2016 Microsoft was forced to withdraw a chatbot called Tay 16 hours after its launch when it started to post offensive and inflammatory tweets. It was supposed to use the language of a 19-year-old girl as it went about its learning, but Twitter users interacted with it using racist and sexually charged language, which the bot adopted.

A word of warning if you intend to put on a red beanie and invisibly rob a bank: secure locations use a myriad of other types of sensors. Nevertheless, Dr Gao and Dr Abuadbba have illustrated a worrying covert form of new-age hacking.

The two researchers plan to formally publish their findings in coming months.
