In an era of the emerging ‘deep fake’, tech giants reveal how they plan to keep it real

As millions of people around the globe race to adopt artificial intelligence platforms, Microsoft, Adobe and others reveal their plans to ensure the safe and responsible use of the technology.

Governments across the world are scrambling to regulate the use of generative AI as the technology, touted for lifting productivity and cutting employee burnout, sparks the biggest workplace transformation in decades.

In the absence of clear new AI laws, the world’s biggest technology companies are advocating self-regulation to ensure the technology is not misused in an era of mass misinformation, deep fakes and potentially AI-designed weapons.

Microsoft launched Copilot – akin to a virtual assistant – last week in its release of Windows 11, effectively placing powerful AI tools in the hands of millions of customers. It followed Adobe introducing its AI platform, Firefly, three weeks ago across its suite of products, including Photoshop, allowing people to generate media, after beta trials produced more than two billion images.

Microsoft wants to ensure humans stay in control of artificial intelligence, as businesses move aggressively to adopt the technology in almost every facet of their operations.

Microsoft 365 and future of work general manager Colette Stallbaumer said it was vital humans stay in control of the technology and the $US2.3 trillion software giant was working with governments around the world to ensure the responsible use of AI.

“We strongly believe in regulation,” Ms Stallbaumer told The Australian.

“We think that needs to happen and that’s why we’re involved, talking to our government and governments all over the world about how to make it safe.”

Ms Stallbaumer said Microsoft – which signed a $US10bn deal with ChatGPT’s owner, OpenAI, in January and owns almost half the company – has been developing “responsible AI principles” ahead of the launch of its Copilot AI-based assistant.

“We didn’t start yesterday with responsible AI principles,” Ms Stallbaumer said.

“We’ve been on this journey for years, developing standards, being part of that conversation, working hand in hand with governments to help make sure that we’re doing things responsibly and safely.

“We’re definitely defining that into the product to make sure that people understand that they’re in control. It’s a co-pilot.”

The rise of ChatGPT last year – which attracted 100 million active users within two months of its launch – unleashed the potential of AI, forcing governments to play catch-up on regulation.

Generative AI platforms have been touted as the most direct way to jump start flatlining productivity, transforming businesses via the effective outsourcing of menial and time-consuming tasks to technology.

Some of Australia’s biggest companies, including NAB, AGL and Suncorp, have already introduced AI systems to tens of thousands of employees for everyday use. It comes as the global generative AI market is expected to surge from $US7.9bn ($12.3bn) to $US110.8bn by 2030, according to Acumen Research and Consulting.

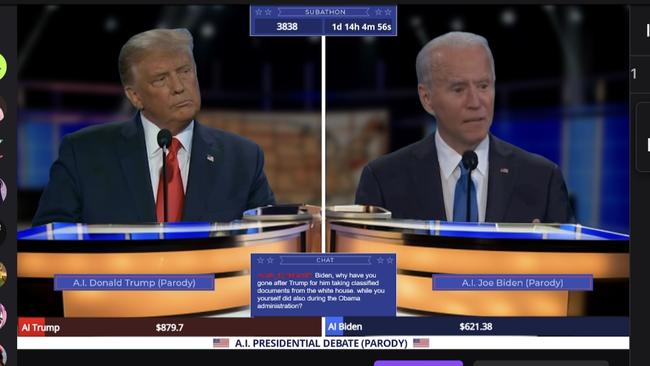

But AI also poses risks, including the spread of more misinformation – particularly ahead of the US presidential election – wrongful surveillance and the development of advanced weaponry by governments and terrorists.

Indeed, Australians have approached AI with a mix of excitement and caution. About half are uncomfortable with the financial sector – including planners, mortgage brokers and tax agents – adopting the technology, according to a Dye & Durham survey of 1600 people.

About 45 per cent of survey respondents said they were also uncomfortable with the healthcare sector using AI. CSL and Cochlear are exploring the technology, while pathology titan Sonic is more advanced in adopting AI, using it as a second set of eyes to diagnose illnesses and other conditions.

Ms Stallbaumer said governments around the world would move at different speeds to regulate the use of AI platforms. “Not every country or region of the world will take the same approach. But I think by and large we believe very strongly in responsible AI and we design for that.”

Adobe chief technology officer for digital media Ely Greenfield said the company had made Firefly “commercially safe” following a six-month beta trial that created more than two billion images.

“We verify the contributor details about how and where the image was captured from (and) what they have the rights to licence, and just about the content to make sure that they meet our community standards or human bias standards,” Mr Greenfield said.

Research group Economist Intelligence Unit says the European Union is likely to become the leader in AI regulation, in much the same way that its laws around device charging cables led to USB-C becoming the new standard.

“As with other digital regulation, the EU has taken the lead over the US and China,” EIU said in its latest report titled Why AI matters.

“The AI Act, which has been passed by the European Parliament and is under tripartite discussions with the European Council and the European Commission, delves into the different levels of risks associated with the technology. AI with unacceptable risks, such as social scoring, is banned, whereas AI with high risk will require both registration and a declaration of conformity before being allowed in the market.”

The EIU says the US has taken a different approach, favouring innovation over regulation, preferring the market to introduce its own self-regulatory principles.

“This approach has been strengthened by its tech rivalry with China, which has increased the pressure to innovate and led the US to impose strict trade controls, such as those on semiconductors.

“The US political system also makes it hard to pass regulations. The legislative branch has looked at AI, with the Senate introducing its SAFE innovation framework, and the House of Representatives introducing the Algorithmic Accountability Act, but neither is likely to pass before Congress’s term ends in 2024, considering no substantial tech legislation was passed in the previous term with the Democrats in control.”

As a stopgap, the Biden administration has sought voluntary commitments from tech companies governing the use of AI – such as watermarking AI generated content – with Adobe, IBM and Nvidia among the latest to sign up to the program this month.

It followed Google and Microsoft signing on to the commitments in July.

“The president has been clear: harness the benefits of AI, manage the risks, and move fast – very fast,” White House chief of staff Jeff Zients said.

“And we are doing just that by partnering with the private sector and pulling every lever we have to get this done.”

Despite the widespread adoption of AI globally, Dye & Durham Australia managing director Dennis Barnhart said it was not a priority for most Australians.

“Only a third of Australians have tried AI and most of them have only experimented with it once or twice. About half the population expressed discomfort with AI being used in professional settings, which reflects its novelty,” he said.

Jared Lynch travelled to New York as a guest of Microsoft
