Experts speak out against ‘cheapfake’ AI revenge porn tech

Your innocent photos uploaded to social media can now be downloaded and used to create revenge porn. The ethical concerns are huge, but just how accessible is this technology?


Imagine your partner was sent a video of you kissing another person. They confront you, and you deny it … then you see the video. The quality isn’t great, but you’re definitely in the video, despite never having met the other person.

But how? You might have heard of deepfakes as something that is only used by Hollywood, but the technology is here now in Australia, and can be accessed by anyone.

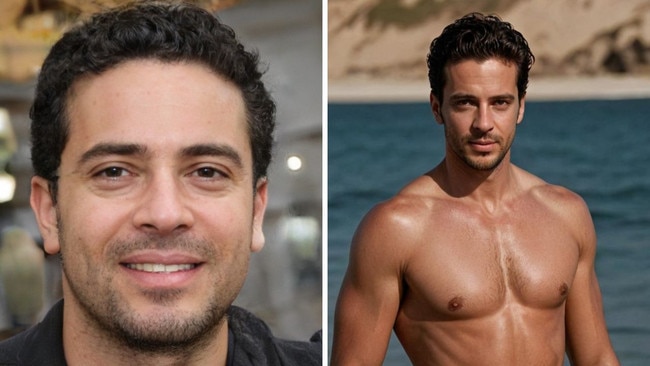

A Courier-Mail investigation can reveal that, for a small fee and just by downloading an app, an innocent photo of you taken from social media can be turned within minutes into a video of you kissing another person without your consent.

This is just the tip of an artificial intelligence iceberg that has experts concerned.

ChatGPT has moved ahead in leaps and bounds over the past few years, generating realistic images at a moment’s notice and transcribing audio, while playing the roles of Wikipedia and Google all at once.

For all its faults, the app has a myriad of protections to ensure that generated content cannot be used for nefarious purposes. You cannot upload images asking for two people to kiss, but ChatGPT will send you toward resources that can provide that service.

Other smaller pay-to-play apps, with thousands of downloads on both the Apple and Google Play app stores, don’t offer any protection for the people featured in the images. On one of the apps we were greeted with a prompt to buy credits so we could use the app. After exiting the prompt we were immediately greeted with ways to “share love” by merging two images together so the people in them kiss and hug.

Dr David Tuffley is a senior lecturer in Applied Ethics and CyberSecurity at Griffith University, and he believes some images generated by AI can be almost indistinguishable from real images.

He said that while major players in the AI industry such as ChatGPT refuse to generate inappropriate images, there is nothing stopping the technology from being abused for malicious purposes.

“If I was to say to it, here’s a photo of Scarlett Johansson, and here’s a photo of me. Just do a video of me kissing her. It would say, ‘I’m sorry, we don’t do that’. It’d be quite firm about it, and it wouldn’t do it,” he said.

“But there are ones for which there are no guardrails. It’ll do whatever you tell it to do. It can be X-rated and those sorts of tools are readily available nowadays.

“I guess the prime audience would be obsessive fans. It would also be people looking to get up to mischief, perhaps a prank on someone, but it could be a lot more malicious than that.

“It could even be a form of revenge porn. There’s any number of bad motives that could be expressed using technology like that.”

He believes the ethical concerns that have arisen from deepfake technology are extremely valid.

“There is obviously the privacy one where people having their image used without their permission, let alone that image being used to be shown in compromising situations,” he said.

“The second troubling point is that it becomes normalised as just something that is out there.

“They can be used definitely for exploitation or harassment of individuals by deliberately creating embarrassing material to embarrass somebody that you have a grudge against.”

eSafety Commissioner Julie Inman Grant said explicit deepfakes on the internet have increased by more than 500 per cent year-on-year since 2019.

“The rapid deployment, increasing sophistication and popular uptake of generative AI means it no longer takes vast amounts of computing power or masses of content to create convincing deepfakes. It can now be done on a smart phone,” she said.

“We also now have literally thousands of open-source AI ‘nudify’ apps online, and they are often free and easy to use by anyone with a smartphone. These ‘cheapfakes’, as I call them, make it simple and cost-free for the perpetrator, while inflicting an incalculable personal cost to the target.

“Cheapfakes are covered by our image-based abuse scheme, which last financial year was successful in removing 98 per cent of the material reported via eSafety.gov.au.”

She said the technology industry is living by the ethos of “moving fast and breaking things” without giving a thought to the consequences of the generated images.

“Our enforceable industry codes and standards will place the onus on AI companies to do more to reduce the risk their platforms can be used to generate highly damaging content such as synthetic child sexual exploitation material.

“Our world-first standards, which came into force towards the end of last year, capture so-called ‘nudify’ apps and sites that use generative AI to create or ‘nudify’ images of children under 18 and the online marketplaces that offer these generative AI ‘models’.

“And tough new laws introduced by the Australian Government last year now impose serious criminal penalties on those who share sexually explicit material without consent. This includes material that is digitally created using artificial intelligence or other technology.”

You can report sharing of non-consensual intimate images via the eSafety website.
