TikTok suicide video: Parents could sue over shock viral video

A horrifying video that’s sent shockwaves through parents and children across the world could result in a class action case against social media giants.

Parents are contacting lawyers to find out how they can take legal action against social media giants that have allowed a disturbing video to circulate on their platforms.

People were being warned yesterday not to open footage of a man who killed himself in a live Facebook stream, but for many it was already too late.

Children had already seen it, with the video reportedly hidden behind other innocent footage on TikTok, prompting schools to send out warning messages.

Prime Minister Scott Morrison has since put social media companies on notice over the “disgraceful” video.

In a new video released on Facebook today, Mr Morrison said the government would act if social media giants did not remove the video and clean up their sites.

But parents are already taking matters into their own hands, finding out whether they have a legal case to pursue.

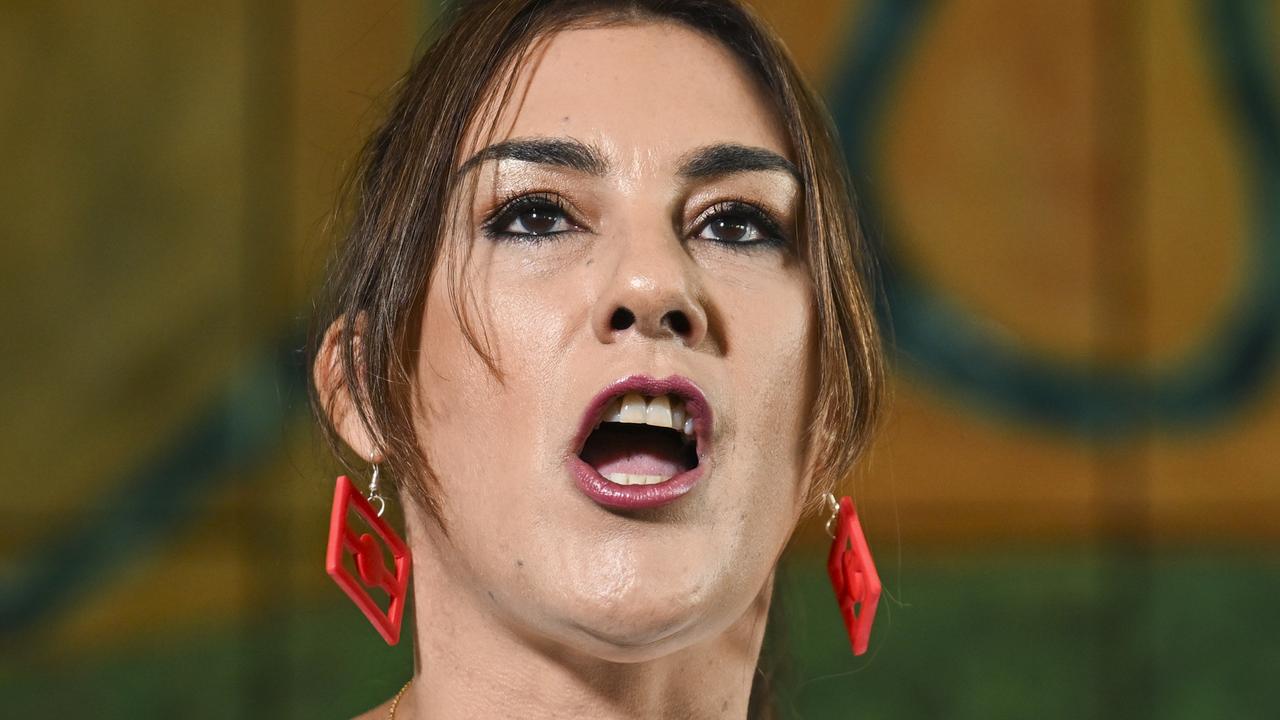

Lisa Flynn, national practice manager at Shine Lawyers, said the firm was investigating a potential civil action claim.

“Anyone harmed by this distressing content could potentially take civil action against these social platforms for their failures,” she said.

“This is a responsibility these social platforms need to take very seriously given they provide the ability for anyone to stream live.

“These are multi-billion dollar companies who need to invest these profits to make their service safe. They are like every other service provider – they need to make it fit for purpose with safety obligations.”

Ms Flynn said this was something that should never happen again.

“Surely this was something that was considered an extremely serious risk when live streaming was made available, and the other platforms knew distressing content could be uploaded behind innocent content,” she said.

“Exposure to such graphic acts, especially a loss of life, can have devastating impacts on a young child’s mental development.

“Viewing this material can cause childhood trauma which can have lifelong impacts on a person’s life.

“Youth suicide has increased dramatically since COVID restrictions, and the last thing young, underdeveloped brains need to see is a person taking their life.”

Ms Flynn said children as young as 13 did not have the cognitive or social development to process such confronting vision, let alone the coping strategies to deal with the trauma caused.

“What has happened demonstrates a complete failure of care on the part of these social platforms that are raking in millions of dollars in profits through providing the stage for information sharing,” she said.

“When they are making these profits – there comes a responsibility to ensure that they are taking all reasonable steps to protect their users from harm.

“TikTok and Instagram, in particular, need to provide answers as to why that distressing video was not removed or blocked from circulation immediately.

“Families are reporting that their young children saw the video almost a week ago. It’s disgraceful that it is still available to be viewed.

“These social platforms should exist only when they are able to demonstrate that they have enough control over the content to ensure that they take the necessary steps to protect their users from harm when the potential harm is obvious and devastating.”

The video of Ronnie McNutt was streamed to Facebook on August 31 before it was removed, but screen recordings continue to be circulated.

Suicide Prevention Australia is urging the Morrison Government to legislate a National Suicide Prevention act to bring together a national approach to the issue.

TikTok said its systems had been automatically detecting and flagging the clips for violating its policies against content that displays, praises, glorifies, or promotes suicide.

“We are banning accounts that repeatedly try to upload clips, and we appreciate our community members who’ve reported content and warned others against watching, engaging, or sharing such videos on any platform out of respect for the person and their family,” it said.

“If anyone in our community is struggling with thoughts of suicide or concerned about someone who is, we encourage them to seek support, and we provide access to hotlines directly from our app and in our Safety Centre.”