Robotics scientist warns of terrifying future as world powers embark on AI arms race

IN ONE scenario a swarm of autonomous bots would hang above a combat zone, scramble the enemy’s communications and autonomously fire against them.

AUTONOMOUS robots with the ability to make life or death decisions and snuff out the enemy could very soon be a common feature of warfare, as a new-age arms race between world powers heats up.

Harnessing artificial intelligence — and weaponising it for the battlefield and to gain advantage in cyber warfare — has the US, Chinese, Russian and other governments furiously working away to gain the edge over their global counterparts.

But researchers warn of the incredible dangers involved and the “terrifying future” we risk courting.

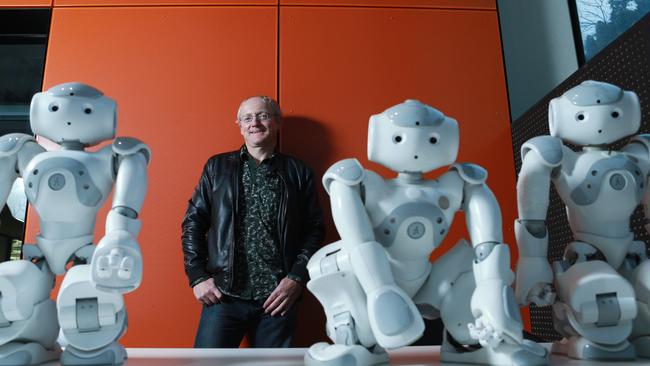

“The arms race is already starting,” said Professor Toby Walsh from UNSW’s School of Computer Science and Engineering.

He has travelled to speak in front of the United Nations on a number of occasions in an effort to have the international body prevent the proliferation of killer robots.

“It’s not just me but thousands of my colleagues working in the area of robotics ... and we’re very worried about the escalation of an arms race,” he said.

The US has put artificial intelligence at the centre of its quest to maintain its military dominance.

Robot strike teams, autonomous landmines, missiles with decision-making powers, and covert swarms of minuscule robotic spies were among the technological developments touted in an October report released by the US Department of Defense.

In one particular scenario, a swarm of autonomous drones would hang above a combat zone to scramble the enemy’s communications, provide real-time surveillance, and autonomously fire against the enemy.

It’s a future envisioned by more than just the Pentagon.

China, which among other things is building cruise missiles with a degree of autonomy, is nipping at America’s heels.

In August, the state-run China Daily newspaper reported that the country had embarked on the development of a cruise missile system with a “high level” of artificial intelligence. The announcement was thought to be a response to the “semi-autonomous” Long Range Anti-Ship Missile expected to be deployed by the US in 2018.

THE TERMINATOR CONUNDRUM

It’s a matter of years, not decades, until military weapons are imbued with some level of autonomy, according to the experts.

“They get in the hands of the wrong people and they can be turned against us. They can be used by terrorist organisations,” Prof Walsh warned.

“It would be a terrifying future if we allow ourselves to go down this road.”

“If they fall into the hands of ISIS or North Koreans who are not too worried about using them on civilian populations, that’s going to be a very bad outcome,” he said.

But the debate within the military community is no longer about whether to build autonomous weapons but about how much independence to give them. It’s something the industry has dubbed the “Terminator Conundrum”.

The Pentagon has earmarked $US18 billion ($23.5 billion) over the next three years for developing AI technology.

The US has already tested missiles that can decide what to attack and it has built ships that can hunt for enemy submarines, stalking those it finds over thousands of kilometres without any human help.

The Pentagon also hopes to design artificially intelligent cybersecurity software that can detect and react to threats faster than any human could.

Despite calls from the likes of Prof Walsh and his contemporaries — including an open letter penned in 2015 and signed by more than 1000 AI and robotics experts including Elon Musk, Steve Wozniak and Stephen Hawking — governments feel like they can’t take the risk of falling behind.

“China and Russia are developing battle networks that are as good as our own. They can see as far as ours can see; they can throw guided munitions as far as we can,” US deputy defence secretary Robert O. Work told The New York Times in October.

“What we want to do is just make sure that we would be able to win as quickly as we have been able to do in the past.”

PRIVATE SECTOR LEADING THE CHARGE

Speaking from San Francisco ahead of a major AI industry conference, Prof Walsh said that, unlike in previous arms races, much of the progress in AI development was being made by private corporations.

“Some military people here are suggesting that actually the big tech companies are well ahead of the curve than the military,” he told news.com.au.

Because Silicon Valley companies are making much of the progress, some of it open source, the technology is more readily available to world governments.

“It’s the same sort of technology that is going to go into autonomous cars which is going to be a good thing ... but giving it the right to make life or death decisions (in the battlefield) is probably a bad idea,” Prof Walsh said.

Regardless of its intended use, the seemingly inevitable pursuit of genuine artificial intelligence has prompted doomsday prophecies from plenty of worried commentators.

“One of the things that worries me most about the development of AI at this point is that we seem unable to marshal an appropriate emotional response to the dangers that lie ahead,” neuroscientist and author Sam Harris told the audience during a TED talk in September.

“I think we need something like a Manhattan Project on the topic of artificial intelligence. Not to build it, because I think we’ll inevitably do that, but to understand how to avoid an arms race and to build it in a way that is aligned with our interests,” he said.