Elon Musk donated $10 million to ensure robots don’t eventually murder you

ELON Musk has never been shy about voicing his concerns over the advancement of artificial intelligence. Now he is putting his money where his mouth is, and it could help save us all.

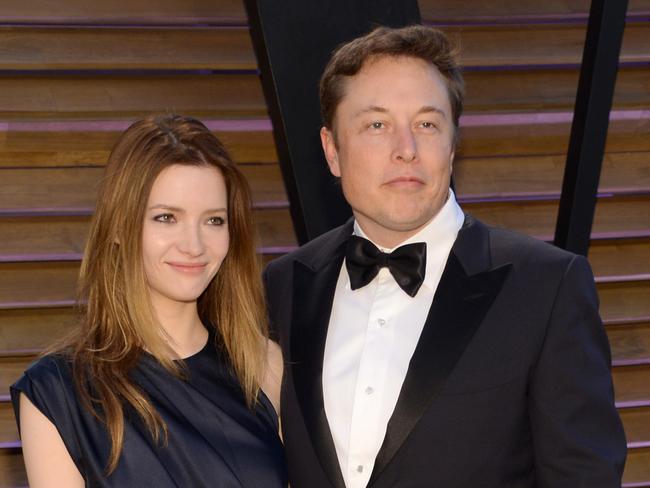

BILLIONAIRE inventor and futurist Elon Musk has not been shy about his concerns over our march towards artificial intelligence.

Now the CEO of Tesla and SpaceX has taken things into his own hands and donated $10 million to ensure that the research and development of AI remains safe and beneficial for humans.

The donation was made to the Future of Life Institute (FLI), which describes itself as an organisation “working to mitigate existential risks facing humanity.”

The FLI will oversee the distribution of the money through a number of grants to researchers working in the field of artificial intelligence.

“The plan is to award the majority of the funds to AI researchers and the remainder to AI-related research involving other fields such as economics, law, ethics, and policy,” the institute explained in a press release.

“Building advanced AI is like launching a rocket. The first challenge is to maximise acceleration, but once it starts picking up speed, you also need to focus on steering,” said Jaan Tallinn, a Skype co-founder and one of the FLI’s founders.

In addition to research grants, the program will include meetings and outreach efforts aimed at bringing together academic AI researchers, industry AI developers and other key constituents to continue exploring how to maximise the societal benefits of AI.

While the FLI hasn’t provided any specifics about what it hopes to see come out of the research, it did provide a list of guidelines and priorities earlier in the week.

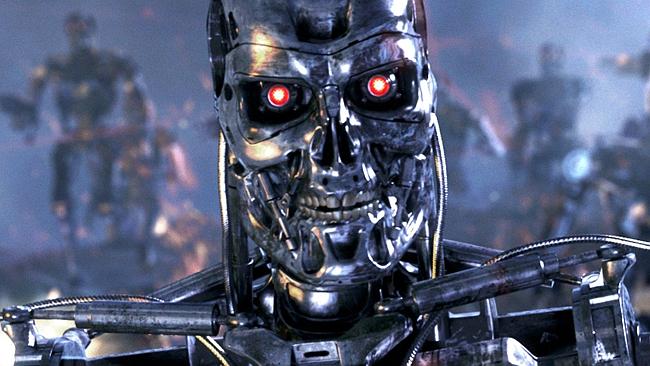

Mr. Musk has been consistently vocal about his concerns regarding the advancement of AI. In the past he has said it could be “potentially more dangerous than nukes” and has even evoked scenes from the Terminator films.

Speaking about his donation, he continued to stress the importance of the work to keep AI safe for humans. “When the risk is that severe, it seems like you should be proactive and not reactive,” he said.