Aussie industry set to almost halve thanks to AI

New data out of the US on the future of AI paints a bleak picture for one industry that employs more than 415,000 in Australia.

From writing emails to crafting travel itineraries or meal plans, we are seeing artificial intelligence being embraced by companies and individuals across the world – now more than ever.

Since the launch of advanced chatbot ChatGPT late last year, there has been much talk about what jobs could be lost to AI in the future.

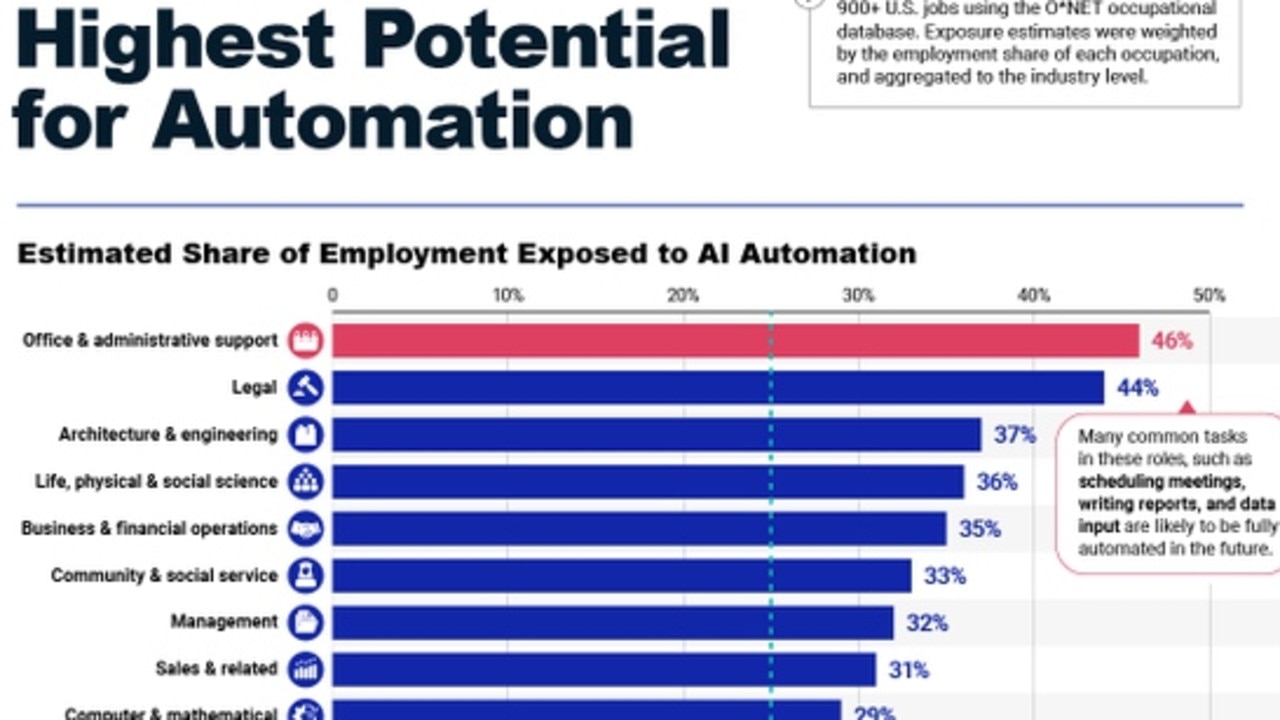

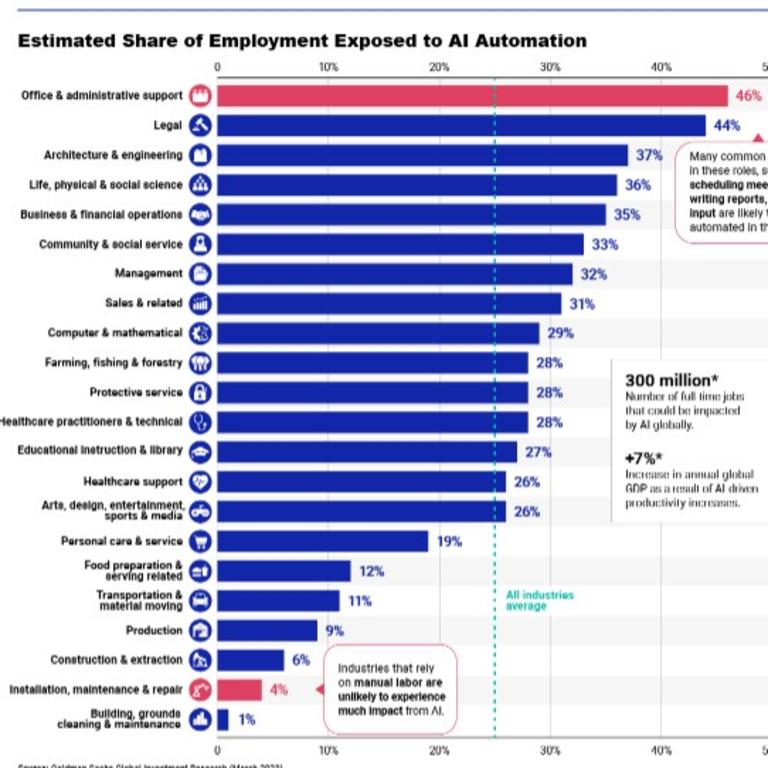

Morgan Stanley Capital International (MSCI) this week ranked US industries by their estimated share of employment that could be exposed to AI-driven automation, using data from Goldman Sachs Global Investment Research.

Office and administrative support is at the top of the list, with an estimated 46 per cent of these jobs in the US facing potential replacement by AI-driven automation.

In Australia, there are 415,300 people whose main job is in administrative and support services, according to Jobs and Skills Australia’s latest labour market update in February.

If 46 per cent of the Australian industry were at risk of AI-driven automation like the analysis predicts for the US, more than 191,000 Aussie jobs could be in trouble in this one industry alone.
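The arithmetic behind that figure can be checked with a short sketch. It assumes, as the paragraph above does, that the US exposure share carries over unchanged to the Australian workforce; the constant and function names are illustrative only and do not come from the MSCI analysis.

```python
# Figures quoted in the article. Applying the US share to Australia
# is a hypothetical, not a finding of the MSCI analysis.
AU_ADMIN_SUPPORT_JOBS = 415_300   # Jobs and Skills Australia, February update
US_EXPOSED_SHARE_PERCENT = 46     # US office and administrative support


def exposed_jobs(total_jobs: int, share_percent: int) -> int:
    """Return the number of jobs in the exposed share, rounded down."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return total_jobs * share_percent // 100


at_risk = exposed_jobs(AU_ADMIN_SUPPORT_JOBS, US_EXPOSED_SHARE_PERCENT)
print(f"{at_risk:,}")  # 191,038
```

The result, 191,038, is consistent with the "more than 191,000" quoted above.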

“Many common tasks in these roles, such as scheduling meetings, writing reports and data input are likely to be fully automated in the future,” the analysis says.

As expected, MSCI reported that industries relying heavily on manual labour will be affected the least.

That is good news for the 1,322,100 people who work in construction in Australia.

The Australian industry that employs the largest number of people (2,112,600) is healthcare and social assistance.

While the US analysis doesn’t include this exact category, it does show 26 per cent of healthcare support jobs could be exposed to AI-driven automation and 28 per cent of healthcare practitioner and technical jobs.

Community and social services is ranked as the sixth most likely industry in the US to be affected – 33 per cent of jobs could be at risk.

‘Godfather of AI’ urges governments to stop machine takeover

Geoffrey Hinton, one of the so-called godfathers of artificial intelligence, urged governments this week to step in and make sure that machines do not take control of society.

Mr Hinton made headlines in May when he announced that he had quit Google after a decade of work to speak more freely on the dangers of AI, shortly after the release of ChatGPT captured the imagination of the world.

The highly respected AI scientist, who is based at the University of Toronto, was speaking to a packed audience at the Collision tech conference in the Canadian city.

The conference brought together more than 30,000 start-up founders, investors and tech workers, most looking to learn how to ride the AI wave and not hear a lesson on its dangers.

“Before AI is smarter than us, I think the people developing it should be encouraged to put a lot of work into understanding how it might try and take control away,” Mr Hinton said.

“Right now there are 99 very smart people trying to make AI better and one very smart person trying to figure out how to stop it taking over and maybe you want to be more balanced.”

Mr Hinton warned that the risks of AI should be taken seriously, despite critics who believe he is overplaying them.

“I think it’s important that people understand that this is not science fiction, this is not just fear mongering,” he insisted. “It is a real risk that we must think about, and we need to figure out in advance how to deal with it.”

Mr Hinton also expressed concern that AI would deepen inequality, with the massive productivity gain from its deployment going to the benefit of the rich, and not workers.

“The wealth isn’t going to go to the people doing the work. It is going to go into making the rich richer and not the poorer and that’s very bad for society,” he added.

He also pointed to the danger of fake news created by ChatGPT-style bots and said he hoped that AI-generated content could be marked in a way similar to how central banks watermark banknotes.

“It’s very important to try, for example, to mark everything that is fake as fake. Whether we can do that technically, I don’t know,” he said.

The European Union is considering such a technique in its AI Act, legislation currently being negotiated by politicians that will set the rules for AI in Europe.

‘Overpopulation on Mars’

Mr Hinton’s list of AI dangers contrasted with conference discussions that were less about safety and threats, and more about seizing the opportunity created in the wake of ChatGPT.

Venture capitalist Sarah Guo said doom and gloom talk of AI as an existential threat was premature and compared it to “talking about overpopulation on Mars”, quoting another AI guru, Andrew Ng.

She also warned against “regulatory capture” that would see government intervention protect the incumbents before AI had a chance to benefit sectors such as health, education or science.

Opinions differed on whether the current generative AI giants – mainly Microsoft-backed OpenAI and Google – would remain unmatched or whether new actors would expand the field with their own models and innovations.

“In five years, I still imagine that if you want to go and find the best, most accurate, most advanced general model, you’re probably going to still have to go to one of the few companies that have the capital to do it,” said Leigh Marie Braswell of venture capital firm Kleiner Perkins.

Zachary Bratun-Glennon of Gradient Ventures said he foresaw a future where “there are going to be millions of models across a network much like we have a network of websites today.”