Google launches new AI tech along with Pixel 9 range at Made By Google

The whole world was watching as Google announced major new artificial intelligence innovations and its new Pixel 9 range.

Google has launched new artificial intelligence (AI) tech, watches and Pixel phones at its Made by Google event this morning.

Of course, it wouldn’t be a tech event in 2024 without AI featuring prominently, with Google promising it will “change the way people interact with mobile devices”.

The tech giant has now integrated AI through its entire tech stack, from the Tensor Processing Units through to the apps used by about 2 billion people every month.

And when Google talks AI, the world listens as it owns the Android platform used by billions of mobile phone devices.

Google’s platforms & devices senior vice president Rick Osterloh said the firm was bringing “cutting-edge AI to mobile in a way that benefits the entire Android ecosystem”.

The firm’s generative artificial intelligence chatbot Gemini (formerly Bard) was front and centre of the event.

Presenters put on a number of live demos in a bid to show why Gemini 1.5 Pro tops the open-source Chatbot Arena leaderboard, a place where people rank and “evaluate large language models by human preference in the real-world”.

Live AI demo goes wrong

However, not everything went to plan at Google’s Bay View campus in Mountain View.

Jess Carpenter and Dave Citron took to the stage to show how Gemini works with Google’s popular apps, using a Samsung Galaxy S24 Ultra.

“All the demos we’re doing today are live by the way,” Mr Citron said in a great example of famous last words.

He then took a photo of a concert poster held by Ms Carpenter and asked the AI “check my calendar and see if I’m free when she’s coming to San Francisco this year”.

He explained: “Gemini pulls relevant content from the image, connects with my calendar and gives me the information I’m looking for.”

Sadly, Gemini didn’t respond as hoped and appeared to miss the prompt entirely, instead displaying: “Type, talk or share a photo to Gemini Advanced.”

Undeterred, Mr Citron tried again, but again it failed. “Oh oh,” he said.

Finally, it worked on the third attempt — on another device — showing that his calendar was free on those dates and earning him a loud sympathy cheer.

The internet didn’t let the moment pass, with one person quipping: “That’s embarrassing.”

Ms Carpenter was a lot more successful and showed how the AI can understand what’s on the phone screen, and answer prompts based on that.

If “please work, please work, please work” was a person. 😅 #MadeByGoogle pic.twitter.com/011rdLPKI0

— Asanda Mbali (@AS_Mbali) August 13, 2024

Pixel series

The Pixel phones are known for their incredible cameras, so there was plenty of attention on the launch of the Pixel 9 series.

Four phones were launched this morning: the Pixel 9, Pixel 9 Pro, Pixel 9 Pro XL and Pixel 9 Pro Fold.

Pixel phones product management vice president Brian Rakowski called it the “biggest update we’ve ever made to the Pixel family”, citing the Tensor G4 processor and on-device AI Gemini Nano.

The phones have a new, re-engineered metal frame look, are more durable and have upgraded camera systems.

The displays have also been improved and the fingerprint unlock is faster.

Memory has also been upgraded, with 12GB of RAM on the Pixel 9 and 16GB on the pro models.

There’s a new “vapour chamber” that cools the phones, and battery life is 20 per cent better.

One of the new features, available thanks to Gemini Nano, is an AI weather report, which provides a useful, customisable summary of the weather.

Another handy new app is Call Notes, which generates a transcript and summary of a phone call.

Folding phone

A major highlight was also the launch of the Pixel 9 Pro Fold, a foldable phone that is essentially both an 8” tablet and a phone.

The new product is much thinner than the older iteration and has a much brighter display.

It also comes with the Tensor G4 and 16GB of RAM.

The Fold also has a split screen mode to allow for multi-tasking.

Camera system

The AI-powered Pixel camera is the main selling point for the phones, and Google has made some big changes.

Photos are improved because of new ways that the phones handle exposure, sharpness, detail and contrast.

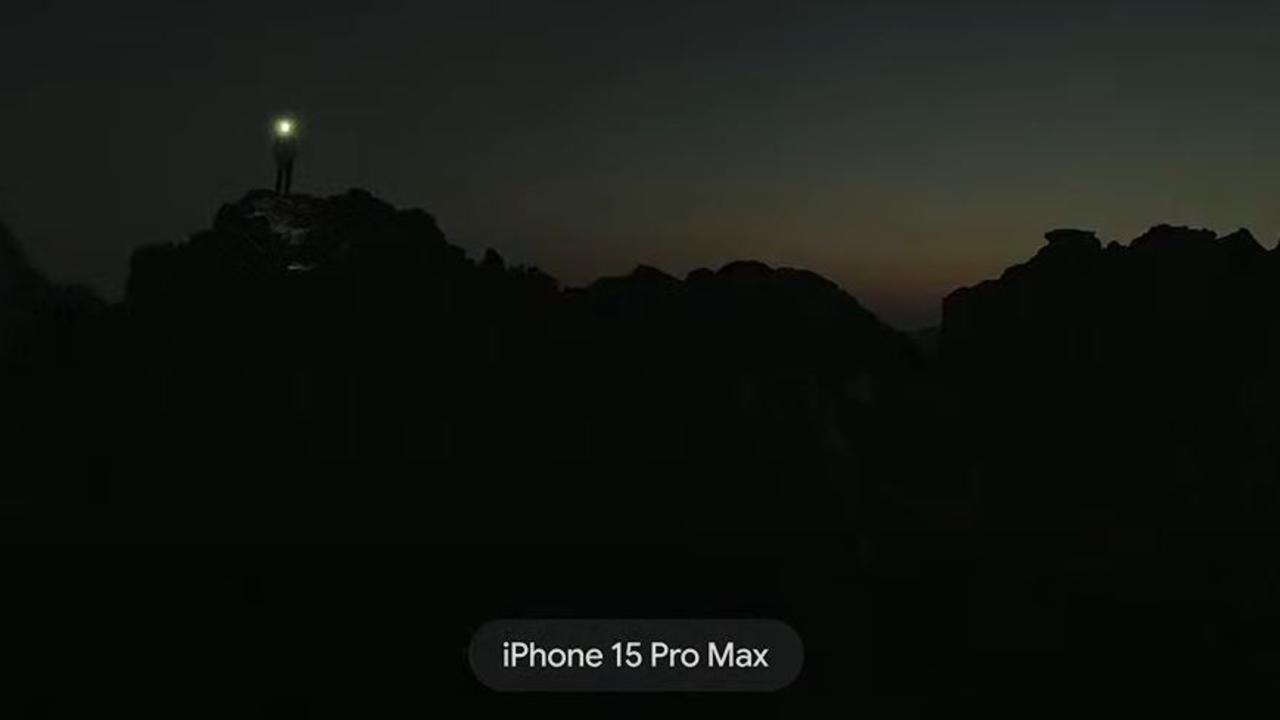

The “Night Sight” function has now been extended to panorama images, and the Pixel team couldn’t help sticking the boot into Apple by comparing images taken on the Pixel 9 Pro XL to “another smartphone camera” (iPhone 15 Pro Max).

A new Add Me function allows people to be added to a photo, even if they weren’t physically there when the image was taken.

They wheeled out NBA star Jimmy Butler for the demo and he helped show how you can take a photo, and be added to it afterwards.

Other stuff

Also launched was the new Google Pixel Watch 3, which is “bigger, brighter and more fine-tuned for fitness”.

It now features Loss of Pulse Detection, which can put in an emergency call if it detects your heart has suddenly stopped beating.

Also unveiled was the Pixel Buds Pro 2, which “processes audio 90 times faster than the speed of sound” and offers “twice as much midband noise cancellation”.

They’re smaller and lighter than the Pixel Buds Pro.

Changes for everyone

Google is nothing if not ambitious.

It’s planning to bring AI to “everyone” via Gemini’s integration with the Android operating system, according to Sameer Samat, Google’s VP of Product Management, Android.

Gemini is now available on hundreds of phone models including flagship and older phones.

And the focus is on AI tech that people can actually use, something not everyone is convinced of.

Mr Samat said that if you grant Gemini access to your personal data, the AI can connect to Google’s vast reservoir of information to answer complex personal queries. For example, it can create a daily workout routine based on an email from a personal trainer.

This is done on the Google Cloud Platform, meaning third parties don’t have access to the data.

On-device generative AI is also possible through Gemini Nano, meaning no one else has access to the data.

“It’s the biggest leap forward since we launched Google Assistant,” Mr Osterloh said.