Algorithms used to enhance images give smartphones the power of professional cameras

Smartphone cameras are now so good they can rival professional gear, but the improvements have little to do with the cameras themselves.

You’ve likely noticed already that smartphone cameras have gotten insanely good, and some flagship phones now produce images that come close to rivalling professional cameras that cost as much as, if not more than, the phones themselves.

But despite the massive improvements, the camera hardware itself hasn’t advanced that much.

Many of them still use comparatively tiny sensors (the “eyeball” of the camera that captures light; generally, the bigger the better) and simple lenses.

While recently we’ve witnessed the number of lenses on our smartphones grow to sometimes comical levels, the photos they now produce are no joke, and it doesn’t have much to do with the actual camera.

Software optimisation and what’s called computational photography are the main drivers behind the increased quality.

The need to optimise software has existed since the early days of computing, but it fell by the wayside for several years as computers became powerful enough to cope with less-than-stellar code without a problem.

But software optimisation is still around, and is becoming a renewed focus in computing.

Intel chief architect Raja Koduri recently explained why optimisation matters so much to the processor giant.

“For every order of magnitude performance potential of a new hardware architecture there are two orders of magnitude performance enabled by software,” Mr Koduri said at the Computex expo earlier this year.

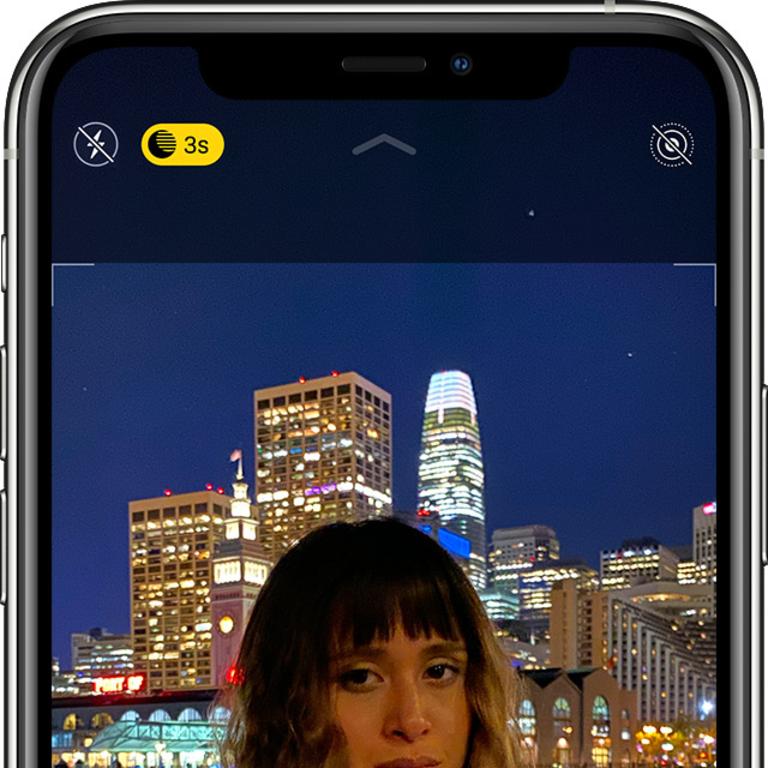

Software optimisation, in the form of computational photography, is the reason behind these advances in smartphone cameras. It is responsible for features like HDR (high-dynamic-range imaging, where multiple photos taken at different brightness levels are merged to create an image that more closely matches how the human eye sees a scene) and the Panorama mode on your phone, which takes multiple images as you pan across a scene and then stitches them together.

Both of these were possible in the past using sophisticated photo-editing software like Photoshop, but now smartphones are powerful enough to run these sorts of processes quickly and automatically.
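As a rough illustration of the HDR idea, the Python sketch below merges several aligned exposures of the same scene into one brightness estimate per pixel. It assumes the frames are already aligned, that pixel values are linear and between 0 and 1, and that exposure times are known; the function names are hypothetical, and real phone pipelines add alignment, noise reduction and tone mapping on top. Each frame's pixel is divided by its exposure time to estimate scene brightness, and near-black or blown-out pixels are given little weight because they carry little information.

```python
def hat_weight(value):
    """Trust mid-tones most; near-black and near-white (clipped) pixels get ~0 weight."""
    return max(0.0, 1.0 - abs(2.0 * value - 1.0))


def merge_exposures(frames, exposure_times):
    """Merge aligned frames into one relative-brightness value per pixel.

    Hypothetical helper. Assumes each frame is a flat list of linear pixel
    values in [0, 1] and exposure_times[i] is the exposure of frames[i].
    """
    merged = []
    for pixel_values in zip(*frames):
        weighted_sum = 0.0
        total_weight = 0.0
        for value, exposure in zip(pixel_values, exposure_times):
            w = hat_weight(value)
            # Dividing by exposure time puts every frame on the same brightness scale.
            weighted_sum += w * value / exposure
            total_weight += w
        if total_weight > 0.0:
            merged.append(weighted_sum / total_weight)
        else:
            # Every frame was pure black or pure white: fall back to a plain average.
            estimates = [v / t for v, t in zip(pixel_values, exposure_times)]
            merged.append(sum(estimates) / len(estimates))
    return merged


if __name__ == "__main__":
    short_exposure = [0.05, 0.5]  # exposure time 1
    long_exposure = [0.4, 1.0]    # exposure time 8; second pixel is clipped
    print(merge_exposures([short_exposure, long_exposure], [1.0, 8.0]))
```

In this example the second pixel is blown out to pure white in the long exposure, so it gets zero weight and its brightness comes entirely from the short exposure, while the dark first pixel leans on the long exposure, where it sits comfortably in the mid-tones.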

But recently another advancement has been made in the world of computational photography, and this time the focus is on underwater imaging.

Derya Akkaynak, an engineer and oceanographer at Israel’s University of Haifa, and her research supervisor Tali Treibitz recently unveiled a new algorithm called Sea-thru that effectively removes the water from underwater images.

Previously, underwater photographs almost always carried the same dull blue tint, because water absorbs warm colours such as red far more strongly than blue as light travels through it. The new algorithm corrects for this, and the results speak for themselves.

Again, this colour imbalance could previously be fixed with photo-editing software by boosting the amount of red and yellow in an image taken underwater.

But the new algorithm doesn’t simply paint over the colour loss; it reverses it by modelling and removing the distortion caused by the water itself.

As pointed out by Scientific American, the algorithm does require knowing the distance between the camera and the subject.
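To see why the distance matters, here is a simplified, hypothetical Python sketch of the kind of image-formation model underwater colour-correction research builds on: light coming from the subject fades exponentially with distance (red fastest, in the made-up numbers used here), while "backscatter", light scattered by the water itself, builds up in front of it. This is not the Sea-thru implementation. The coefficients are invented and held fixed, whereas the published method estimates its quantities from the image and a distance map and is considerably more involved. The key point is that undoing the effect requires the same distance used in the forward model.

```python
import math

# Illustrative, made-up per-channel (R, G, B) coefficients, NOT values from Sea-thru.
BETA_D = (0.6, 0.12, 0.08)     # attenuation of light from the subject, per metre
BETA_B = (0.5, 0.3, 0.25)      # how quickly backscatter builds up, per metre
VEILING = (0.02, 0.25, 0.35)   # colour of the water itself at great distance


def degrade(true_colour, distance_m, beta_d=BETA_D, beta_b=BETA_B, veiling=VEILING):
    """Forward model: how water alters one pixel's RGB at a given distance."""
    return tuple(
        j * math.exp(-bd * distance_m) + b_inf * (1.0 - math.exp(-bb * distance_m))
        for j, bd, bb, b_inf in zip(true_colour, beta_d, beta_b, veiling)
    )


def restore(observed, distance_m, beta_d=BETA_D, beta_b=BETA_B, veiling=VEILING):
    """Invert the forward model: subtract backscatter, then undo attenuation."""
    return tuple(
        (i - b_inf * (1.0 - math.exp(-bb * distance_m))) * math.exp(bd * distance_m)
        for i, bd, bb, b_inf in zip(observed, beta_d, beta_b, veiling)
    )


if __name__ == "__main__":
    coral = (0.8, 0.5, 0.3)
    seen = degrade(coral, 3.0)       # what the camera records 3 m away
    print(seen)
    print(restore(seen, 3.0))        # correct distance: colours recovered
    print(restore(seen, 1.0))        # wrong distance: red stays badly washed out
```

Restoring with the wrong distance leaves the red channel far too dark, which is why the algorithm needs a reliable estimate of how far away each part of the scene is.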

In her development of the algorithm, Ms Akkaynak took multiple photos of the same scene from a variety of angles, which were then used to figure out the distance.

But all these extra steps could soon be made unnecessary by technology such as the time-of-flight (ToF) camera systems already found in some smartphones.

ToF cameras work by emitting infrared light and measuring how long it takes to bounce back, which reveals the distance between the camera and the subject.
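The underlying arithmetic is simple. Light travels to the subject and back, so the distance is the speed of light multiplied by the round-trip time, divided by two. Some ToF sensors instead measure the phase shift of continuously modulated infrared light, which gives distance as c·Δφ/(4π·f) for modulation frequency f. The Python sketch below shows both; the function names and example values are hypothetical, not figures from any real sensor.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds):
    """Direct ToF: light goes out and back, so halve the total path length."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def distance_from_phase(phase_shift_rad, modulation_hz):
    """Indirect ToF: a round trip of 2d shifts the modulated signal's phase
    by 2*pi*f*(2d/c), so d = c * phase / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_PER_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


if __name__ == "__main__":
    print(distance_from_round_trip(10e-9))     # a 10-nanosecond round trip
    print(distance_from_phase(math.pi, 20e6))  # half-cycle shift at 20 MHz
```

A 10-nanosecond round trip works out to roughly 1.5 m, which shows how precise the timing electronics in these sensors have to be.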

Currently, ToF depth data is used for features like Portrait mode on some smartphones, helping the camera work out which parts of the photo to blur.
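A toy version of that Portrait-mode decision might look like the Python sketch below: pixels whose measured depth is more than a chosen tolerance away from the subject's are treated as background and softened. The tolerance, the simple neighbourhood averaging and the function names are illustrative assumptions; real phones rely on far more sophisticated segmentation and lens-blur rendering.

```python
def blur_mask(depth_map_m, subject_distance_m, tolerance_m=0.5):
    """Return True for pixels whose depth is far from the subject's (to be blurred)."""
    return [[abs(d - subject_distance_m) > tolerance_m for d in row] for row in depth_map_m]


def apply_portrait_blur(image, mask):
    """Replace masked pixels with the mean of their (up to) 3x3 neighbourhood.

    A crude stand-in for a real lens-blur effect; image is a 2D list of
    brightness values and mask is the same shape.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                neighbours = [
                    image[ny][nx]
                    for ny in range(max(0, y - 1), min(height, y + 2))
                    for nx in range(max(0, x - 1), min(width, x + 2))
                ]
                out[y][x] = sum(neighbours) / len(neighbours)
    return out


if __name__ == "__main__":
    depth = [[1.0, 1.2, 4.0]]  # subject at ~1 m, background at 4 m
    pixels = [[10, 20, 30]]
    mask = blur_mask(depth, subject_distance_m=1.0)
    print(mask)
    print(apply_portrait_blur(pixels, mask))
```

Only the background pixel is changed; the two pixels within half a metre of the subject are left sharp.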

But there’s potential for ToF depth sensing to be combined with an algorithm like Sea-thru (on a waterproof phone) to create underwater images with more vivid and accurate colourscapes.

Aside from the obvious consumer benefits possible in the future, right now Sea-thru can give scientists more useful data from underwater imagery to help them research coral reefs and marine life.