Sweeping new changes coming to Meta platforms for teen users

Sweeping changes are coming for teenage social media users as Meta continues to crack down on the content teens see every day.

Meta has announced it will introduce new safeguards in an effort to protect teenage users on its platforms.

The social media service is expanding its protections for teen users on Instagram, with new built-in safeguards being rolled out for all users under the age of 16.

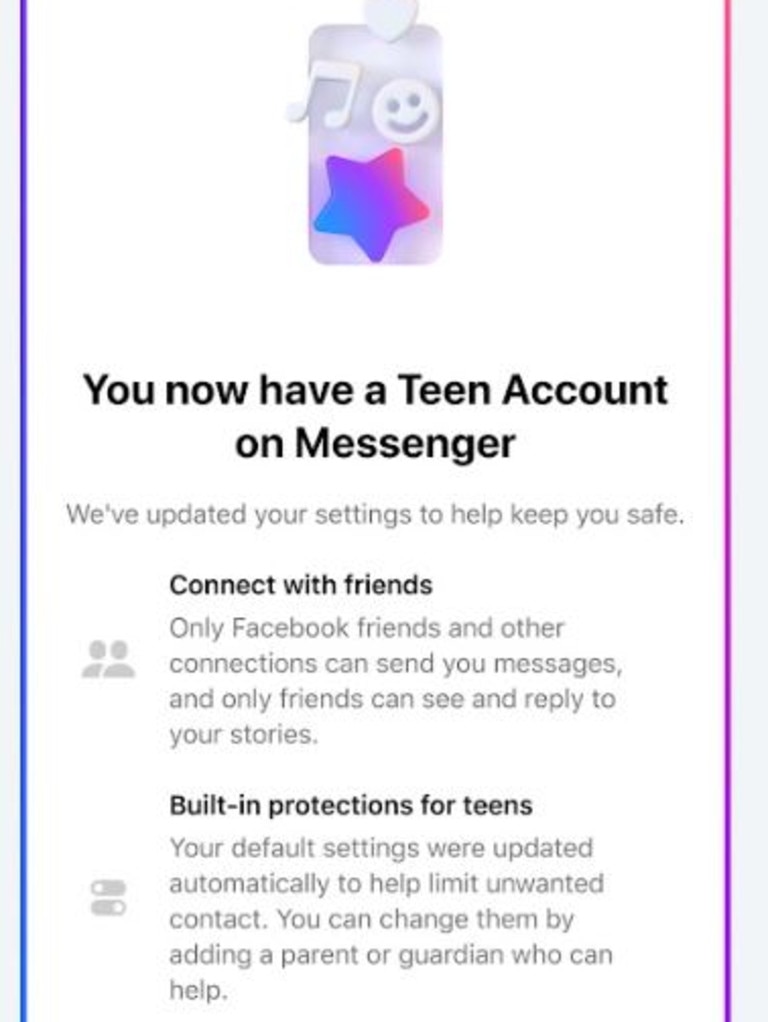

Teen Accounts, a “dedicated experience” for children using the platform, include protections that limit who can contact them, the content they access and how long they spend using the app.

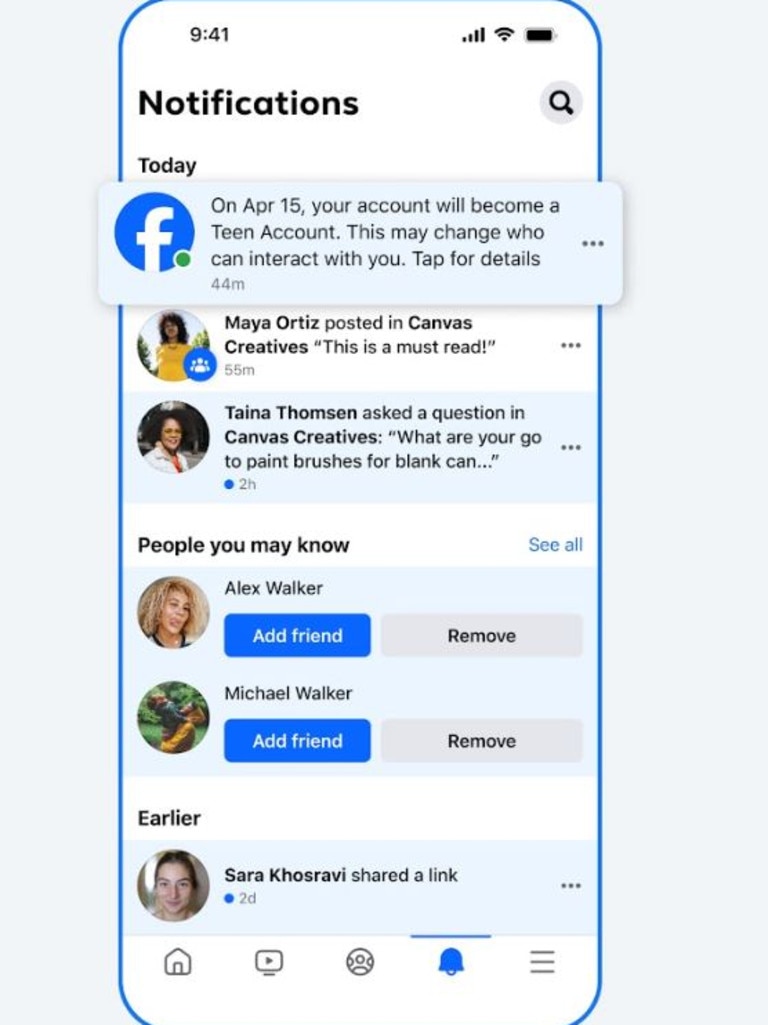

Meta has since unveiled a series of new features, while placing all teens under 16 into Teen Accounts and requiring a parent’s permission to change any settings or relax the protections.

The changes mean teenagers under 16 will not be able to begin livestreaming on the app without parental permission, nor will they be able to switch off the automatic blurring of suspected nudity in direct messages.

According to preliminary results from the social media company, 97 per cent of users aged between 13 and 15 continued using the default Teen Account protections, and 94 per cent of parents said the platform protections were helpful in safeguarding their children.

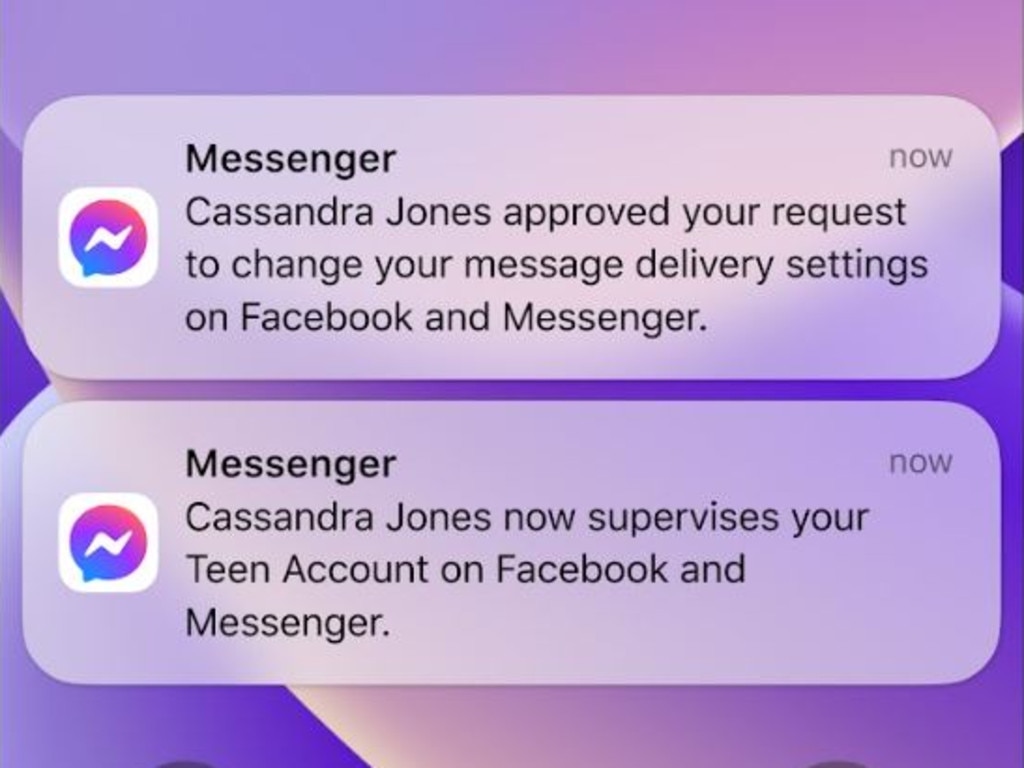

The Teen Account services will now be expanded to other Meta platforms, including Facebook and Messenger.

Some of these features will include screen time nudges, message limits and content controls.

“These are major updates that have fundamentally changed the experience for teens on Instagram,” a Meta spokesman said.

“Meta is encouraged by the progress, but the work to support parents and teens doesn’t stop here, so these additional protections and expansion of Teen Accounts to Facebook and Messenger will help give parents more peace of mind across Meta apps.”