Warning over ‘horrifying’ AI trend becoming increasingly common

Experts are warning people to watch out for a “dangerous” new trend flooding social media, and chances are you’ve “fallen for it” too.

Specifically, they are urging people to be wary of “deepfake doctors” after a string of videos offering unfounded medical advice flooded social media.

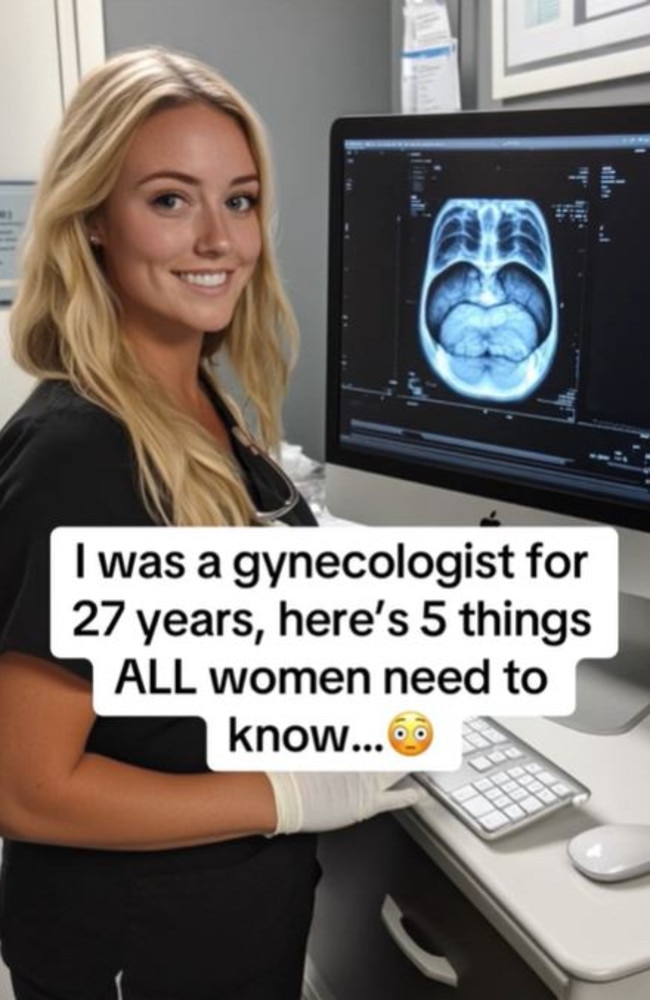

Some of the so-called “doctors” claim expertise in a range of specialties, including diet, plastic surgery, breasts, butts and stomachs, and offer advice to cure or remedy viewers’ ailments or health concerns.

But the so-called experts aren’t even real. They’re completely computer-generated by artificial intelligence, the New York Post reports.

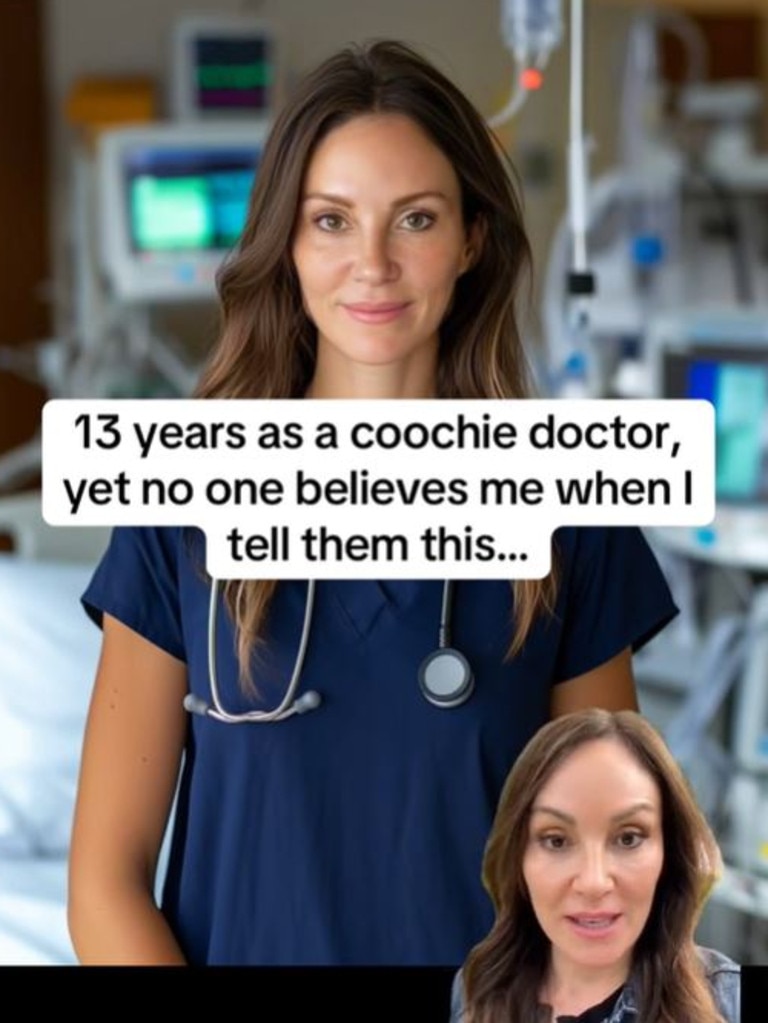

On TikTok, one search yields dozens of videos of women rattling off phrases like, “13 years as a coochie doctor and nobody believes me when I tell them this,” before dishing so-called health secrets for perky breasts, snatched stomachs, chiselled jawlines and balanced pH levels.

The videos often feature the same people, in a range of different doctor roles, all spewing unfounded medical advice.

Media Matters reported that the same gaggle of alleged deepfake characters have also appeared as salespeople for wellness products or claimed to have connections to Hollywood to dish insider gossip.

The discrepancies are enough to raise a few eyebrows.

Javon Ford, creator of his namesake beauty brand, recently revealed that the AI-generated personalities can be manipulated in an app called Captions, which bills itself as a tool to generate and edit talking AI videos. The company claims the app has 100,000 daily users and that more than 3 million videos are produced with it every month.

But Ford called the service “deeply insidious”.

“You might have noticed a few of these ‘creators’ on your ‘For You’ page. None of them are real,” he told the New York Post.

In a TikTok video, he showed how easy it is to create “fake” experts, scrolling through an exhaustive list of AI avatars that users can choose from, including a woman named “Violet,” who appears in many of the “coochie doctor” clips. He then demonstrated how a user can write a script and have the avatar regurgitate it.

Aghast users called the technology “very dangerous,” while some weighed the option of ditching social media altogether due to the “scary” reality of realistic deepfakes.

“I’ve seen Violet so many times,” one shocked viewer commented, while another agreed that they’ve seen her “say she’s a dentist and a nurse”.

“So that’s actually scary! Now that you point it out, I can see through it, but without the warning, I may have fallen for it!” someone else admitted.

As deepfakes proliferate online, creators have tried to educate viewers by highlighting ways to tell whether the person on their screen is real or AI-generated.

Ford, for one, called out the “mouth movements,” noting that the lips did not sync with the audio, which he said is the “first red flag”.

“It’s 2025,” he said in a TikTok. “Nobody should be having audio video lag issues.”

He added that claims that a product or natural remedy works better than whatever is commonly used should also raise alarms.

Ford also advised checking the account owner’s profile to see how many videos feature the so-called “doctor,” who has somehow been a gynaecologist, a proctologist and more over a mere 13 years.

“My, my, they’ve had a productive career,” he joked.

One user, Caleb Kruse, an expert in paid media, pointed out the telltale signs of an AI avatar in his own video, using another creator’s content as an example. That creator, a woman, later confirmed that while she is, in fact, a real person, the video in question was created with AI by a company that had asked to clone her likeness.

In addition to the unrealistic mouth movements, Kruse highlighted the woman’s eyes, which didn’t even blink, her awkward head movements and the overall vibe of the video, a feeling that “it’s not real”.

“The eyes are too big when they shouldn’t be — they’re not always reflecting exactly how a normal person might react when they say things,” he explained.

“Third, is the cadence, how she speaks, how the words between sentences flow,” he continued. “There’s sometimes these weird pauses that you wouldn’t normally say.”

The call-outs were a wake-up call for his followers.

“This should be illegal,” one dismayed viewer commented.

“It looks so real it’s horrifying,” another chimed in.

This article originally appeared on the New York Post and was reproduced with permission.