Scammer uses AI voice clone of Queensland Premier Steven Miles to run a Bitcoin investment con

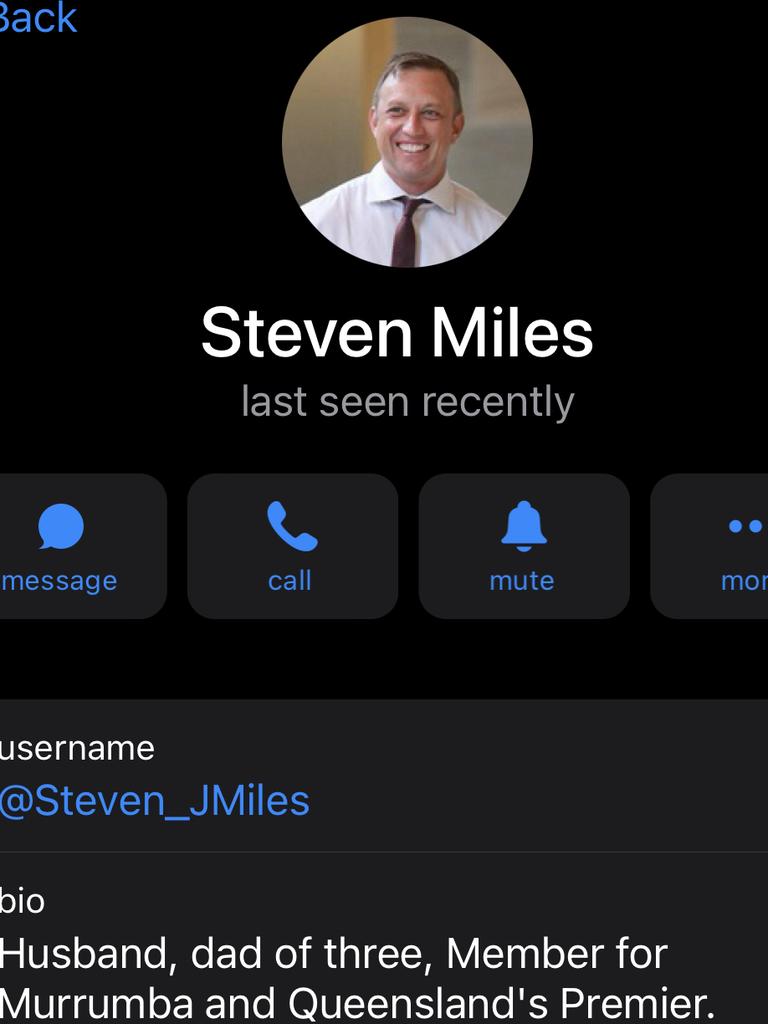

The voice of Queensland Premier Steven Miles has been cloned using artificial intelligence technology to produce an eerily accurate impersonation of the leader.

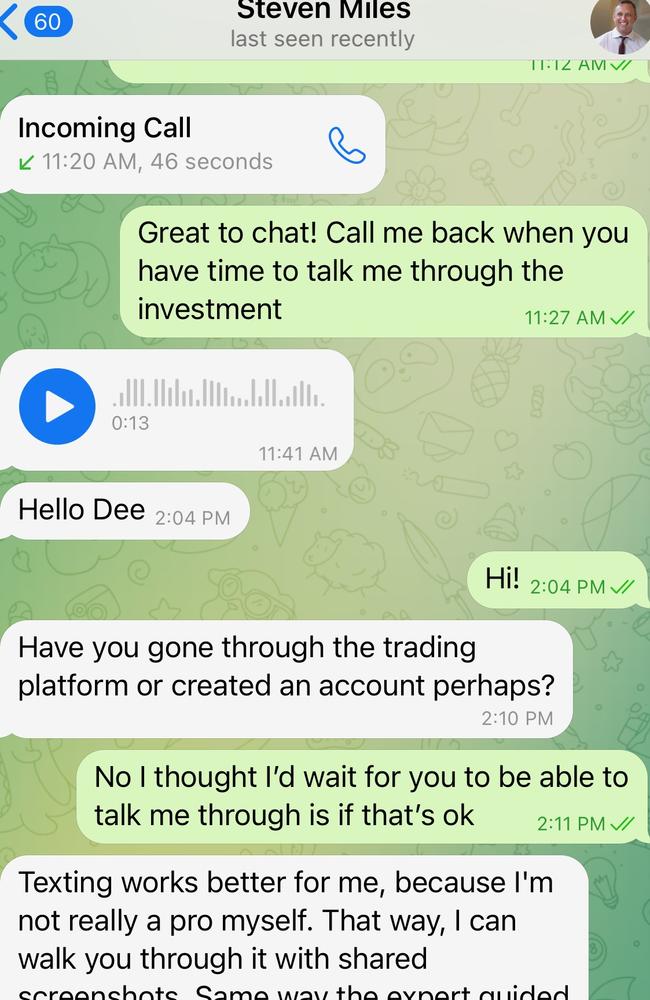

When advertising executive Dee Madigan received a private message on social media from Queensland Premier Steven Miles, she was immediately intrigued.

The pair have been friends for years, so Ms Madigan knew straight away that she wasn't really talking to the prominent political leader.

“I was stuck at home with Covid and bored, so I decided to have a little fun,” she told news.com.au.

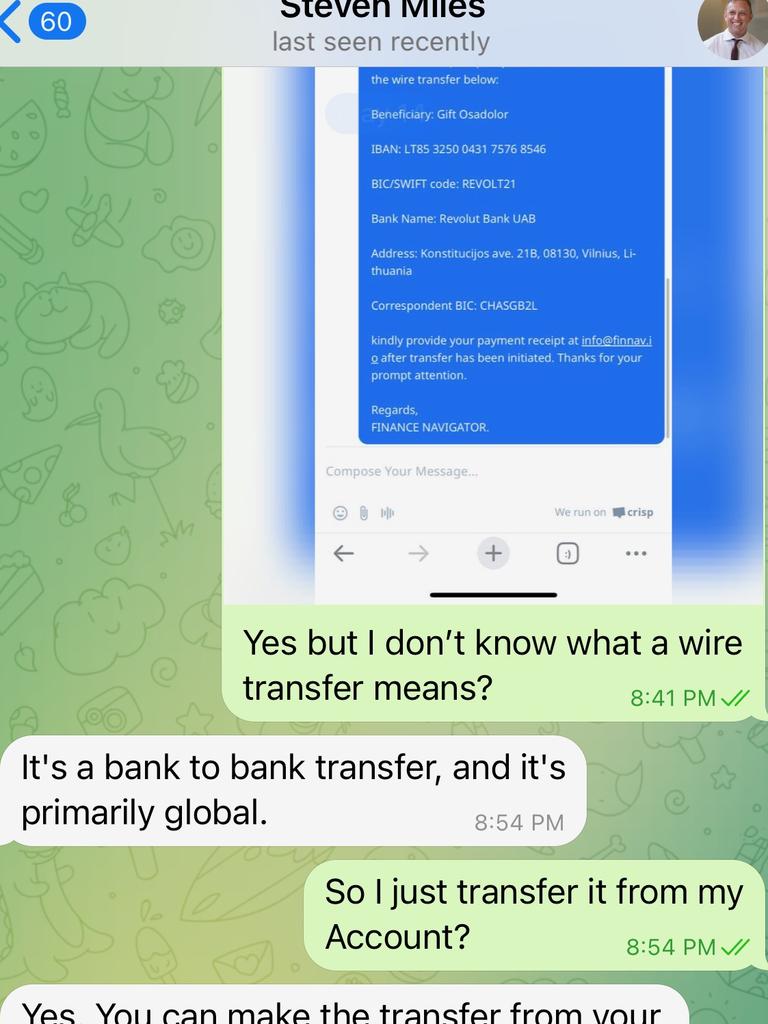

The person who had created a fake profile on X, formerly Twitter, was clearly a scammer and quickly began talking about financial investments.

“It was all pretty standard stuff,” Ms Madigan said. “I said I was interested in investing but pretended to have trouble setting up a trading account, just to annoy the guy.

“When I asked him to jump on a phone call with me to walk me through it, I figured that would be the end of it.”

And it was for a while – until her phone suddenly rang.

On the other end was Mr Miles – or rather, his voice – asking how she was and explaining that he was busy with meetings and couldn’t talk for long.

“It sounded like him,” she said. “A bit stunted and awkward, but it was his voice. It was pretty creepy.”

A few moments later, a voice message popped up on the encrypted chat platform Telegram, in which the scammer said: “Sorry for the rush, Dee. I’m about to enter a meeting but I just wanted to talk to you, as I haven’t been able to do that since I promised.

“Concerning the investment, I’ll definitely shoot you a text when I have some free time.”

Ms Madigan tried to record the phone call, but a screen capture didn’t include the audio of the exchange. She provided a copy of the voice message that followed to news.com.au.

“Someone who doesn’t know the technology might fall for something like this,” she said.

“A lot of friends of mine now have a code word so if they get a call from someone in their family that seems suspicious, they have to say it so they know it’s legitimate. I think that’s a good idea.”

It goes without saying, but Mr Miles is not moonlighting as an investment adviser or aspiring Bitcoin trader.

“The fake clip of what sounds like my voice is obviously terrifying – for me and for anyone who might accidentally be conned,” Mr Miles told news.com.au.

“The Queensland Government will never try to get you to invest in Bitcoin. If you come across a scam you should report it to scamwatch.gov.au.

“Queenslanders should know that there will be a lot of misinformation in the lead-up to the October election. Only get your news from reliable sources and if something seems off, fact check it with credible sources.

“If you’re ever in doubt head to qld.gov.au or contact my office.”

Consumer advocacy group Choice warned that a tsunami of AI voice scams like these is coming and that Aussies should be on high alert.

“Well-organised criminals are leveraging the latest technology to double down on established methods to create new cons,” Choice content producer Liam Kennedy said.

And it might not be a state premier you’ve never met reaching out.

“Harnessing the power of AI to impersonate the voices of our loved ones, these new scams have devastated victims overseas, getting around the defences of even seasoned scam-avoiders.”

NAB warned instances of phone calls from a loved one in distress who urgently needs money are on the rise internationally.

“While we haven’t had any reports of our customers being impacted by AI voice scams to date, we know they are happening in the UK and US, in particular, and anticipate it’s just a matter of time before these scams head down under,” NAB’s manager of advisory awareness Laura Hartley said.

Oli Buckley, an associate professor of cyber security at the University of East Anglia, said deepfake videos have gained notoriety over recent years.

“[There have been] a number of high-profile incidents, such as actress Emma Watson’s likeness being used in a series of suggestive adverts that appeared on Facebook and Instagram,” Mr Buckley wrote in analysis for The Conversation.

“There was also the widely shared – and debunked – video from 2022 in which Ukrainian president Volodymyr Zelensky appeared to tell Ukrainians to ‘lay down arms’.

“Now, the technology to create an audio deepfake, a realistic copy of a person’s voice, is becoming increasingly common.”

Creating a convincing copy of someone’s voice used to be a fairly involved process, requiring lots of audio sample recordings, Mr Buckley explained.

“The more examples of the person’s voice that you can feed into the algorithms, the better and more convincing the eventual copy will be.”

But rapid advancements in AI technology have sped up the process significantly.

“As the capabilities of AI expand, the lines between reality and fiction will increasingly blur,” Mr Buckley said.

“And it is not likely that we will be able to put the technology back in the box. This means that people will need to become more cautious.”

In April, OpenAI – the company behind ChatGPT – announced it had developed a tool that can accurately clone a voice with just 15 seconds of sample audio.

Voice Engine, which turns text prompts into speech, is so advanced that its wide release has been delayed.

“We are choosing to preview but not widely release this technology at this time,” OpenAI said.

It said it wants to “bolster societal resilience against the challenges brought by ever more convincing generative models”.

OpenAI conceded there were “serious risks” posed by the technology, “which are especially top-of-mind in an election year”.

In February, the Federal Communications Commission in the United States banned robocalls that use AI-generated voice audio, after an alarming incident the month before.

Robocalls to voters carried a voice message that seemed to come from President Joe Biden, urging those inclined to support the Democrats not to take part in the New Hampshire primary.

“Recently, a number of companies have popped up online offering impersonation as a service,” Joan Donovan, an expert in emerging media studies at Boston University, wrote for The Conversation.

“For users like you and me, it’s as easy as selecting a politician, celebrity or executive like Joe Biden, Donald Trump or Elon Musk from a menu and typing a script of what you want them to appear to say, and the website creates the deepfake automatically.

“Though the audio and video output is usually choppy and stilted, when the audio is delivered via a robocall it’s very believable. You could easily think you are hearing a recording of Joe Biden, but really, it’s machine-made misinformation.”

Mike Seymour is an expert in computer-human interfaces, deepfakes and AI at the University of Sydney, where he’s also a member of the Nano Institute.

“Other companies have been doing similar stuff with voice synthesis for years,” Dr Seymour told news.com.au. “This OpenAI news isn’t coming out of nowhere.”

What makes this announcement significant is the apparent high quality of the audio produced by Voice Engine and the fact that such a small sample is needed.

“I had my voice done a few years ago, and I had to provide something like two hours of material to train it on.

“Where we’ve moved to now is newer technology that has kind of a base training and then it has a specific training – and the specific training in this case is only 15 seconds long.

“That’s very powerful.”

In February, a company in Hong Kong was stung by a deepfake scam to the tune of HK$200 million (AU$40 million).

An employee working in the unnamed multinational corporation’s finance department attended a video conference call with people who looked and sounded like senior executives at the firm and made a sizeable funds transfer at their request.

Hong Kong Police acting senior superintendent Baron Chan told public broadcaster RTHK that the company clerk was instructed to complete 15 transactions to five local bank accounts.

“I believe the fraudster downloaded videos in advance and then used artificial intelligence to add fake voices to use in the video conference,” Mr Chan said.


“We can see from this case that fraudsters are able to use AI technology in online meetings, so people must be vigilant even in meetings with lots of participants.”

In the final three months of 2023, Aussies reported $82.1 million in losses to various scams.

Anyone concerned they may have been scammed is encouraged to file a report with the National Anti-Scam Centre’s Scamwatch service.
