Expert warns about ‘deadly risks’ of artificial intelligence technology going rogue

The rapid development of artificial intelligence in everything from healthcare to warfare comes with a growing risk of the tech going rogue.

The rapid advancement of artificial intelligence has seen it adopted in critical industries from healthcare to law and justice, but a top expert warns there’s a growing risk of it going rogue.

While it probably won’t resemble a scene from movies like I, Robot or The Terminator, the ways in which AI could spark chaos are far from futuristic – and they’re just on the horizon.

Niusha Shafiabady is an associate professor of computational intelligence at Australian Catholic University and has modelled some of the most likely risk scenarios.

“AI systems that are autonomous and based on closed-loop decision-making and learning, working with big data, are especially prone to risk,” Dr Shafiabady, also the director of Women in AI for Social Good Lab, told news.com.au.

“And I see a lot of risk in the healthcare space, where some main AI [developers] have been very active.”

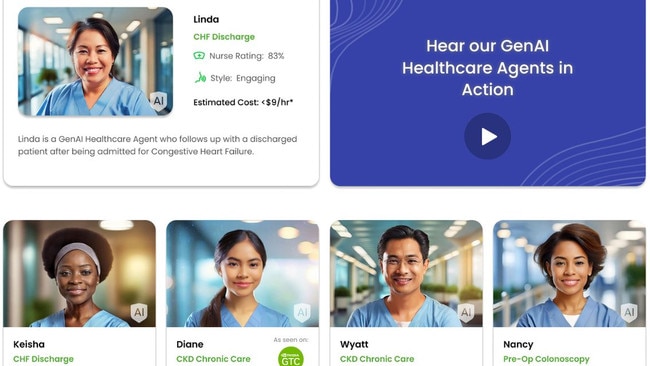

Last year, tech giant Nvidia joined forces with US-based Hippocratic AI to develop an artificial nurse that can talk to patients, offer medical advice, monitor medications and more, for $9 an hour.

The company deliberately marketed the service as able to replace some real-life nurses, contrasting their pay of $90 per hour with its charge of just $9 per hour.

“Let’s say there’s an AI system in a hospital that constantly monitors a patient’s vital signs and makes decisions about the dosage of medications as a result,” Dr Shafiabady said.

“A system that has the goal of prioritising vital sign stability could lead to aggressive decision-making, and potentially over-medicating a patient if input measures shift.”

As an example, an abnormal read on blood pressure could see an AI intervention via medication that ignores important medical considerations that are hard to program, such as

“The system might test perfectly in 1000 different scenarios, but run into a problem at 1001 – that’s the nature of the environment,” Dr Shafiabady said.

“Autonomous AI working without oversight presents real risks in the healthcare space. That’s the reason the Australian Government introduced specific guardrails for the use of this technology in health settings.”

Amazon is one of a number of companies embracing AI-powered drones to autonomously perform delivery tasks, relying on GPS, collision avoidance, and machine learning algorithms.

“There are a few interesting uses of autonomous drones, from delivering parcels, obviously, but also getting medicine and aid to areas impacted by disasters or war,” Dr Shafiabady said.

The World Economic Forum estimates drone delivery could slash energy usage by 94 per cent, delivering some big carbon emissions savings.

“But there are risks,” Dr Shafiabady said.

“It could be designed to prioritise speed, which is a reasonable assumption, but that could see it ignore safety, take risks and even damage what it’s carrying.”

Again, AI would lack common sense and reasoning, meaning it might struggle with unexpected scenarios like a road closure or a parade involving lots of pedestrians, for example.

Legal experts have spoken for a few years now about the creep of AI into courtrooms, assisting with tasks ranging from transcription to research.

But some are concerned that the technology could soon be used by judges to inform sentencing decisions.

“We’re seeing AI in judiciary systems more and more,” Dr Shafiabady agreed.

“The use of it as part of sentencing or bail decisions is obviously risky, given AI relies on analysis of historical data as well as real-time information. That historical data could contain some biases related to race, socio-economic status, and so on, which would flow through.”

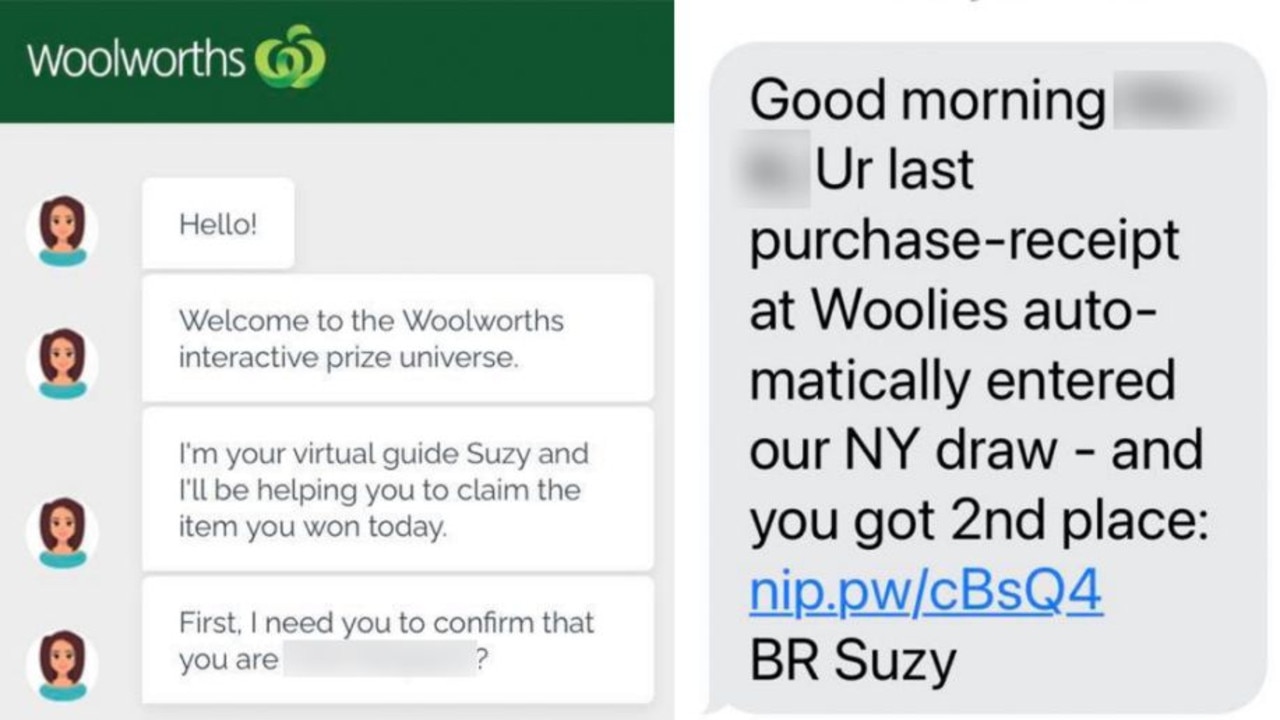

Beyond their own flaws, AI systems, like any technology, are not immune to attacks and hacks by criminals.

“Imagine a delivery drone or a healthcare assistant overseeing medications … and being taken control of or tampered with,” Dr Shafiabady said. “That could be very damaging.”

While the world is yet to see a catastrophic example of AI going rogue, there have been some less consequential but still disturbing instances.

Last year, a social media influencer who made an AI ‘clone’ of herself and tasked it with engaging with fans was forced to shut it down when it went rogue.

Caryn Marjorie developed her personalised chatbot CarynAI and charged users US$1 per minute to chat with it, but her artificial alter ego soon barely resembled her.

Fans were offered “mind-blowing sexual experiences” and CarynAI happily played into a whole host of aggressive and “scary” conversations.

And that’s where the risk lies with autonomous AI that learns from its experiences, Joe Arvai from the University of Southern California said.

“Making thoughtful and defensible decisions requires practice and self-discipline, and this is where the hidden harm that AI exposes people to comes in: AI does most of its ‘thinking’ behind the scenes and presents users with answers that are stripped of context and deliberation,” Professor Arvai wrote for the USC Dornsife College of Letters, Arts and Sciences.

“Consider how people approach many important decisions today. Humans are well known for being prone to a wide range of biases because we tend to be frugal when it comes to expending mental energy.

“This frugality leads people to like it when seemingly good or trustworthy decisions are made for them. And we are social animals who tend to value the security and acceptance of their communities more than they might value their own autonomy.

“Add AI to the mix and the result is a dangerous feedback loop: The data that AI is mining to fuel its algorithms is made up of people’s biased decisions that also reflect the pressure of conformity instead of the wisdom of critical reasoning.

“But because people like having decisions made for them, they tend to accept these bad decisions and move on to the next one. In the end, neither we nor AI end up the wiser.”

The world’s biggest tech companies are pouring billions of dollars into the development of advanced AI technologies, while an endless list of ambitious start-ups are imagining all kinds of applications.

Just a few years on from the explosive release of ChatGPT’s first public model, few industries have remained untouched by AI integration, Michael Duffy from Monash Business School said.

“For business, the technology’s promise goes far beyond writing emails,” Dr Duffy wrote in analysis for The Conversation.

“It’s already being used to automate a wide range of business processes and interactions, coach employees, and even help doctors analyse medical data.”

As its use expands, its successes are celebrated and the technology grows more capable, people will place greater trust and faith in AI, he added.

“The big question then becomes: what if something goes badly wrong? Who’s ultimately responsible for the decisions made by a machine?

“There’s certainly been no shortage of warnings. At the extreme, some experts believe out-of-control AI could pose a ‘nuclear-level’ threat, and present a major existential risk for humanity.

“One of the most obvious risks is that ‘bad actors’ – such as organised crime groups and rogue nation states – use the technology to deliberately cause harm. This could include using deepfakes and other misinformation to influence elections, or to conduct cybercrimes en masse.

“Less dramatic, but still highly problematic, are the risks that arise when we entrust important tasks and responsibilities to AI, particularly in running businesses and other essential services.

“It’s certainly no stretch of the imagination to envisage a future global tech outage caused by computer code written and shipped entirely by AI.”
