Artificial intelligence experts continue to raise alarm about the existential risk of the global AI arms race

The UN has put its foot down as AI companies continue to blast full steam ahead towards a so-called utopia. But we may already be too late.

The United Nations has pushed back against letting the unchecked powers of artificial intelligence rule the roost, urging global cooperation instead of simply letting market forces steer the way forward.

In a report published ahead of the UN’s highly anticipated “Summit of the Future,” experts are sounding the alarm about the current lack of international oversight on AI, a technology that’s stirring up concerns around misuse, biases, and humanity’s growing dependence on it.

Numerous figures in the AI field have already sounded the alarm on the frightening global race towards technological supremacy, loosely comparing it to the frantic efforts in the 1940s to produce the world’s first nuclear bomb.

Geoffrey Hinton, known as the “godfather of AI”, famously quit Google in 2023 so he could speak freely about the technology’s risks, warning we could be walking into a “nightmare”.

While the immediate benefits are already being seen in terms of productivity, the main concern is that we are charging full steam ahead towards an event horizon whose outcome is impossible to predict.

What we do know is that those spearheading AI development are becoming absurdly wealthy incredibly quickly and thus hold more and more power over the trajectory of the planet as each day passes.

Around 40 experts, spanning technology, law, and data protection, were gathered by UN Secretary-General Antonio Guterres to tackle the existential issue head-on. They say that AI’s global, border-crossing nature makes governance a mess, and we’re missing the tools needed to address the chaos.

The panel’s report drops a sobering reminder, warning that if we wait until AI presents an undeniable threat, it could already be too late to mount a proper defence.

“There is, today, a global governance deficit with respect to AI,” the panel of experts warned in their report, stressing that the technology needs to “serve humanity equitably and safely”.

Guterres chimed in with his own concerns this week, declaring that the unchecked dangers of AI could have massive ripple effects on democracy, peace, and global stability.

The report also called for a new scientific body, modelled after the Intergovernmental Panel on Climate Change (IPCC), to keep the world up-to-date on AI risks and solutions.

A dream team of AI experts would pinpoint new dangers, guide research, and explore how AI can be harnessed for good, like tackling global hunger, poverty, and gender inequality.

The proposal for this group of AI brains is already being discussed as part of the draft Global Digital Compact, which could get the green light during Sunday’s summit.

But while Guterres is pushing for an AI watchdog in the vein of the UN’s nuclear watchdog, the International Atomic Energy Agency (IAEA), the report didn’t go that far. Instead, it recommends a lighter “co-ordination” structure within the UN secretariat for now.

The panel acknowledged that if AI risks become more concentrated and serious, a beefier, full-on global AI institution might be necessary to handle monitoring, reporting, and enforcement duties.

The risks of generative AI content have already been made abundantly clear, especially in regard to deepfakes and voice replication. With increasingly lifelike video and image generation, the task of quickly separating fact from fabrication is becoming more challenging by the day.

The potential for scams targeting the elderly has surged, while journalists have to come to terms with the fact that some videos of major events they view online could have been altered by AI to push certain agendas.

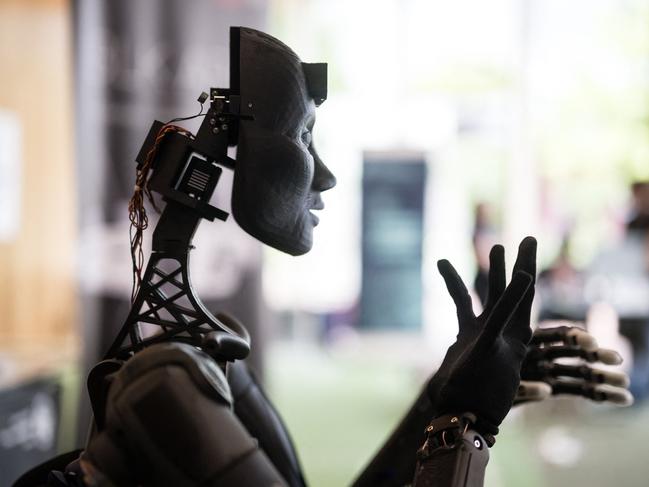

Nevertheless, tech giants are now racing to achieve artificial general intelligence (AGI), which, in theory, could understand the world as well as humans do and teach itself new information at a rapid pace. Once that is achieved, there is no telling how fast it will evolve and whether its actions will always be in the best interests of human beings.

Speaking at a May AI Summit in Seoul, leading scientist Max Tegmark stressed the urgent need for strict regulation on the creators of the most advanced AI programs before it’s too late.

He said that once we have made AI that is indistinguishable from a human being in conversation, otherwise known as passing the “Turing test”, there is a real threat we could “lose control” of it.

“In 1942, Enrico Fermi built the first ever reactor with a self-sustaining nuclear chain reaction under a Chicago football field,” Tegmark said.

“When the top physicists at the time found out about that, they really freaked out, because they realised that the single biggest hurdle remaining to building a nuclear bomb had just been overcome. They realised that it was just a few years away – and in fact, it was three years, with the Trinity test in 1945.

“AI models that can pass the Turing test are the same warning for the kind of AI that you can lose control over. That’s why you get people like Geoffrey Hinton and Yoshua Bengio – and even a lot of tech CEOs, at least in private – freaking out now.”

There is also a real concern that AI will cause widespread job losses globally.

Last year, the World Economic Forum’s Future of Jobs Report predicted that 23 per cent of jobs will go through a tectonic AI shift in the next five years.

The report summed up the next chapter in one word. Disruption.

The paper said that advancements in technology and digitisation are at the forefront of this labour market upheaval.

Of the 673 million jobs reflected in the dataset in the report, respondents expect structural job growth of 69 million jobs and a decline of 83 million jobs.
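
For readers who want to check the sums, the figures quoted above can be worked through in a few lines of Python. This is only a rough sketch using the three numbers cited from the report; the variable and function names are made up for illustration, and reading "churn" as jobs created plus jobs lost is an assumption about how such a headline percentage might be derived, not a statement of the report's method.

```python
# Figures quoted from the report, in millions of jobs.
TOTAL_JOBS = 673
JOBS_CREATED = 69
JOBS_LOST = 83


def share_of_total(amount, total):
    """Return amount as a percentage of total."""
    return 100 * amount / total


# Net change: jobs created minus jobs lost (negative means a net loss).
net_change = JOBS_CREATED - JOBS_LOST

# Churn (assumed definition): every job either created or lost.
churn = JOBS_CREATED + JOBS_LOST

print(f"Net change: {net_change} million "
      f"({share_of_total(net_change, TOTAL_JOBS):.1f}% of jobs in the dataset)")
print(f"Churn: {churn} million "
      f"({share_of_total(churn, TOTAL_JOBS):.1f}% of jobs in the dataset)")
```

On these numbers the expected net result is a loss of 14 million jobs, roughly 2 per cent of the dataset, while the combined total of jobs created and lost comes to 152 million, a little under 23 per cent.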

The data claims 42 per cent of business tasks will be automated by 2027, estimating that 44 per cent of the current workforce’s skills “will be disrupted in the next five years”, with as many as 60 per cent of workers “requiring more training” over the same period.

Originally published as Artificial intelligence experts continue to raise alarm about the existential risk of the global AI arms race