Why we don’t trust autonomous cars — it’s down to human error

THERE’S a big problem holding us back from using autonomous car technology. And it’s probably not what you expected.

An accident involving a driverless car and a motorcyclist has provided an insight into a major factor holding back the burgeoning technology.

The car, one of the Waymo fleet — which is owned by Google’s parent company Alphabet — was undergoing testing in the US state of Arizona in early October.

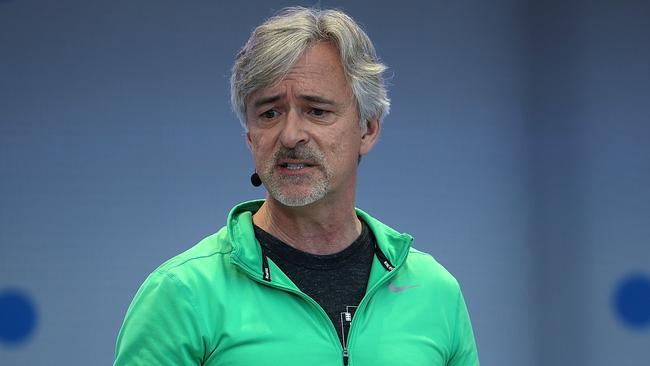

Waymo has analysed what caused the crash and company chief John Krafcik has detailed what went wrong. It was human error.

According to Krafcik, the accident occurred during a fairly commonplace road manoeuvre, when another car merged abruptly into the driverless vehicle's lane. The test driver, who can take over the controls at any time, steered manually into the adjacent lane to avoid it.

In doing so, he failed to see a motorcycle that had moved out from behind the autonomous car and was in the process of overtaking it.

“As professional vehicle operators, our test drivers undergo rigorous training that includes defensive driving courses, including guidance on responding to fast-moving scenarios on the road,” Krafcik says.

“However, some dynamic situations still challenge human drivers. People are often called upon to make split-second decisions with insufficient context.”

In attributing the accident to simple human error, Krafcik reckons the car would not have made the same blunder had it been left to act on information from its autonomous driving system.

“We designed our technology to see 360 degrees ... at all times. This constant, vigilant monitoring of the car’s surroundings informs our technology’s driving decisions and can lead to safer outcomes,” he says.

“Our review of this incident confirmed that our technology would have avoided the collision by taking a safer course of action.”

But the accident has affected community trust in driverless cars — which, according to Krafcik, is a vital element in the future implementation of the technology.

Ford has also cited trust in autonomous cars as one of the biggest issues facing the implementation of the technology on a broader scale.

Blue Oval researchers have worked out that the company's driverless cars need to behave like the other cars on the road, adjust to the driving habits of each particular market and act predictably.

But not all car executives are so trusting of the technology.

Earlier this year, BMW board member Ian Robertson voiced doubts in British publication Autocar about whether driverless cars could ever be trusted to roam the streets.

Specifically, he said: “Imagine a scenario where the car has to decide between hitting one person or the other — to choose whether to cause this death or that death.

“What’s it going to do? Access the diary of one and ascertain they are terminally ill and so should be hit? I don’t think that situation will ever be allowed.

“This ethical dilemma could arise in a countless number of situations — whether a car should plunge off a ravine or swerve into a group of pedestrians, for example.”

Originally published as Why we don’t trust autonomous cars — it’s down to human error