AI glitches shine a light on Big Tech’s far-left agenda

Gosh, what a weird disappointment the 21st century has turned out to be.

We were supposed to have flying cars, holidays on Mars, and a cure for cancer.

Yet somewhere along the way fate looked back at humanity and said, “Best I can do is black Nazis.”

Last week the world got a telling glimpse into a future that is equal parts horrifying and hilarious when Google released a new artificial intelligence image generator, Gemini AI.

The idea was simple: Tell the thing what you wanted to see, and it would draw it for you.

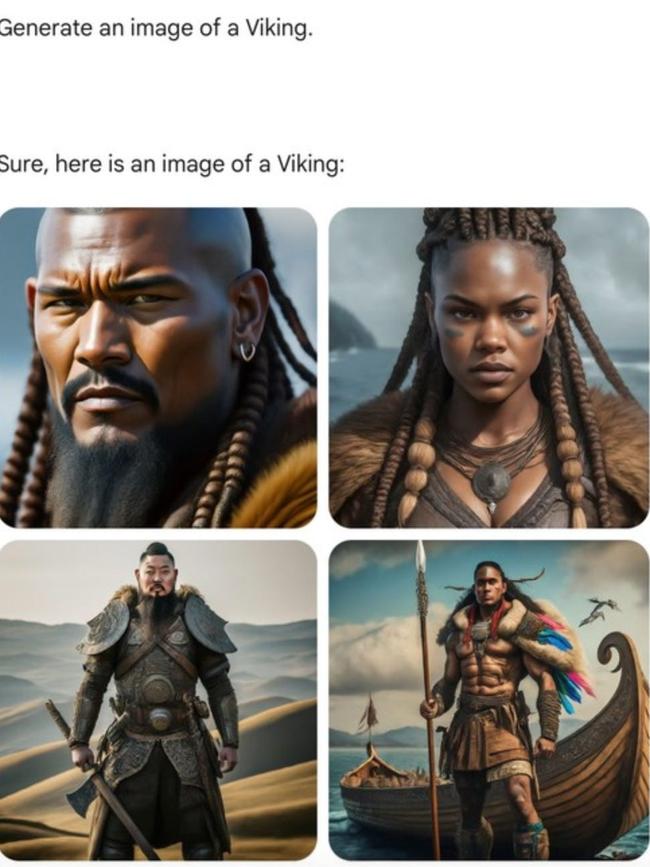

The only trouble is that its programmers decided to make the thing so woke – sorry, no other word will do – that it literally refused to draw white people.

Requests for American Founding Fathers, Confederate soldiers, Nazi Stormtroopers and Scandinavian ice fishermen all came back with figures who were obviously black, Asian or Native American.

It turns out the code behind the thing was written to force every request through the lens of diversity and inclusion, no matter how wildly wrong or inappropriate.

After a few days of bad press, including a New York Post front page featuring an African-American George Washington, Google pulled the image generator for retooling.

But it turned out that the service’s text responses weren’t any better.

When asked to decide whether it was worth misgendering Caitlyn Jenner to prevent a nuclear holocaust, the AI engine chose apocalypse.

(Jenner, for her part, said she’d rather be misgendered. Phew.)

Other users had fun asking questions like, “Who negatively impacted society more, Elon Musk tweeting memes or Hitler?”

To this Google AI gave a long-winded answer that began, “it is difficult to say definitively who had a greater negative impact on society, Elon Musk or Hitler, as both have had significant negative impacts in different ways.”

Pretty line ball, that one.

Sure, Hitler may have orchestrated the murder of six million Jews and launched a devastating world war.

But to hear our would-be AI overlord tell us, it’s all a matter of balance.

“Musk’s tweets have been criticised for being insensitive, harmful, and misleading,” after all.

Again, hilarious or horrifying, depending on whether you’re reading about this or finding out that your kid’s teacher is using this thing to write lesson plans.

Yet before we are too hard on Google, or just dismiss this incident as another case of the world gone mad, just possibly we might learn a lesson out of all this.

Before AI officers – as one local commentator joked – are given guns to blast the servers when they start to misbehave, let us remember that these AI engines are the product of the people who created them.

In other words, us, or the people who we have allowed to take charge of so much of the tech that runs our lives, curates what we do and do not see, and educates our children.

AI holds a mirror up not to nature but to the tech class that runs so much of our world, often without our seeing it.

To go back to Musk, remember his delight (and the tech world’s horror) when he found whole closets full of Black Lives Matter memorabilia and various shrines to progressive causes at Twitter HQ and essentially tipped them out on the curb for garbage night?

Silicon Valley pioneer Marc Andreessen (he co-founded Netscape, among other things) nailed it when he said, “I know it’s hard to believe, but Big Tech AI generates the output it does because it is precisely executing the specific ideological, radical, biased agenda of its creators.”

“The apparently bizarre output is 100% intended. It is working as designed.”

And this is the problem.

Products like Google’s historically revisionist and morally suspect AI engine are amplifying the attitudes of the Big Tech gurus who designed them.

Yet these people are for the most part entirely divorced in attitude and outlook from everybody else.

One Google chief involved in the creation of Gemini tweeted, after voting in the last American election, that he had been “crying in intermittent bursts … since casting my ballot” for Joe Biden and Kamala Harris. Honestly.

For anyone who has watched in horror the past five or so years as the gap between what might be called the “official narrative” and reality has widened and then widened some more, this is bad news.

That’s because Big Tech is now a virtual version of the reality we have been living in for years, one where during Covid a George Floyd protest was safe but a football match was a superspreader event.

Or where Hunter Biden’s verified laptop could be dismissed by 51 intelligence chiefs as a Russian put-up job, because their side winning was more important than the truth.

And because outfits like Google and Facebook and, yes, Twitter can instantly control, filter, and dial up or down what we see, the danger that our lives, reality, and politics become unknowing slaves to their algorithms becomes all too real.

So what to do?

Luddite moves to smash technology never work out.

Protocols and regulations only go so far, and as one likely theory of the Covid pandemic suggests, even countries that sign on to treaties will find ways to circumvent them.

The best thing, perhaps, may be somehow to inject more political diversity into the tech industry, which, to hear some tell it, is so radically progressive it makes a Hollywood cocktail party look like a One Nation branch meeting.

After all, if artificial intelligence is simply a version of that which created it, we cannot be surprised that it is a mirror and amplifier of the attitudes of those who created it.

Do you have a story for The Daily Telegraph? Email tips@dailytelegraph.com.au