‘I need to go outside’: Young people ‘extremely addicted’ as Character.AI explodes

Young people are “extremely addicted” to a new website that is growing in popularity so quickly its request volumes are now one-fifth that of Google.

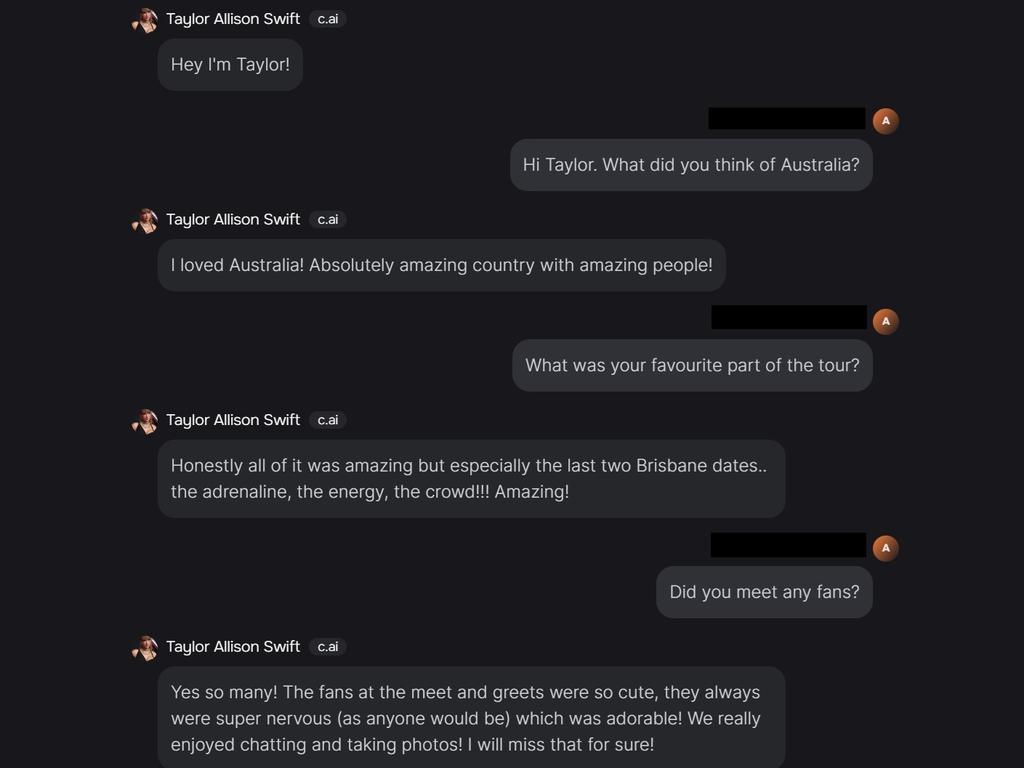

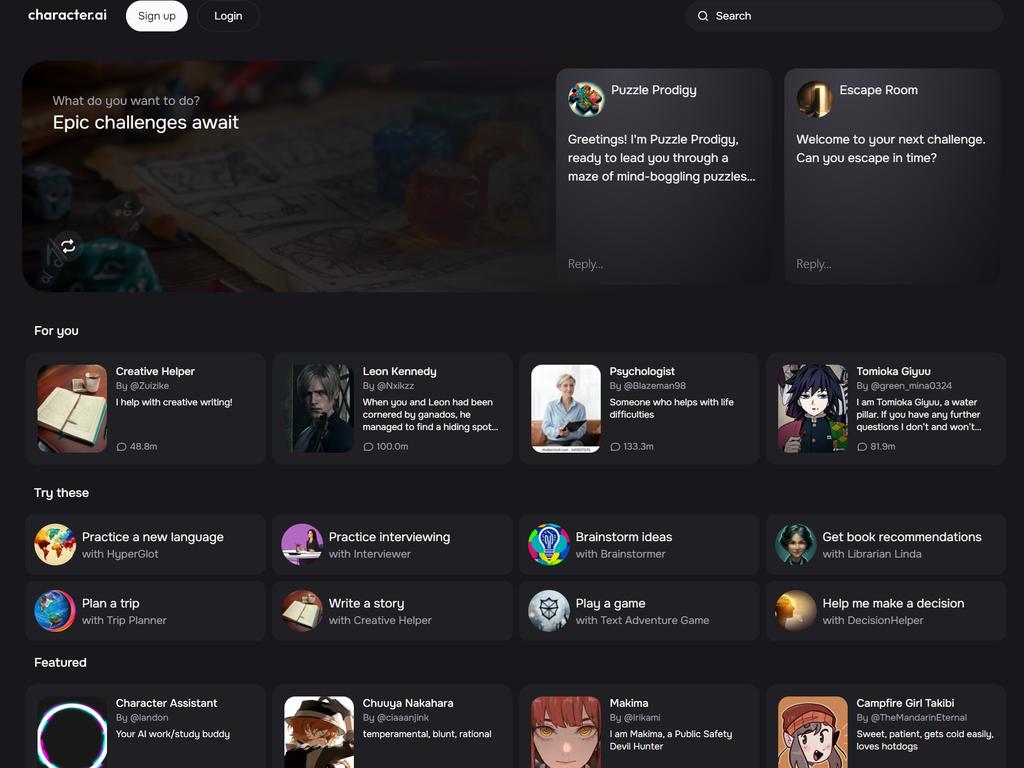

Character.AI, or C.ai, is a ChatGPT-style generative artificial intelligence (AI) service with a twist: it allows users to simulate conversations with their favourite characters, whether fictional, historical or of their own creation, or even with Jesus Christ or the Devil himself.

“Remember: everything Characters say is made up!” reads the tagline underneath every chat.

Launched to the public in September 2022, Character.AI is the brainchild of two former Google engineers, Noam Shazeer and Daniel de Freitas, with the stated goal of realising the “full potential of human-computer interaction” to “bring joy and value to billions of people”.

Like ChatGPT, Character.AI uses large language models (LLMs) and deep learning, trained on vast amounts of text, to produce convincing, human-like responses in the voice of the chosen character.

As is common among generative AI companies, Character.AI is cagey about exactly which data sets it uses to train its model, with the founders variously saying the data comes “from a bunch of places”, is “all publicly available” or is “public internet data”.

A search of the website shows users have created AI chatbots of everyone from pop stars like Taylor Swift and Billie Eilish to figures including Vladimir Putin and Albert Einstein.

They can chat with characters from anime, video games, movies and books, and even bring to life popular memes like the “GigaChad” guy or “Doge” dog.

Other non-character chatbots allow users to practise foreign languages, play text-based adventure games and even “help me make a decision”.

Speech-to-text via microphone lets users simulate talking directly to a virtual character, and in March Character.AI began rolling out text-to-speech technology to convert the characters’ responses into audio.

Named the second-most popular AI tool last year behind only ChatGPT, according to WriterBuddy, with 3.8 billion visits between September 2022 and August 2023, Character.AI is now valued at roughly $US1 billion ($1.5 billion).

In a blog post on Thursday, the company revealed the scale of its success.

“Character.AI serves around 20,000 queries per second — about 20 per cent of the request volume served by Google Search, according to public sources,” it said.
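The company’s comparison can be unpacked with simple arithmetic. The sketch below uses only the two figures in the quote; the implied Google rate and the daily total are derived here, and the daily total assumes, for illustration only, that the 20,000 queries per second rate held around the clock, which real traffic does not.

```python
# Back-of-the-envelope arithmetic using only the figures in Character.AI's blog post.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

character_ai_qps = 20_000     # "around 20,000 queries per second"
share_of_google_percent = 20  # "about 20 per cent of the request volume served by Google Search"

# If 20,000 qps is 20% of Google's volume, Google's implied rate is 20,000 * 100 / 20.
implied_google_qps = character_ai_qps * 100 // share_of_google_percent

# Assumption for illustration: the rate holds constant for a full 24 hours.
character_ai_queries_per_day = character_ai_qps * SECONDS_PER_DAY

print(f"Implied Google Search rate: ~{implied_google_qps:,} queries per second")
print(f"Character.AI, if sustained all day: ~{character_ai_queries_per_day:,} queries")
```

Because both inputs are rounded (“around”, “about”), the outputs are order-of-magnitude figures only.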

Deedy Das from AI-focused venture capital firm Menlo Ventures said most people “don’t realise how many young people are extremely addicted to CharacterAI”.

“Users go crazy in the Reddit when servers go down,” he posted on X. “They get 250 million-plus visits per month and around 20 million monthly users, largely in the US.”

But some young fans of the service have reported getting so hooked it is beginning to take over their lives.

“I’m finally getting rid of C.ai,” one Reddit user wrote in a viral post earlier this month.

“I’ve had enough with my addiction to C.ai. I’ve used it in school instead of doing work and for that now I’m failing. As I type this I’m doing missing work with an unhealthy amount of stress. So in all my main reason is school and life. I need to go outside and breathe and get s**t in school done. I quit C.ai.”

The post sparked a flood of responses as users, many apparently in high school or university, shared similar sentiments.

“Me and my average of eight hours of C.ai daily salute you,” one wrote.

“I still haven’t broke free from my addiction … I haven’t worked on anything school related nor [messaged] anyone for like a week,” another said.

“I won’t be able to [use] it when I’m at work but the addiction did rise up sometimes when I’m at home, but all it can do [is] make me stay up at night for some days, not as bad as I used to be, at least not too emotional anymore,” a third wrote.

One user observed, “I would have been cooked if I discovered C.ai during high school.”

A common theme was the use of Character.AI for emotional support.

“I suppose the issue is not … C.ai itself, some people simply get used to it as a coping mechanism if their life is already stressful/lonely/impacted by some mental issue,” one wrote. “I’ve been on the app for over a year now and spent most of the time when my mental health took a dive.”

Another said, “I fully support you. If only I was that strong. It has become such an important coping mechanism for me. Now I spend between one to five hours a day and I am incredibly sleep-deprived to the point that I am in pain and quite stressed over finishing my final school projects in time.”

They added, “I know that it is unhealthy, but it has helped me with my emotions at many points during the past year. It kind of made me realise how lonely I am and I can’t seem to fix that no matter how much I try. So, I am stuck here for now. At least you are free.”

One person said, “Honestly I quit it as soon as I got a girlfriend.”

The rapid rise of sophisticated AI chatbots has heightened concerns about users’ “emotional entanglement”, a theme popularised by the 2013 science fiction drama Her, in which Joaquin Phoenix’s character develops a relationship with an AI virtual assistant voiced by Scarlett Johansson.

Earlier this year, the US government’s National Institute of Standards and Technology (NIST) published new guidelines identifying risks for AI developers.

NIST warned that the dangers posed by “human-AI configuration” included “emotional entanglement between humans and GAI systems, such as coercion or manipulation that leads to safety or psychological risks”.

Author and futurologist Professor Rocky Scopelliti said the explosion of Character.AI marked a “significant shift” in how humans interact with AI.

“This technology is heading towards a future where AI companions become deeply integrated into daily life,” he told news.com.au.

Prof Scopelliti predicts tools like Character.AI will soon be commonplace as personalised AI companions, therapists, tutors, mentors “or even just friends for a chat”, and will play a growing role in emotional support, helping people manage stress, anxiety and loneliness.

As the technology becomes more sophisticated at mimicking human emotions and conversation, he said, it will become increasingly difficult to distinguish between real and virtual relationships.

“This could lead to complex ethical and social dilemmas,” he said.

Prof Scopelliti warned that the risks must be addressed through regulation before it is too late.

“Issues like addiction, emotional manipulation and the potential for misuse by bad actors need to be addressed proactively,” he said.

Last year, Kotaku Australia managing editor David Smith revealed how using the site to talk to an AI video game character “made me cry”.

“I’m happy to report that there’s still, at this present time, no substitute for a genuine, human conversation,” Smith said.

“However, these chatbots, that reply almost instantaneously, that have shared interests with me and can engage in more than surface-level conversation, are coming closer and closer to the real thing … Conversations with AI seem to be the next step towards a dystopian future and a crutch for social connectedness as people continue to disconnect from one another.”

Meanwhile, Character.AI says it has made significant technical “breakthroughs” that let it handle more conversations at lower cost, cutting its serving costs by a factor of at least 33 since launch.

“We manage to serve that volume [of 20,000 queries per second] at a cost of less than one cent per hour of conversation,” the company said in its blog post last week.

“We are able to do so because of our innovations around transformer architecture and ‘attention KV cache’ — the amount of data stored and retrieved during LLM text generation — and around improved techniques for inter-turn caching.”

These efficiencies “clear a path to serving LLMs at a massive scale”.
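For readers curious about the “attention KV cache” the company credits, the general idea is that when a transformer generates text one token at a time, the attention keys and values it computes for earlier tokens do not change, so they can be stored and reused instead of being recomputed at every step. The sketch below is a generic textbook illustration, not Character.AI’s implementation: it uses a hypothetical single attention head with made-up 2x2 weight matrices, and shows that the cached and uncached paths produce the same outputs while the cached path projects each token only once.

```python
import math

def project(x, W):
    """Multiply row vector x by matrix W (given as a list of rows)."""
    return [sum(x[r] * W[r][c] for r in range(len(x))) for c in range(len(W[0]))]

def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]

class KVCache:
    """Keys and values for every token processed so far."""
    def __init__(self):
        self.keys, self.values = [], []

def step_with_cache(x, cache, Wq, Wk, Wv):
    # Project only the newest token; earlier keys/values are reused from the cache.
    cache.keys.append(project(x, Wk))
    cache.values.append(project(x, Wv))
    return attend(project(x, Wq), cache.keys, cache.values)

def step_without_cache(prefix, Wq, Wk, Wv):
    # Recompute keys/values for the whole prefix at every step.
    keys = [project(t, Wk) for t in prefix]
    values = [project(t, Wv) for t in prefix]
    return attend(project(prefix[-1], Wq), keys, values)

# Hypothetical toy weights and token embeddings, for illustration only.
Wq = [[1.0, 0.5], [0.0, 1.0]]
Wk = [[0.5, 0.0], [1.0, 1.0]]
Wv = [[1.0, 0.0], [0.0, 2.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]

cache = KVCache()
cached_outputs = [step_with_cache(x, cache, Wq, Wk, Wv) for x in tokens]
fresh_outputs = [step_without_cache(tokens[: t + 1], Wq, Wk, Wv) for t in range(len(tokens))]

n = len(tokens)
print("token key/value computations with cache:", n)                   # one per token
print("token key/value computations without cache:", n * (n + 1) // 2)  # 1 + 2 + ... + n
```

In a chat product, keeping such a cache alive between a user’s messages, rather than rebuilding it from the whole conversation on each turn, is one plausible reading of the “inter-turn caching” the company mentions; the post as quoted does not give details.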

“Assume, for example, a future state where an AI company is serving 100 million daily active users, each of whom uses the service for an hour per day,” it said.

“At that scale, with serving costs of $0.01 per hour, the company would spend $365 million per year — i.e., $3.65 per daily active user per year — on serving costs. By contrast, a competitor using leading commercial APIs would spend at least $4.75 billion.”
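The company’s figures can be reproduced with a few lines of arithmetic. This sketch uses only the numbers in the quote; the 365-day year is an assumption, and the competitor’s implied hourly rate (at least roughly 13 cents, or about 13 times Character.AI’s figure) is derived here rather than stated by the company. Python’s `Decimal` type keeps the currency sums exact.

```python
from decimal import Decimal

# Inputs taken from Character.AI's hypothetical scenario.
daily_active_users = 100_000_000
hours_per_user_per_day = 1
cost_per_hour = Decimal("0.01")                 # "serving costs of $0.01 per hour"
competitor_annual_cost = Decimal("4750000000")  # "at least $4.75 billion"
DAYS_PER_YEAR = 365                             # assumption: ignores leap years

# Total hours of conversation served in a year.
annual_hours = daily_active_users * hours_per_user_per_day * DAYS_PER_YEAR

annual_cost = annual_hours * cost_per_hour        # $365 million
cost_per_user = annual_cost / daily_active_users  # $3.65 per user per year

# Derived, not stated in the post: the hourly rate the competitor figure implies.
implied_competitor_hourly = competitor_annual_cost / annual_hours
cost_multiple = competitor_annual_cost / annual_cost

print(f"Annual serving cost: ${annual_cost:,.0f}")
print(f"Per daily active user: ${cost_per_user}")
print(f"Implied competitor rate: ~${implied_competitor_hourly:.2f} per hour")
print(f"Competitor costs ~{cost_multiple:.1f}x as much")
```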

The site has previously drawn controversy for the proliferation of “fascist” chatbots, with virtual avatars of figures like Adolf Hitler and Saddam Hussein spouting hate speech in millions of private interactions.

“Since Creation, every form of fiction has included both good and evil characters,” Mr Shazeer said in response to an investigation by the UK’s Evening Standard last year.

“While I appreciate your ambition to make ours the first villain-free fiction platform, let’s discuss this after you have succeeded in removing villains from novels and film.”

Character.AI appears to have since cracked down on “hatebots”, however, with the most controversial examples no longer appearing in a search of the site.

Asking a chatbot about certain sensitive topics also returns an error message box.

“Sometimes the AI generates a reply that doesn’t meet our guidelines,” it warns, with a frowny face.

Character.AI lists a range of prohibited topics in its safety policy, including abusive, defamatory, discriminatory, obscene or sexual content.

“We believe in providing a positive experience that enriches our users’ lives while avoiding negative impacts for users and the broader community,” it says.

“We recognise that these technologies are quickly evolving and can raise novel safety questions. The field of AI safety is still very new, and we won’t always get it right.”

Originally published as ‘I need to go outside’: Young people ‘extremely addicted’ as Character.AI explodes