AI campaigner says CEOs are ‘freaking out’ about rapid advancement as humanity approaches point of no return

There are fears that some of the world’s most powerful corporations are “hiding the risk” of the seemingly unstoppable rise of AI.


Skeptics of AI’s seemingly unstoppable rise are still rallying against some of the world’s most powerful corporations as artificial intelligence marches rapidly into our daily lives.

A leading scientist and artificial intelligence campaigner has slammed the major companies for ushering in what they insist is our inevitable future, claiming they have hoodwinked the world into ignoring the very real existential threat posed by increasingly powerful technologies.

The promised benefits are remarkable, to say the least. Artificial intelligence is already being tipped to revolutionise a number of industries, with backers aiming to eliminate human error and make the world safer.

But there is an elephant in the room being dodged by the major players in the AI space, according to leading scientist Max Tegmark, an MIT physicist and president of the Future of Life Institute.

We are now sitting before an event horizon unlike anything we’ve seen before. Some have compared it to the development of the nuclear bomb, which sent an almighty shiver through the world when the US flattened Hiroshima and Nagasaki in 1945.

But developing a supercomputer that can understand us better than we understand ourselves is far more complex than building even the most powerful weapon of war ever developed.

In short, nobody on the planet can say for certain what will happen once we develop artificial general intelligence (AGI) and deploy it across the globe.

Tech giants are now in a technological race to achieve AGI, which in theory could understand the world as well as humans do and teach itself new information at a rapid pace. Once that is achieved, there is no telling how fast it will evolve, and whether its actions will always be in the best interests of human beings.

Speaking at the AI summit in Seoul, Tegmark stressed the urgent need for strict regulation of the creators of the most advanced AI programs before it’s too late.

He said that once we have made AI that is indistinguishable from a human being, a milestone known as passing the “Turing test”, there is a real threat we could “lose control” of it.

“In 1942, Enrico Fermi built the first ever reactor with a self-sustaining nuclear chain reaction under a Chicago football field,” Tegmark said.

“When the top physicists at the time found out about that, they really freaked out, because they realised that the single biggest hurdle remaining to building a nuclear bomb had just been overcome. They realised that it was just a few years away – and in fact, it was three years, with the Trinity test in 1945.

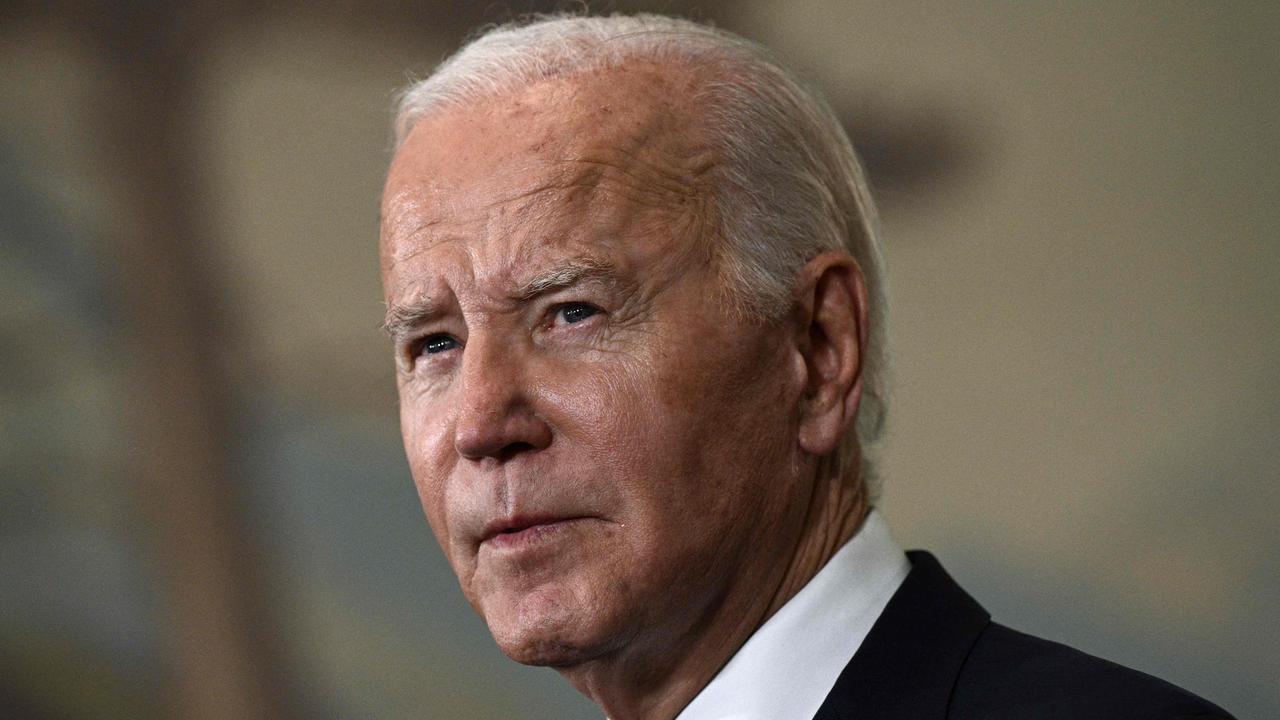

“AI models that can pass the Turing test are the same warning for the kind of AI that you can lose control over. That’s why you get people like Geoffrey Hinton and Yoshua Bengio – and even a lot of tech CEOs, at least in private – freaking out now.”

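The release of OpenAI’s GPT-4 model in March last year showed how close the risk had come, prompting Tegmark’s Future of Life Institute to publish an open letter calling for a six-month pause on training AI systems more powerful than GPT-4. Despite thousands of signatures from experts, including Hinton and Bengio, two of the three “godfathers” of AI, no pause was achieved.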

Hinton made headlines last year after announcing his resignation from Google, citing growing concerns about the potential dangers in the industry.

Hinton, then 75, expressed regret about his work in a statement to The New York Times, warning that chatbots powered by AI are “quite scary”.

He described the “existential risk” AI poses to modern life, saying there was a very real possibility of corrupt leaders using it to interfere with democracy, among several other concerns.

“Our letter legitimised the conversation. Seeing figures like Bengio express concern made others feel it was okay to worry too. Even my local gas station attendant told me he’s worried about AI replacing us after that,” Tegmark continued.

But the focus of AI regulation has shifted. In Seoul, only one of the summit’s three “high-level” groups addressed safety, examining a wide range of risks from privacy breaches to potential catastrophic outcomes.

Tegmark argues that this dilution of focus is deliberate, coming as the industry goes through an astronomical growth period. By 2032, the artificial intelligence industry is expected to be worth around $3.79 trillion.

“That’s exactly what I predicted would happen due to industry lobbying,” he said. “In 1955, the first studies linking smoking to lung cancer were published, but it wasn’t until 1980 that significant regulation was introduced, thanks to intense industry pushback. I see the same happening with AI.”

On the other side of the fence are the “accelerationists”, who believe the world’s AI developers should push forward as fast as they can because “artificial superintelligence is inevitable”.

“I’m absolutely an accelerationist and very anti-pausing because it achieves nothing,” one expert working in the field who wished to remain anonymous told news.com.au.

“I’m building as fast as I can and everyone else should be too.”

The source said that while they “broadly agreed” that some major corporations are “hiding the threat”, some of the large players are taking safety “extremely seriously”.

“This is obviously a conversation that is going to go on for the rest of our lives,” the source said.

The same developer predicted to news.com.au that “50 per cent of the workforce will be automated in five years”, meaning governments will have to scramble for solutions to one of the greatest societal shifts since the introduction of the internet.

Last year, the World Economic Forum’s Future of Jobs Report gave a more conservative warning, predicting structural churn affecting 23 per cent of jobs over the next five years, as new roles emerge and existing ones decline.

The report summed up the next chapter in one word. Disruption.

The report identified technology adoption and increasing digitisation as key drivers of this labour market churn. Of the 673 million jobs covered by the report’s dataset, respondents expect structural growth of 69 million jobs and a decline of 83 million, a net loss of 14 million jobs, or about 2 per cent of current employment.

The rise of artificial intelligence and other technologies is a major force behind these predicted job losses.

A whopping three-quarters of the 803 companies surveyed are planning to adopt big data, cloud computing, and AI technologies in the next five years.

Respondents predicted that 42 per cent of business tasks will be automated by 2027. They also estimated that 44 per cent of the current workforce’s skills “will be disrupted in the next five years”, with as many as 60 per cent of workers “requiring more training” within that time.

Strap in, folks.
