Des Houghton: Premier Steven Miles’ deepfake incident a harbinger of incoming AI ‘tsunami’

Are we about to become slaves to the machine? Artificial intelligence offers countless benefits, but it doesn’t come without risks, as Premier Steven Miles discovered, writes Des Houghton.

Des Houghton


First the good news: Artificial intelligence will make countless meddling government bureaucrats, clerks and university academics redundant.

The bad news is that those left with jobs will have a huge welfare bill to pay until AI starts creating new jobs to replace the ones it has eliminated.

The International Monetary Fund warns an AI “tsunami” will affect 40 per cent of jobs around the world within two years.

More bad news: AI models can generate false information, which could spread disinformation and harm the democratic process, a University of Queensland expert warns.

More good news: Microsoft founder Bill Gates is optimistic that artificial intelligence can help solve just about everything, from boosting crop yields to end famine to enhancing renewables to ease climate change.

His only fear was that too many “bad guys” would use artificial intelligence for sinister purposes.

Gates told US print and electronic media that the arrival of artificial intelligence was a significant moment in the history of technology.

Artificial intelligence is already heralding a new age for journalism.

I’ll take a punt and say it will be the biggest advance since the invention of the printing press.

More good news: News Corporation (publisher of The Courier-Mail) has signed a historic agreement to deliver news content from its publications to OpenAI, one of the leading players in artificial intelligence.

OpenAI will have access to our extensive archive, which includes content from The Times, The Sunday Times, The Australian, The Wall Street Journal, The Sun and the New York Post.

As artificial intelligence expands, so does the spread of disinformation and deepfakes. So the media’s watchdog role will be more important than ever.

“Social media can help a lie make several circuits of the Earth before the truth has got its shoes on,” Ben Wright warned in The Telegraph in London this week.

The jobs threat dominated the media in the US and Europe this month.

Kristalina Georgieva of the International Monetary Fund said AI was hitting the global labour market “like a tsunami” and likely to impact 60 per cent of jobs in advanced economies and 40 per cent of jobs around the world in the next two years.

“We have very little time to get people ready for it, businesses ready for it,” she told the Swiss Institute of International Studies.

“It could bring a tremendous increase in productivity if we manage it well, but it can also lead to more misinformation and, of course, more inequality in our society.”

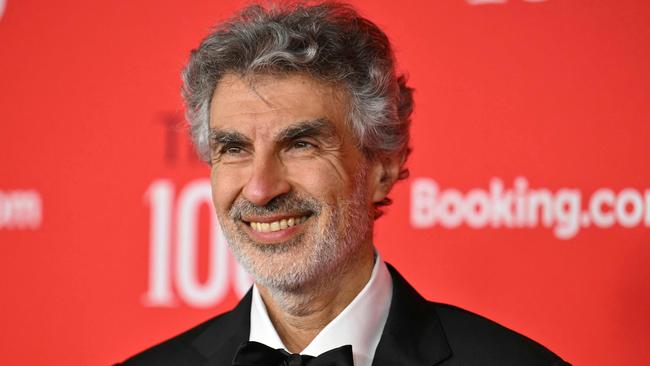

The world got another wake-up call this week when Professor Yoshua Bengio, an AI expert, told London journalists he was concerned that most governments were “sleepwalking and not sufficiently conscious of the magnitude of the risks”.

Bengio heads a team of 75 experts from 30 nations studying the impacts of artificial intelligence for the United Nations and the European Union.

A computer scientist described by the BBC as the “godfather of artificial intelligence” says the British government will have to establish a universal basic income for workers left behind.

Professor Geoffrey Hinton told the BBC that a benefits reform giving fixed amounts of cash to every citizen would be needed because he was “very worried about AI taking lots of mundane jobs”. While AI would increase productivity and wealth, the money would go to the rich, he said, and not to the people whose jobs were lost. Hinton was also concerned that governments were unwilling to rein in the military use of AI.

Meanwhile, debate rages in the British Parliament about whether AI can be used to contain terrorism or will instead enable it.

Law enforcement agencies warn that cybercriminals who steal identities and hack databases could have a field day with AI if it is not properly regulated. Which will be the first bank robbed by AI?

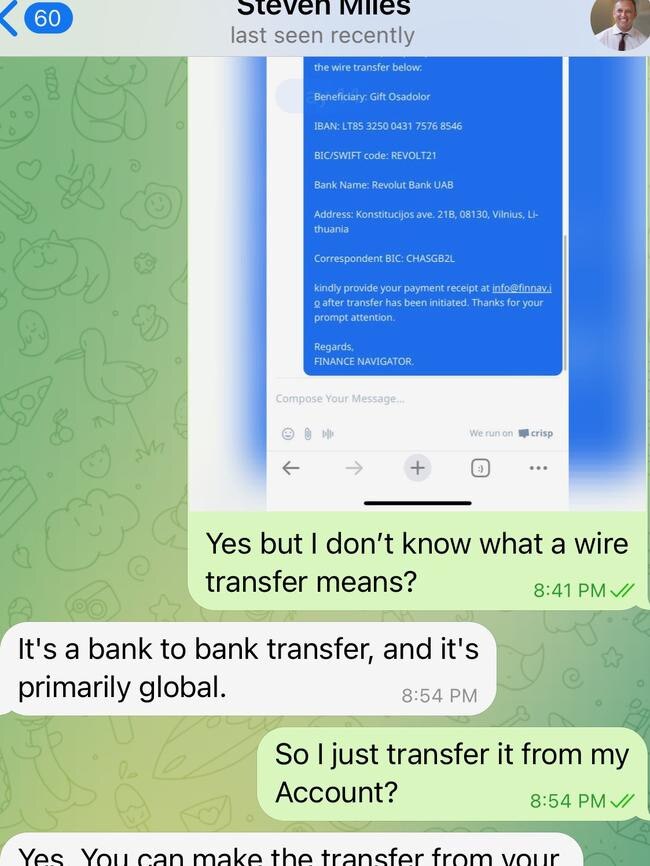

We got a glimpse of the dangers ahead when it was revealed AI had been used to create a fake phone message purporting to come from Premier Steven Miles, whose voice was stolen and remixed.

CHOICE consumer data advocate Kate Bower says all of us are at risk.

“AI systems can supercharge scams, create misinformation and harmful deepfakes, and when used in essential services like insurance, can exacerbate discrimination and unfairness,” she said.

“They are also notoriously opaque, making it difficult for consumers to know the risks by simply interacting with a product or service.”

Nevertheless, University of Queensland artificial intelligence lecturer Alina Bialkowski says there will be great benefits from its use.

“Artificial Intelligence can bring potential benefits to government and private industry by automating routine tasks, analysing large data sets for insights, improving decision-making, enhancing customer service, and increasing operational efficiency,” she said. “In education, healthcare and transportation, AI can provide insights for better planning, resource allocation, and service delivery. For example, AI-powered traffic management systems could help reduce congestion, while smart healthcare solutions could aid in diagnosing diseases faster and more accurately.

“Much of the hype at the moment is around ‘Generative Models’ that learn data distributions, and are then able to generate new data based on a user’s input prompt. These include Large Language Models (LLMs) such as ChatGPT, which take text-based prompts as input and generate detailed text-based responses, and image generators, which let you describe the image you want and then create it.

“These models have already been widely adopted in applications including creative tasks (art, music, video), programming code, generating documentation in legal services, as well as journalism, marketing, and education.”

Bialkowski says there are also risks in AI applications, particularly around bias and verifying correctness of the outputs.

“For example, LLMs may appear to write very well, but confidently output incorrect facts, make errors in maths calculations and even generate false or misleading accounts of things that haven’t occurred, which is known as ‘AI hallucination’,” she says.

“Users should be careful to verify the outputs and not use AI blindly. The outputs of the models depend on the data they are trained on and can perpetuate existing inequalities or replicate existing biases in data, resulting in errors or exclusion of minority groups.

“For example, speech recognition models may struggle to understand accents, or skin cancer detection models may miss suspicious lesions in different skin tones.”

“Privacy is another important consideration. Vast amounts of data are required to train and operate AI-based models, and the data may contain sensitive information about individuals, including personal details, location data, and health records. For example, ChatGPT and other LLMs offer free tiers, but at the cost of using your data for training future models.”

The Albanese government has set up a temporary expert group to advise on “testing, transparency and accountability measures for AI”. It is headed by Professor Bronwyn Fox, the CSIRO’s Chief Scientist.

SLAVES TO MACHINES

Are we about to become slaves to machines that hire and fire by algorithm? ACTU assistant secretary Joseph Mitchell was outspoken at a Senate inquiry last week when he called for workers to be at the centre of moves to regulate AI in Australia.

“We risk a future where the rights fought for over generations by working people are undermined by the adoption of new technologies,” he said.

“The risks are clear: workers are being subjected to unreasonable, unblinking surveillance, being hired and fired by algorithm, having their creative output stolen by companies, and being discriminated against by bosses’ bots.”

NUCLEAR CONTROL WARNING

The British Parliament was told last week that AI could spark a new arms race. Lord Lisvane told the House of Lords: “A key element in pursuing international agreement will be prohibiting the use of AI in nuclear command, control and communications. On one hand, advances in AI offer greater effectiveness.

“For example, machine learning could improve detection capabilities of early warning systems, make it easier for human analysts to cross-analyse intelligence, surveillance and reconnaissance data, and improve the protection of nuclear command, control and communications against cyberattacks.

“However, the use of AI in nuclear command, control and communications could spur arms races or increase the likelihood of states escalating to nuclear use during a crisis. AI will compress the time for decision-making. Moreover, an AI tool could be hacked, its training data poisoned or its outputs interpreted as fact when they are statistical correlations.”