TikTok feed curated for a 13-year-old user rife with dark content within 20 minutes of signing up

TikTok may be all the rage on social media but there is a disturbing warning that comes with it.

Teenagers as young as 13 are being fed distressing content linked to suicide, extremist behaviour and drug use within just 20 minutes of signing up to social media giant TikTok.

An investigation, which involved signing up for a new TikTok account as a 13-year-old, has revealed how online algorithms are luring children along dark pathways through TikTok’s “For You” feed, based on geographic location and interactions with trending videos.

TikTok guidelines state that content created by people aged under 16 years old is not eligible to appear on the For You feed and that harmful content is restricted for teenage users.

However, it took just 20 minutes of scrolling before the relatively lighthearted video content took on a disturbing twist.

In one video, an empty night-time street scene is captioned with a message about “losing interest in everything”.

Another video zooms in on a flyer with the words, “sometimes we don’t want to heal because the pain is the last link to what we have lost”.

The subject in another video implies that the only way they can stay alive is by using a substance that is slowly killing them, and in a separate video a person casually speaks about how they tried to die by suicide as a teenager.

Child psychologist Michael Hawton said that while low moods were “part and parcel of the human condition – even for children”, the results of the investigation showed why parents needed to supervise children using social media.

“The problem is that there are always multiple solutions to these issues such as: allowing the tide of emotion to pass, talking to a friend, thinking through things, talking to oneself or seeking out guidance from a mentor,” he said.

“When computer algorithms drive children or tweens into just one solution, this is a problematic outcome for a young mind, limiting their perceived options for an alternate solution.

“Parents can and should take steps to protect children from these algorithmic pathways that result in harm.”

Drug references also featured heavily in the algorithm, with one person giving advice in a video about how long cannabis will stay in the system and be detected in roadside drug tests. A video with 71,000 shares had the caption “alcohol is the question and the answer is yes.”

Another video discussing suicide was followed directly by a video about the proof of an afterlife.

A video featuring a woman talking about difficulties she had taking her medication was followed by a video of someone talking about how they are going down a dark path referencing the hashtag “#substances”.

TikTok removed at least one account for breaching guidelines after it was flagged by The Courier-Mail.

A TikTok spokeswoman said the platform directs people to Lifeline when a user searches for words or terms related to suicide or self-harm.

“We care deeply about the health and wellbeing of our community and do not allow content depicting, promoting, normalising, or glorifying activities that could lead to suicide or self-harm,” the spokeswoman said.

“TikTok is a platform that allows the community to discuss emotionally complex topics in a supportive way, as long as they do not breach our community guidelines.”

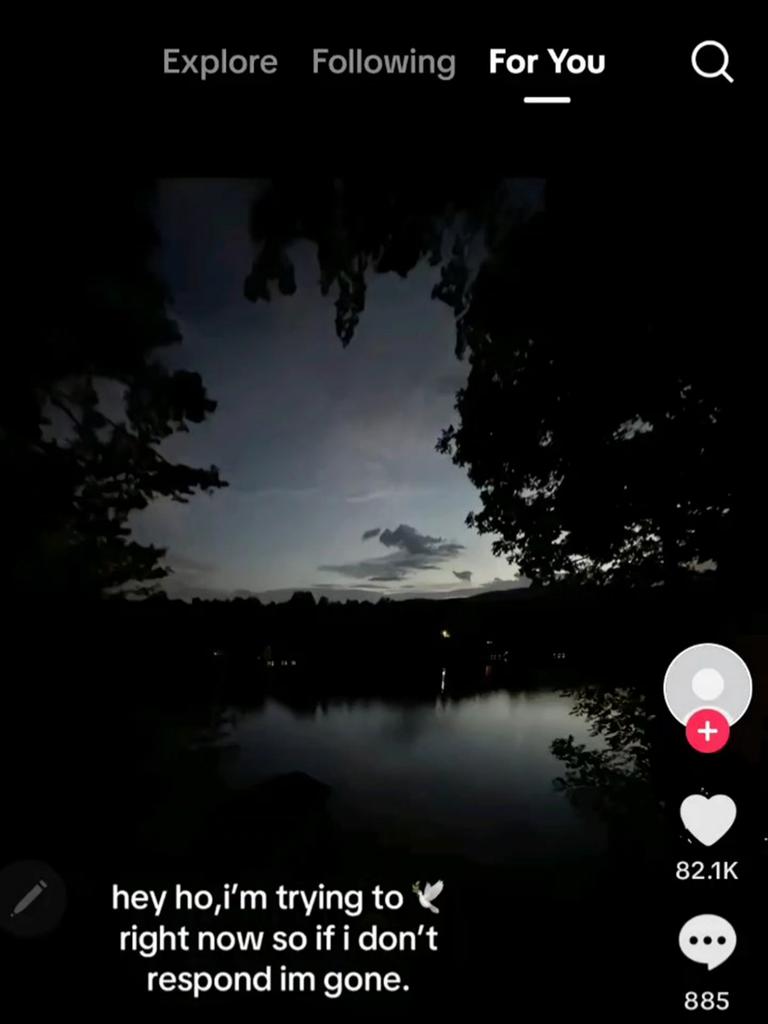

Videos discussing suicidal ideation often receive an alarmingly high amount of engagement.

One video showed waves on the ocean accompanied by an uploaded message to the filmmaker’s family that said: “If I passed away, just remember that your little girl was trying her hardest, but yeah she failed”. It had been shared more than 12,000 times.

Just a few minutes after seeing this video, another with three slides appeared asking people to rate how much they hate themselves. It had more than 59,000 comments.

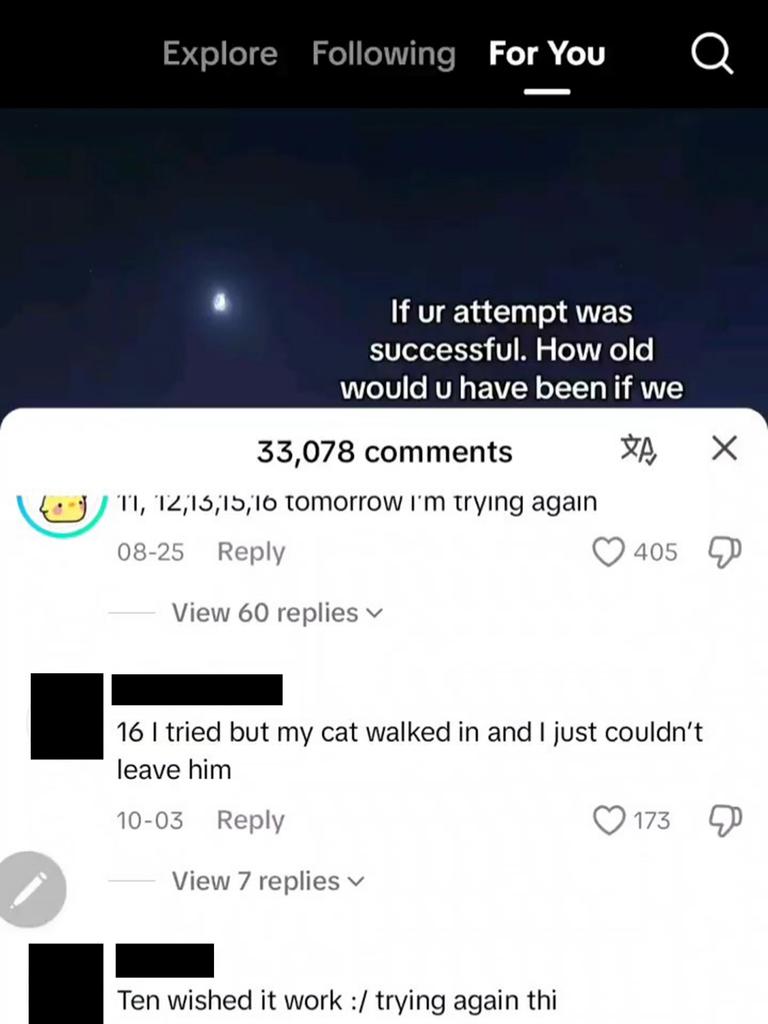

Another video urged viewers to respond with how old they were when they attempted suicide. The majority of those who replied were teenagers and preteens, with one respondent claiming they had attempted suicide five times between the ages of 10 and 12. Many comments said simply “tonight,” while another commenter said they were 10 and wished it had been successful.

Educator and neuroscience strategist Jill Sweatman said teenagers can easily get lost down a rabbit hole when scrolling through social media.

“Such videos begin the process of ‘normalising’ the discussion around suicide and the power of suggestion can be dangerous, especially in a young mind that has not yet developed the crucial part of the brain that makes considered decision, monitors and regulates emotions, self-control, risk taking and impulse control,” she said.

“What happens in the brain with a child’s compulsion to scroll and stay absorbed in more and more videos is that the reward and impulsive parts of the brain enlarge at the expense of the executive part of the brain that controls self-regulation, impulse and emotional control and considered decision-making.”