SA universities give green light to use AI to write assignments

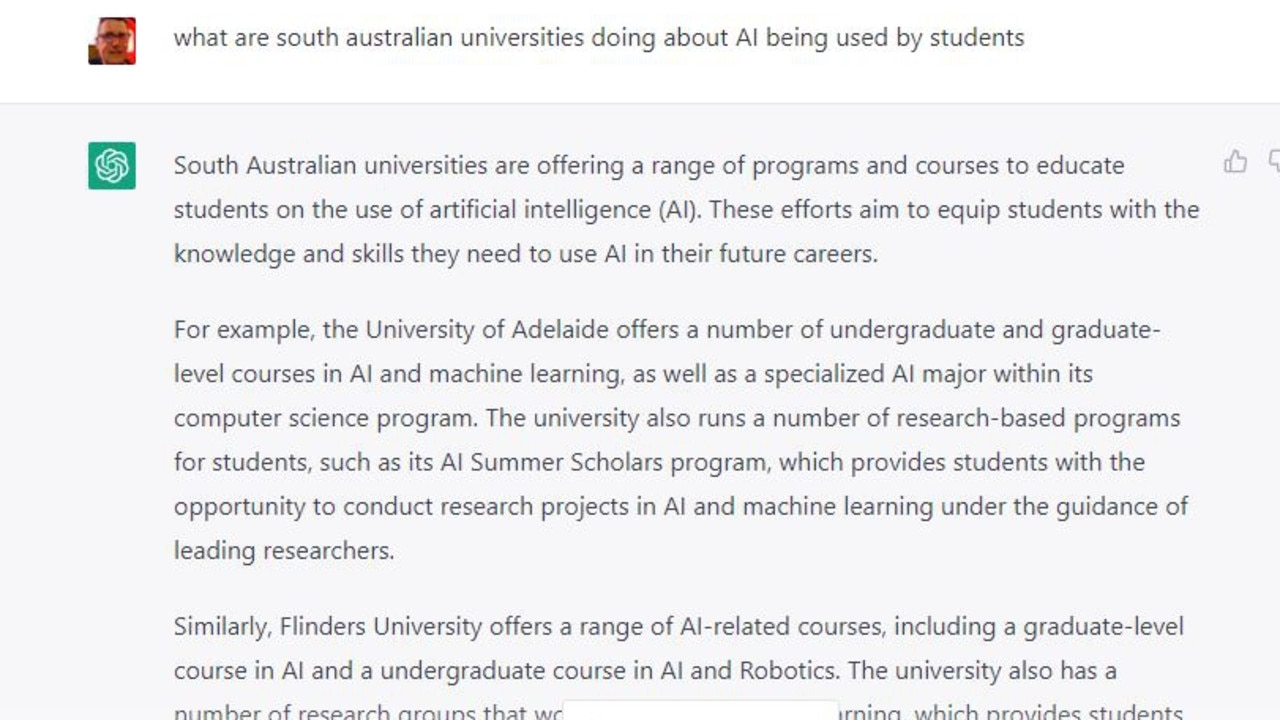

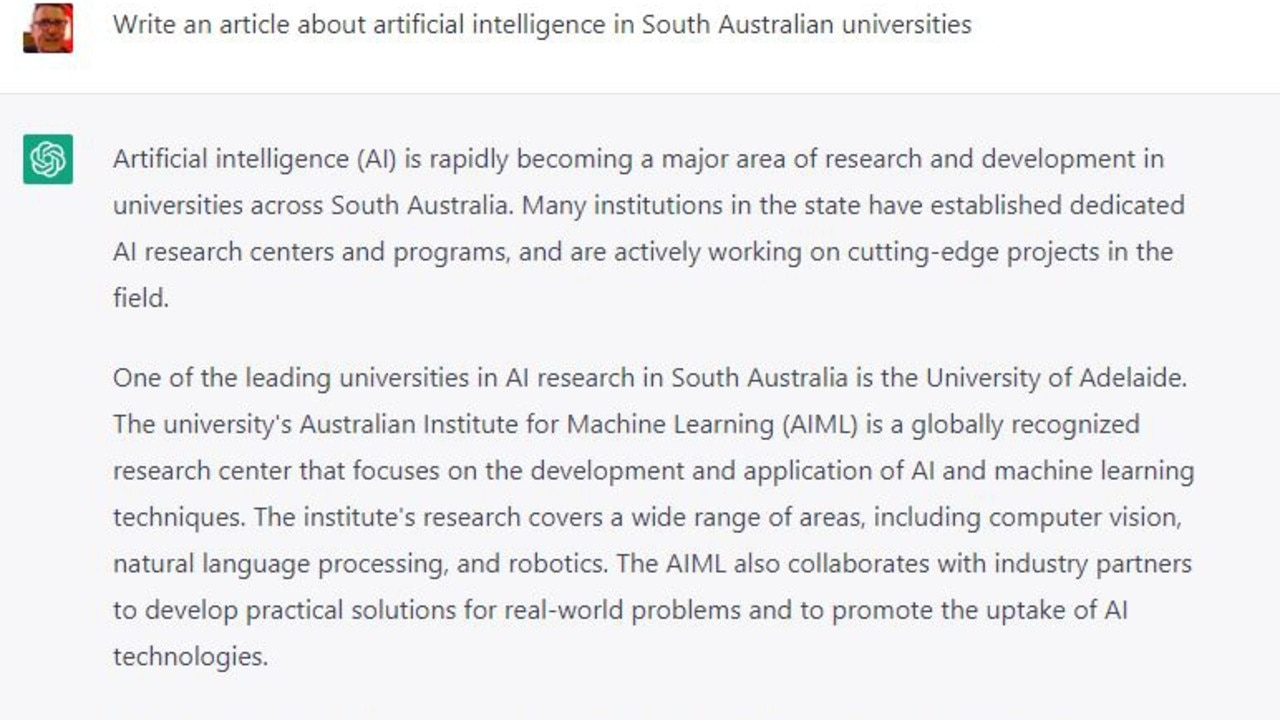

Artificial intelligence is here to stay. Here’s how universities are working with – not against – new, sophisticated technology being embraced by students. See how it works.

Strict rules will be introduced into South Australian universities to allow students to use artificial intelligence to prepare assignments.

Student integrity policies have been updated at the University of Adelaide, Flinders University and UniSA in response to the availability of new software capable of instantly producing written content.

Under the changes, students will be permitted to use AI programs as part of their studies but will face disciplinary action if they do not disclose that use to university staff.

One new program, ChatGPT, has become an international phenomenon since its existence became known last month following a soft launch in the United States.

Its overnight popularity has forced educational institutions to decide whether they will attempt to ban the use of AI during academic study or embrace it as part of a global technological revolution.

Flinders University deputy vice-chancellor Professor Romy Lawson said it had decided to educate students on the use of AI – and the new rules surrounding its use.

“This is our future and it is here,” she said. “It is where the world is going so we need to embrace it, not ban it.”

Professor Lawson said trying to stop students from using artificial intelligence would be similar to unsuccessful attempts to ban calculators in classrooms.

“The use of AI is a new digital literacy which we should teach in the same way we did when Google was first introduced,” she said.

Professor Lawson said Flinders would not tolerate any students who used AI programs like ChatGPT to cheat.

“We will educate students about ethical practices and we will educate staff about how to assess assignments to detect cheating,” she said.

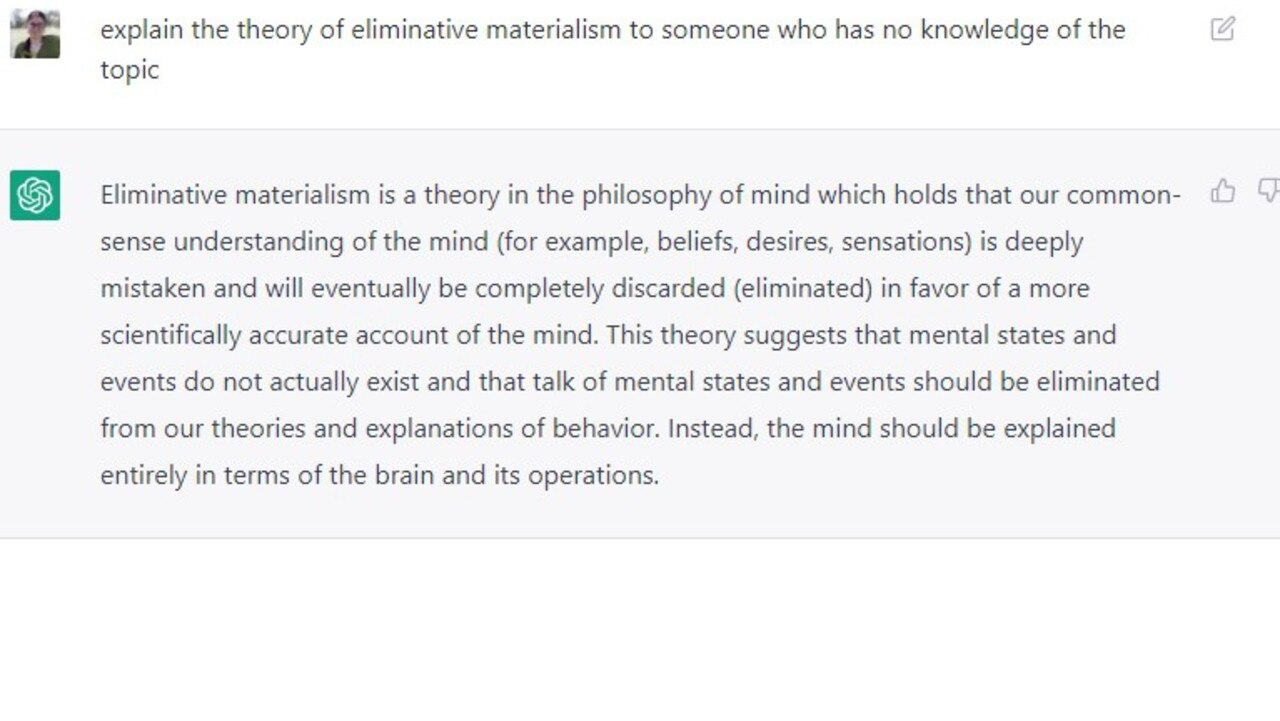

“Students will be asked to exercise skills such as critical analysis and verify any information they obtain through AI.

“They will need to provide evidence that they have conducted research and provide references.”

Professor Lawson said artificial intelligence was unable to provide the “human approach” which students could offer.

“It may allow us to provide more humanistic education where we can ask for emotions, critical analysis and ethical judgment, which we want our students to have,” she said.

UniSA chief academic officer Professor Joanne Cys said it would also take an educative approach to the use of artificial intelligence.

“We want students to understand how to use artificial intelligence tools in a responsible and ethical way – and the importance of academic integrity – not only as students, but as professionals after they graduate,” she said.

Professor Cys said UniSA used “good assessment design principles that ensure students demonstrate authentic learning”.

“We are continuing to update resources for staff to support them to design assessment tasks that promote academic integrity,” she said.

University of Adelaide deputy vice-chancellor (academic) Professor Jennie Shaw said it took academic integrity “very seriously”.

“Artificial intelligence is here to stay so our priority is to educate our students and staff to use AI appropriately,” she said.

“Conversely, they will then understand that they will be in breach of the university’s academic integrity policies if they are found to be using AI programs inappropriately.”

Professor Shaw said the incidence of cheating by students generally was low, with only two per cent of students at the university caught last year.

“Our integrity policies and practices have been referred to as being sector leading,” she said.

“The university uses anti-plagiarism software to detect the similarities that AI-generated papers include, such as errors in sentence structure, vocabulary and fact.”

Professor Shaw said the university also was “constantly” re-evaluating how it assessed assignments by students.

“This may, for example, include answering questions focused on recent events or examples, as AI is less useful in these areas,” she said.

Another strategy to combat cheating was ensuring all exams, whether taken in person or online, were invigilated.

“Oral and practical exams are used in many areas, which reduces the potential for cheating,” she said.

“Our main aim is to provide a good education for our students and to have them leave us with strong content knowledge and understanding.”

The Education Department wanted students to continue completing their work without using artificial intelligence.

A spokeswoman said schools had the ability to individually block websites which they did not believe were appropriate.

“Schools also focus on teaching students the importance of doing their own work, thinking critically for themselves and academic integrity,” she said.

“Specific websites, such as artificial intelligence (AI) tools, can be blocked on devices that connect to a school network, either centrally by the department or locally by an individual school,” she said.

The spokeswoman said schools “don’t hesitate in addressing suspected cases of AI use, and we continue to monitor new and emerging tools that need to be blocked.”

‘It makes a lot of sense’

Computer science student Laura Savaglia believes universities have made the right decision by allowing the use of artificial intelligence.

The 25-year-old from Lockleys has become familiar with the new software program, ChatGPT, through researching its capabilities over the past two years.

“The latest version is a new variation which is a lot better with context,” she said. “It still makes mistakes which need to be corrected so you can’t just use it without checking things.”

Ms Savaglia, who is president of the Flinders University computer society, said while AI would become a “useful tool” academically, it was important there were rules surrounding its use.

“It makes a lot of sense to me because it is coming and the latest version of ChatGPT is almost perfect,” she said.

“As long as it is being disclosed by students and they are honest about using it, then I really can’t see why it should become a problem,” she said.

“There are certain skills you need to use it properly, like verifying facts, so I don’t think it should be banned. It is the future and it is here so we need to learn to live with it.”

Experts from UniSA will host a forum for parents, teachers and students on the implications of AI for education on Saturday, February 11, from 10am to 2pm. Tickets and more information are available here.